Builder uses AI (Artificial Intelligence) in certain features and workflows to help customers speed up design and development time.

This document covers how Builder incorporates AI and how Builder's AI workflows engage with your data.

Whether Builder uses customer data to train its AI depends on your plan:

- Free plans: Builder may use customer data to train and improve the AI.

- Self-serve paid plans: Customer data may be used to train the AI only if AI content training is toggled On in the customer’s Settings. For instructions on how to control this setting, read Toggle AI content training, later in this document.

- Enterprise plans: Builder does not use customer data from Enterprise accounts for AI training or improvement purposes.

Builder makes sure that customer data:

- Cannot be accessed or leveraged by other customers

- Does not contain any knowingly submitted personal information

- Is not stored beyond what is necessary for debugging and fixing errors

For features that use external Large Language Models (LLMs), we send those LLMs only your prompt and, if applicable, the text or content being edited. The AI needs this data to fulfill your request. All other AI models we use are entirely internal to Builder. The third-party LLMs we use do not train on your data.

Data is used strictly to provide the requested service. The data in a request has no secondary use.

Current Builder.io privacy policies apply to all Builder features, including AI features. For more detail about AI at Builder, visit AI Terms.

Builder uses two types of AI models:

- Builder's proprietary, privacy-first AI models

- Third-party Large Language Models (LLMs)

Builder's proprietary AI models are built and trained entirely in-house and hosted on Google Cloud. As a result, these models do not share data with any third party other than Google Cloud, which Builder already uses for all of its systems.

Builder uses proprietary AI models to:

- Prepare your Figma file with the Figma plugin

- Import your design

- Make your Figma design responsive in the Visual Editor

- Generate fast code

Some, but not all, of Builder's AI features use fine-tuned external LLMs. Currently, Builder uses LLMs to:

- Generate images

- Generate and edit content

- Generate and edit text

- Generate quality code

Builder currently uses OpenAI's models and Anthropic's Claude. We may also use open-source LLMs, such as Mistral or Llama 2. Enterprise plan customers can choose which LLMs to use, including their own LLM, or turn off LLM use entirely.

By understanding which Builder features use AI, how they use AI, and which models they use, you can make informed decisions and be intentional with your workflow.

Although a growing number of Builder features use AI, you can still create and manage your Builder content without using any AI-enhanced features.

The features in which AI is available in Builder include:

- Visual Copilot

- Text generation and editing

- Content generation and editing

- Generating quality code

- Image generation

Image generation, text generation and editing, and content generation and editing use a fine-tuned LLM to process requests. The following sections show how a prompt is submitted to the LLM and how the resulting image, text, or content is returned.

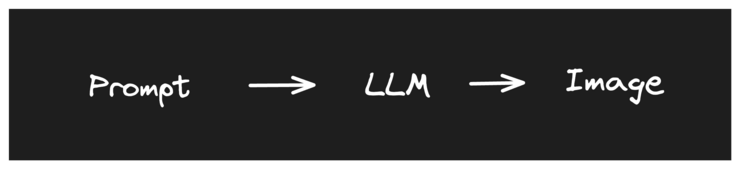

When you use the Visual Editor to generate an image, the prompt that you give in the AI Generate dialogue goes to the LLM, which in turn creates the image. No additional data is sent.

The diagram below shows this flow:

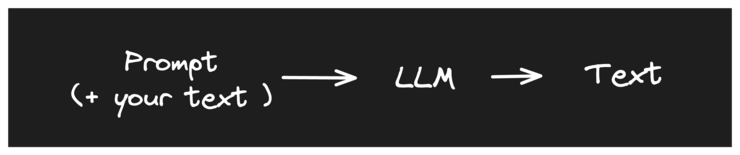

When you use the Visual Editor to generate or edit text, the prompt that you give in the Visual Editor AI dialogue goes to the LLM, which in turn creates the copy. If you're editing existing copy, the original text is included in the prompt.

The diagram below shows this flow:

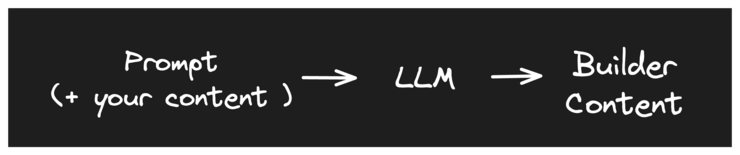

When you use the Visual Editor to generate or edit content, the prompt that you give in the Visual Editor AI dialogue goes to the LLM, which in turn creates the content. For editing, the specific content being edited is converted to code and sent.

The diagram below shows this flow:

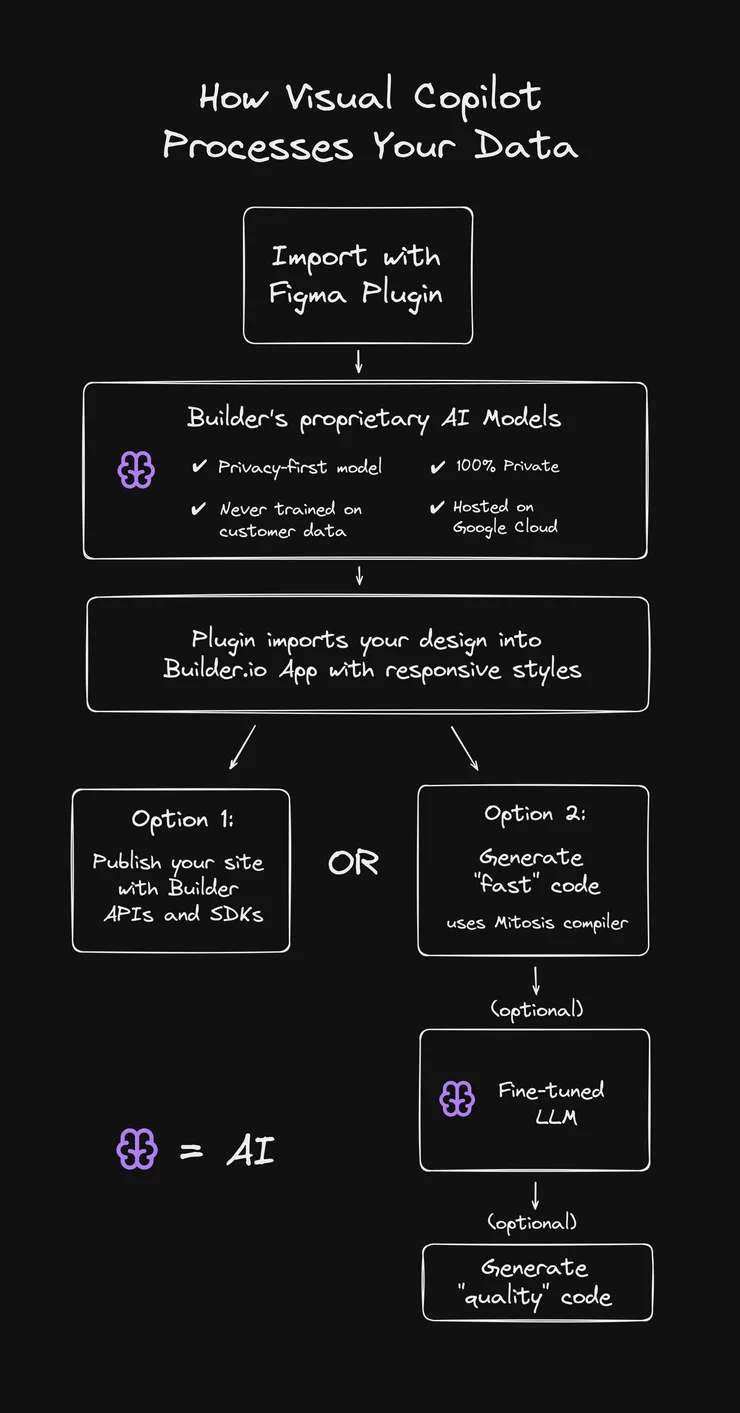
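The three flows above share one rule: a request carries your prompt plus, only when you're editing, the text or content being edited. As a hypothetical illustration (the type names, field names, and functions below are assumptions made for this sketch, not Builder's actual request format), that rule might be modeled like this:

```typescript
// Hypothetical illustration only: these types and fields are NOT Builder's
// real request format. They model the rule described above: send the prompt
// and, when editing, the item being edited. Nothing else.

type ImageRequest = { kind: "image"; prompt: string };
type TextRequest = { kind: "text"; prompt: string; originalText?: string };
type ContentRequest = { kind: "content"; prompt: string; contentAsCode?: string };
type LlmRequest = ImageRequest | TextRequest | ContentRequest;

// Image generation: the prompt is the only user data sent.
function buildImageRequest(prompt: string): ImageRequest {
  return { kind: "image", prompt };
}

// Text: existing copy is attached only when editing, not when generating.
function buildTextRequest(prompt: string, originalText?: string): TextRequest {
  return originalText === undefined
    ? { kind: "text", prompt }
    : { kind: "text", prompt, originalText };
}

// Content: when editing, the selected content is assumed to have already
// been converted to code (that conversion is outside this sketch).
function buildContentRequest(prompt: string, contentAsCode?: string): ContentRequest {
  return contentAsCode === undefined
    ? { kind: "content", prompt }
    : { kind: "content", prompt, contentAsCode };
}

// Lists the user-data fields in a request (everything except the `kind` tag).
function fieldsSent(req: LlmRequest): string[] {
  return Object.keys(req).filter((k) => k !== "kind");
}
```

For example, `fieldsSent(buildTextRequest("Make this friendlier", "Welcome to our store"))` returns `["prompt", "originalText"]`, while `fieldsSent(buildImageRequest("A red bicycle"))` returns only `["prompt"]`. Using a discriminated union makes it explicit, per request type, which fields can ever be present.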

Builder's Figma plugin uses AI to handle labor-intensive tasks in moments. This workflow uses AI to create a responsive design in Builder's Visual Editor from the Figma import.

When you use Builder's Figma plugin, Builder's proprietary AI models and third-party LLMs are involved at different points in the process:

- Builder's Figma plugin sends your design to Builder's proprietary AI models. These models are hosted on Google Cloud (as is all of Builder's infrastructure) to ensure privacy and data security.

- Your design is imported into Builder.

At this point, you can publish your site using Builder's APIs and SDKs. This option relies only on Builder's private, in-house AI models.

Alternatively, you can generate code. When generating code, you have two options:

- Fast code: this uses a Mitosis compiler without AI.

- Quality code (optional): this uses a fine-tuned third-party LLM. The third-party LLMs that Builder uses do not train on your data.

When the Builder Figma plugin generates code, it bases the code strictly on your Figma file — not on anyone else's code or designs.

By default, AI features are enabled in Builder. These include AI content and image generation, AI content editing, and mini-app generation.

To toggle all AI features for all users in the current Space:

- You must be an Admin.

- Go to Space Settings.

- In the AI Features section, toggle Allow AI features off or on as needed.

- For an Enterprise Space, when Allow AI features is toggled on, an additional Visual Editor AI toggle appears. Non-Enterprise Spaces do not have the Visual Editor AI toggle.

When the AI features or the Visual Editor AI are toggled off in a Space, the AI tab in the Visual Editor displays a note that an Admin has turned the feature off. Admins also see a Go to Settings button so they can toggle the feature back on. If you don't see this button, contact your Admin.
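The toggle behavior described above can be summarized as a small decision model. This is a sketch under stated assumptions: the `SpaceAiSettings` shape and all function names are hypothetical, and it assumes that in a non-Enterprise Space the Visual Editor AI is available whenever Allow AI features is on.

```typescript
// Hypothetical model of the Space-level AI settings; not a Builder API.
interface SpaceAiSettings {
  isEnterprise: boolean;
  allowAiFeatures: boolean; // "Allow AI features" (on by default)
  visualEditorAi: boolean;  // Enterprise-only toggle; ignored otherwise
}

// The Visual Editor AI toggle appears only in Enterprise Spaces,
// and only while "Allow AI features" is on.
function showsVisualEditorAiToggle(s: SpaceAiSettings): boolean {
  return s.isEnterprise && s.allowAiFeatures;
}

// Assumption: outside Enterprise, only the "Allow AI features" switch matters.
function visualEditorAiEnabled(s: SpaceAiSettings): boolean {
  if (!s.allowAiFeatures) return false;
  return s.isEnterprise ? s.visualEditorAi : true;
}

// When AI is off, every user sees a note in the AI tab;
// only Admins also get the "Go to Settings" button.
function aiTabNotice(
  s: SpaceAiSettings,
  isAdmin: boolean,
): { note: boolean; goToSettingsButton: boolean } {
  const disabled = !visualEditorAiEnabled(s);
  return { note: disabled, goToSettingsButton: disabled && isAdmin };
}
```

In this model, an Enterprise Space with Allow AI features on but Visual Editor AI off shows the toggle, disables the AI tab, and offers the Go to Settings button only to Admins.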

The video below shows toggling off the Allow AI features setting in an Enterprise Space.

AI content training is currently in beta.

The ability to toggle the AI content training feature on or off depends on your plan:

| Plan | AI content training status |
|---|---|
| Free | On, cannot be toggled off |
| Self-serve paid | On, but can be toggled off |
| Enterprise | Off, cannot be toggled on |

When AI content training is on, Builder uses your content to train AI models. Regardless of plan, Builder anonymizes this data to protect your privacy.
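The plan rules in the table can be read as a lookup. The following is a hypothetical sketch (the `Plan` values, `TRAINING_POLICY`, and `contentUsedForTraining` are illustrative names, not a Builder API) showing that the customer's Settings choice only takes effect on self-serve paid plans:

```typescript
// Hypothetical encoding of the AI content training table; not a Builder API.
type Plan = "free" | "self-serve-paid" | "enterprise";

interface TrainingPolicy {
  defaultOn: boolean;     // status shown in the table
  userCanToggle: boolean; // whether the Settings toggle has any effect
}

const TRAINING_POLICY: Record<Plan, TrainingPolicy> = {
  "free":            { defaultOn: true,  userCanToggle: false },
  "self-serve-paid": { defaultOn: true,  userCanToggle: true },
  "enterprise":      { defaultOn: false, userCanToggle: false },
};

// Returns whether content may be used for training. `toggle` is the
// customer's Settings choice; it is honored only where the plan allows it.
function contentUsedForTraining(plan: Plan, toggle?: boolean): boolean {
  const policy = TRAINING_POLICY[plan];
  if (!policy.userCanToggle || toggle === undefined) return policy.defaultOn;
  return toggle;
}
```

For example, `contentUsedForTraining("self-serve-paid", false)` is `false`, while `contentUsedForTraining("free", false)` is still `true` and `contentUsedForTraining("enterprise", true)` is still `false`.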

For self-serve paid plans only, you can prevent the AI from using content for training:

- Go to Settings.

- Toggle AI content training to off or on, as needed.

The video below shows these steps.

For more information on AI features in Builder, visit Visual Copilot.