Most interview processes are built for workflows that may soon no longer exist.

Designers get judged on their pixel craft in Figma, not on whether they can ship experiences that hold up in production. PMs are evaluated on tidy specs and docs, not on whether they can own outcomes. Engineers are quizzed on LeetCode, not on how they collaborate across roles.

Those signals made sense when roles were siloed and handoffs were the norm. But product development is shifting fast. With visual IDEs and AI-augmented workflows, designers, PMs, and engineers can all work directly in code.

The problem is simple: we are still hiring for the old world, even as the new one takes shape.

Design interviews are a good example. Static screens can look beautiful while the actual user flow is confusing or broken. Polished prototypes can impress but be impractical to build. And even when a designer nails the intent, the final product often diverges so far during engineering that you cannot tell what the designer deserves credit for.

Hiring for pixel-perfect polish misses the harder skill: guiding work through constraints and compromises into production. That is the difference between a pretty mock and a product that actually works.

PM interviews have their own blind spots. We ask how candidates treat data, how they run rituals, how they manage workflows. Those are valid questions, but they are built on an old model where PMs handed off specs to designers, designers handed off artifacts to engineers, and engineers were left holding the bag. None of it tells you whether someone can thrive in a workflow where roles overlap and everyone touches code.

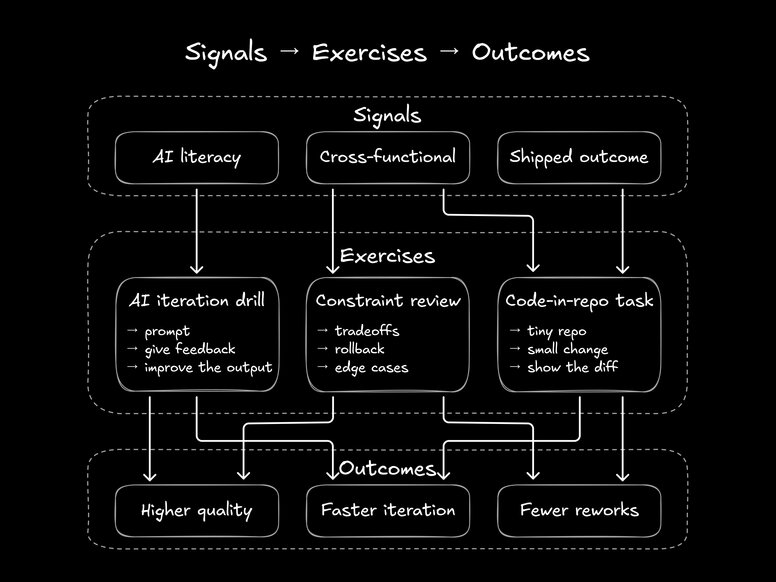

The signals are not wrong, but they are outdated. They reflect a handoff economy that is already ending. The map above connects the signals we care about to the exercises that surface them and the outcomes they predict.

Pick signals first, design exercises to surface them, get outcomes that matter.

AI is already a core skill. The next generation of candidates will be AI-native: fluent in prompting, iterating, and guiding outputs. But the real signal is not whether someone has "tried ChatGPT." It is whether they can use these tools to produce production-grade results.

The most common mistake candidates make is treating AI like a vending machine: one prompt in, one bad output out, and they give up. Strong candidates know the first output is just a starting point. They iterate, refine, and push until the tool produces something usable.

That is why communication skills matter more than ever. Working with AI is a conversation. The people who are articulate, precise, and iterative unlock leverage. The ones who shrug and move on get left behind.

Even a little hands-on technical fluency matters. Candidates who understand the basics of what is easy versus hard to build, or who have tinkered with Git or design systems, are already ahead. They can guide AI outputs and collaborate with engineering in ways that pure craft interviews never reveal.

Enterprises are already catching on. Fortune 5 companies have started building AI competency checks into their interview cycles. Across industries, most executives say they will prioritize candidates with AI skills over more experienced applicants. Canva's cofounder put it bluntly: they are actively hiring AI-savvy college students regardless of whether they finish their degrees, because fluency with these tools matters more than pedigree.

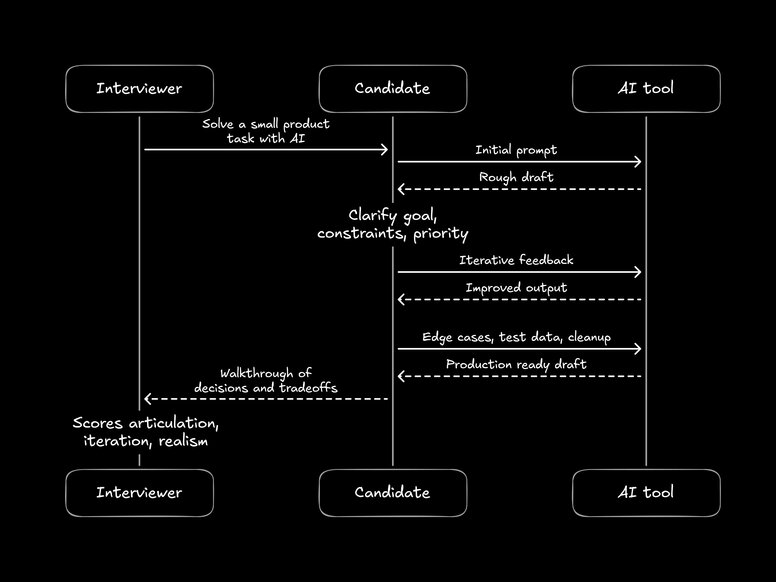

This is what good AI use looks like in an interview: a short loop of clear feedback that turns a rough draft into something you can ship.

AI literacy is articulate iteration, not one prompt and a prayer.

You do not need mythical hires who claim to be brilliant at everything. AI is the force multiplier that turns adaptable generalists and strong specialists into unicorns. The signal that matters is mindset. Does the candidate show curiosity? Have they used AI to produce tangible wins, like a prototype, a side project, or a workflow they improved? Do they have strong beliefs, weakly held? Have they failed, learned, and adjusted?

Craft still matters, but dogmatism kills. "I only work one way" is the worst possible mindset in a world where tools and workflows change every quarter. The best candidates show excitement balanced with realism. They experiment, but they stay grounded in what works.

For designers, this is even trickier. AI design tools are newer and less mature, which makes curiosity and experimentation matter even more. If a candidate has tried to bend emerging tools into their workflow, even if the results were rough, that is a stronger signal than a perfect static portfolio. Figma's own job postings reflect this shift, asking for designers "excited to shape AI-native workflows and define the future of design tools."

This is especially critical in enterprises. Startups can sometimes get away with messy hires and chaotic workflows. At scale, those same mistakes compound. Complexity magnifies every bad fit. Enterprises that keep hiring with old signals will repeat the same bottlenecks, fall behind on delivery, and pay the costs in slower iteration and lost opportunities.

The winners will hire for tomorrow's workflows today: people who are AI-literate, technically fluent, and comfortable working in code.

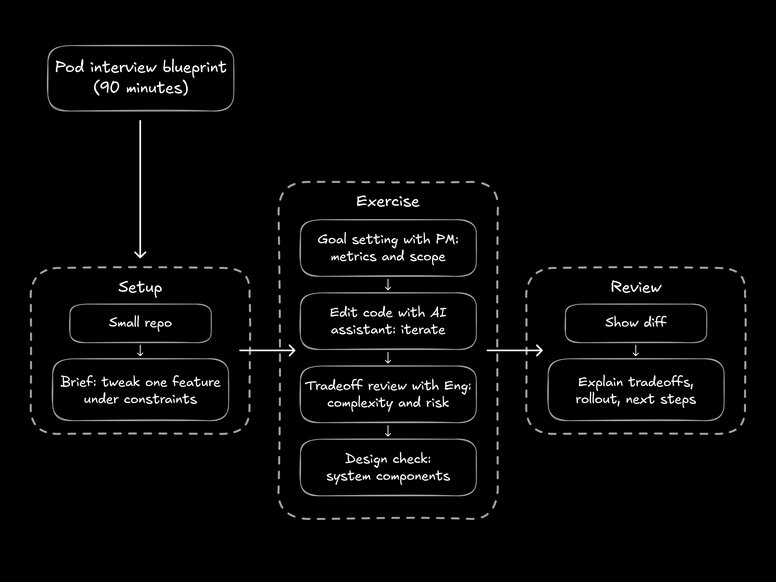

If you want one concrete recipe, run this 90-minute pod exercise and score for ownership, iteration, and production judgment.

One scoped task, real constraints, three collaborators. You collect signal on ownership, AI iteration, and production judgment in under an hour.

Hiring for tomorrow's workflows also means hiring for change management, not just execution. Start by bringing in the people who are eager to adopt new tools. Let them prove the value, share wins, and teach others. Those early hires become catalysts, helping the rest of the team evolve.

Hiring for this era is not about trick interview questions or over-engineered processes. It is about checking for curiosity, ownership, and AI literacy. Ask candidates what real wins they have gotten from AI. Ask what pitfalls they hit and how they got around them. Ask whether they see the shipped product as their problem, or just their part of the pipeline. The answers will tell you more than a perfect Figma file or a pristine PRD ever could.

And then remember: hiring is only half the battle. The other half is giving those hires an environment where they can actually work the way you just hired them to. Start with AI prototyping, where ideas move from text to interactive design in seconds, then put it into practice with Fusion. If you want your next round of hires to deliver at the level you are interviewing for, Fusion is the tool that makes it possible.

Builder.io visually edits code, uses your design system, and sends pull requests.