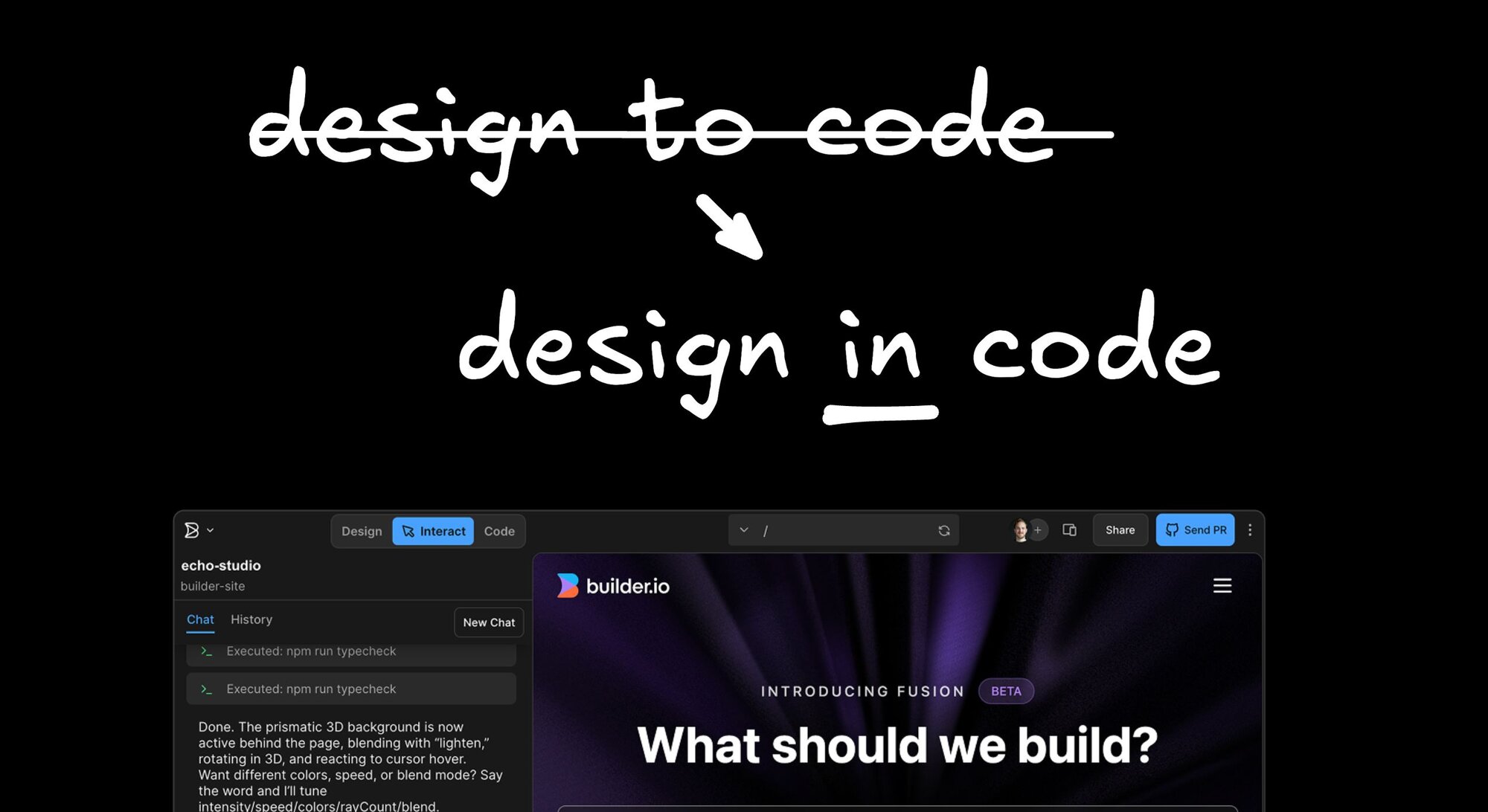

Static design is cool and all. But it is 2025, and we can design in code, and do things that were never possible in design tools.

We can nail the tiniest interactions that make users smile. You do not need to be an expert designer or an expert developer to do this. You just need to learn how to design in code, whether you start from a design or an engineering background, or neither.

So how can you use AI tools to produce rich, interactive designs?

Here are my best tips.

The first thing to know about designing with AI: you do not need to start from scratch. There are great resources online with animated templates, effects, and layouts you can drop in as a base. Then use AI to adapt what you like to your brand and your tech stack.

For instance, sites like 21st.dev give you a huge list of interactive starting points.

For example, I found the starting point for the effect on the current Builder.io homepage on ReactBits, which offers a huge variety of effects. When you find one you like, copy the full source code from its code box, then open your AI tool of choice. I am using Builder.io here.

Prompt it with something like:

> Add this effect as the page background for our app. Here is the code:
>
> [paste the effect code here]

Why does this work? Two reasons.

- LLMs like GPT-5 and Anthropic's Claude are very good at adapting example code to your stack, regardless of the stack the example was written in. Copy from a React example and ship to Next.js, Remix, Vite, or whatever you run; the model translates the patterns.

- They are also good at adapting visuals to your brand. Tell it to use your color tokens, spacing scale, and typography. If you have design tokens already, mention them by name. If not, be explicit with hex values.
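Naming tokens in a prompt works best when the tokens have stable, predictable names. Here is a minimal sketch, assuming a hypothetical token object (the names and hex values are placeholders, not Builder.io's real palette), that flattens nested tokens into CSS custom properties such as `--color-primary` that you can then reference by name:

```typescript
// Hypothetical brand tokens: names and values are placeholders.
type TokenTree = { [key: string]: string | TokenTree };

const tokens: TokenTree = {
  color: { primary: "#7c3aed", primaryMuted: "#a78bfa", surface: "#0b0b12" },
  space: { sm: "8px", md: "16px", lg: "32px" },
};

// Converts camelCase keys to kebab-case: "primaryMuted" -> "primary-muted".
function kebab(key: string): string {
  return key.replace(/[A-Z]/g, (c) => "-" + c.toLowerCase());
}

// Walks the token tree and emits one CSS custom property per leaf value,
// joining the path segments with dashes.
function toCssVariables(tree: TokenTree, path: string[] = []): string[] {
  const lines: string[] = [];
  for (const [key, value] of Object.entries(tree)) {
    const next = [...path, kebab(key)];
    if (typeof value === "string") {
      lines.push(`--${next.join("-")}: ${value};`);
    } else {
      lines.push(...toCssVariables(value, next));
    }
  }
  return lines;
}

// Wraps the variables in a :root block you can drop into a global stylesheet.
const css = `:root {\n  ${toCssVariables(tokens).join("\n  ")}\n}`;
console.log(css);
```

With the variables in place, a prompt can say "use `--color-primary` for the ripple" instead of describing a color in words.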

I let the AI port the code to match how we structure our app. The result was a working liquid ether background in our stack, in real code, not a mock.

Next, I gave feedback to better match our branding:

> Replace the blue and orange with our purple palette. Use the same purples from the primary button on this page. Keep contrast accessible.

As with everything in AI, this is about feedback and iteration. Do not expect perfection on the first shot. Give a little feedback, the same way you would to a person, until you like what you see.

There we go. Now we have an awesome purple ripple that matches our brand.
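The prompt asked the model to keep contrast accessible, but that is worth checking rather than trusting. Here is a small sketch of the WCAG 2.x contrast-ratio formula; the function names are my own, and `#7c3aed` below is only a placeholder purple, not our actual brand color:

```typescript
// Parses "#rrggbb" into [r, g, b] channels in the 0..1 range.
function hexToRgb(hex: string): [number, number, number] {
  const m = /^#?([0-9a-f]{6})$/i.exec(hex);
  if (!m) throw new Error(`Expected #rrggbb, got ${hex}`);
  const n = parseInt(m[1], 16);
  return [(n >> 16) & 255, (n >> 8) & 255, n & 255].map((v) => v / 255) as [number, number, number];
}

// WCAG 2.x relative luminance: linearize each sRGB channel, then weight it.
function luminance(hex: string): number {
  const [r, g, b] = hexToRgb(hex).map((c) =>
    c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4),
  );
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Contrast ratio ranges from 1 (identical colors) to 21 (black on white).
// Order of arguments does not matter: the lighter color goes on top.
function contrastRatio(a: string, b: string): number {
  const [hi, lo] = [luminance(a), luminance(b)].sort((x, y) => y - x);
  return (hi + 0.05) / (lo + 0.05);
}

// WCAG AA asks for at least 4.5:1 for normal-size text.
function passesAA(fg: string, bg: string): boolean {
  return contrastRatio(fg, bg) >= 4.5;
}

console.log(contrastRatio("#ffffff", "#7c3aed").toFixed(2), passesAA("#ffffff", "#7c3aed"));
```

AA relaxes to 3:1 for large text, so if the effect only sits behind headings you can pass a lower threshold.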

A common failure mode when designing with AI is working in a blank sandbox, which gives you generic AI slop. A better alternative is a tool like Cursor or Claude Code that connects to your actual codebase and reuses your existing components, tokens, and design elements.

Those tools require some developer literacy, though. With Builder.io, devs, designers, and PMs get the same effect, no coding background required.

The additional benefit of working in our own codebase is that our creations can actually go live to production users, instead of being thrown onto the infinite pile of ideas that never shipped.

For our latest homepage, I found a planet effect on CodePen, used the same technique to have AI adapt the code to our brand and stack, and brought it in. Then I explored variants.

I played around a lot with particle effects through prompts, trying galaxies, star fields, and fireworks. It was fun, but a bit too busy.

Example prompts I used:

> Change this particle planet to be a galaxy instead.

> Change the galaxy to an interactive starfield.

As you can see, you don’t have to be an expert prompt engineer. Just be clear about what you’re looking for.

I ended up preferring the liquid ether effect. The beauty is that whatever I create, I can open a pull request for the team to review. They can leave comments anywhere. I can ask the Builder.io agent to handle the feedback.

The agent will make the change and push commits. When it looks right, we merge. If you go to Builder.io you can see these effects live and play with them yourself.

When I want ideas and solid starting points, I use:

- ReactBits: reactbits.dev

- 21st.dev: 21st.dev

- CodePen yearly top lists: for example codepen.io/2024/popular/pens

Skim, grab, adapt. Do not reinvent the wheel if a beautiful wheel already exists.

The next critical tip for not making AI slop is to use tools that connect directly to your actual design system.

AI is good at reproducing patterns. The more examples and the more rules you feed it about your specific system, the more it will design and build like you.

You do not want a blank canvas that always rebuilds everything from scratch. You want your actual components, your icons, your tokens, your layout primitives.

In Builder I am literally editing Builder. If I want to prototype something new inside our app, I can just say:

> Create a new app-wide tab called "Team Social". Show a feed of what our team is building in Builder. Use mock data for now. Include items like "UI generated", "pull request sent", "pull request merged". Add comments on each item.

Note: using a good AGENTS.md goes a long way here too.

Because it is inside our repository, the agent uses our components, icons, and tokens by default. You can do similar things in Cursor or Claude Code too. Those are great, but they assume a little more engineering comfort. If you want designers and PMs in the loop, the Builder UI is friendlier.
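Under the hood, a prompt like the Team Social one tends to produce a typed data model plus mock data, so the UI can be built before any backend exists. A minimal sketch of what that might look like; every type, field, and mock value here is hypothetical, not taken from Builder's codebase:

```typescript
// Hypothetical event kinds matching the prompt's examples.
type FeedEventKind = "ui_generated" | "pr_sent" | "pr_merged";

interface FeedComment {
  author: string;
  body: string;
}

interface FeedItem {
  id: string;
  author: string;
  kind: FeedEventKind;
  title: string;
  createdAt: string; // ISO 8601 timestamp
  comments: FeedComment[];
}

// Human-readable labels for each event kind.
const kindLabels: Record<FeedEventKind, string> = {
  ui_generated: "UI generated",
  pr_sent: "pull request sent",
  pr_merged: "pull request merged",
};

// Placeholder mock data; names and timestamps are made up for illustration.
const mockFeed: FeedItem[] = [
  {
    id: "1", author: "Ana", kind: "ui_generated", title: "Pricing page hero",
    createdAt: "2025-01-10T09:00:00Z", comments: [{ author: "Ben", body: "Love the gradient." }],
  },
  {
    id: "2", author: "Ben", kind: "pr_sent", title: "Project pinning",
    createdAt: "2025-01-10T11:30:00Z", comments: [],
  },
  {
    id: "3", author: "Chloe", kind: "pr_merged", title: "Project duplication",
    createdAt: "2025-01-11T15:45:00Z", comments: [{ author: "Ana", body: "Shipped!" }],
  },
];

// Formats one feed line, e.g. "Ben: pull request sent (Project pinning), 0 comment(s)".
function describe(item: FeedItem): string {
  return `${item.author}: ${kindLabels[item.kind]} (${item.title}), ${item.comments.length} comment(s)`;
}

mockFeed.forEach((item) => console.log(describe(item)));
```

When real data arrives, only the source of the `FeedItem[]` array changes; the components that render the feed keep the same contract.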

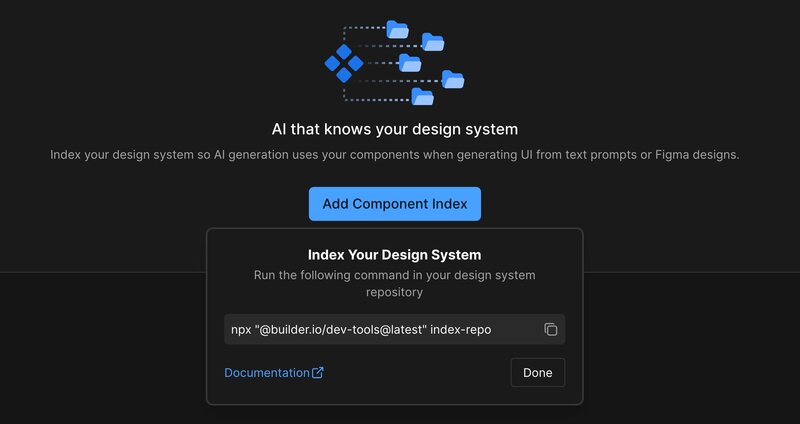

If you have a very bespoke design system, especially one published as a separate package, the AI might not learn it quickly from a few examples. For that, we provide a Design System Index.

It is a script you run in your design system repository. It gathers LLM-optimized instructions about your components, their props, allowed patterns, and correct usage, based on source code, examples, Storybook, and docs. We feed that index to the model every time.

Humans do not always read the docs. The AI can.
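To make the idea concrete: an index like this turns component source and docs into compact rules a model can read on every request. The sketch below is NOT the format Builder's Design System Index produces; the `ComponentDoc` shape, the `@acme/design-system` package, and the rules are all hypothetical:

```typescript
// Hypothetical metadata for one design-system component.
interface PropDoc {
  name: string;
  type: string;
  required: boolean;
  description: string;
}

interface ComponentDoc {
  name: string;
  importPath: string;
  props: PropDoc[];
  dos: string[];
  avoid: string[];
}

// Renders a component's docs as compact, rule-style text that can be
// included in the model's context alongside the user's prompt.
function toInstructions(doc: ComponentDoc): string {
  const props = doc.props.map(
    (p) => `- ${p.name}${p.required ? "" : "?"}: ${p.type}. ${p.description}`,
  );
  return [
    `## ${doc.name}`,
    `Import: import { ${doc.name} } from "${doc.importPath}";`,
    "Props:",
    ...props,
    ...doc.dos.map((d) => `Do: ${d}`),
    ...doc.avoid.map((a) => `Avoid: ${a}`),
  ].join("\n");
}

const button: ComponentDoc = {
  name: "Button",
  importPath: "@acme/design-system", // hypothetical package name
  props: [
    { name: "variant", type: '"primary" | "secondary"', required: true, description: "Visual style." },
    { name: "size", type: '"sm" | "md"', required: false, description: "Defaults to md." },
  ],
  dos: ["Use one primary Button per view."],
  avoid: ["Passing raw hex colors via style; use tokens instead."],
};

console.log(toInstructions(button));
```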

Make sure your tool gives you precision control when you want it. In Builder.io I can drop into Design Mode at any time and get a Figma-like precision editor.

Maybe I want a bit more right margin on a card thumbnail. Maybe I want to tweak alignment. I can make that change directly, then the tool writes the change back to code, consistent with our stack and patterns.

For designing with AI, a tight Figma integration is valuable. I like to grab pieces from code, paste them into Figma, tune them visually, then bring those updates back to code.

Example flow:

- Copy the card component from Builder into Figma with the plugin.

- Make edits, like changing the image to a wider aspect, or adjusting link affordances.

- Export the updated design back out of Figma.

- In Builder, select the matching code element, paste the Figma design, and apply updates.

- The tool updates code using the design system and sends a PR if you want review.

It is a clean two-way loop. Figma to code, and code back to Figma when you need to communicate tweaks to non-coders.

Look at the result. We have a new prototype of a real feature. The foundations of the UI exist in code. It sits in our repo, using our design system and tokens.

What often happens next is interesting. Designers or PMs play with ideas in Builder, then open a pull request thinking engineering will have to redo everything. Our engineers look at it and say the work is already done. We merge.

Examples inside Builder that started this way:

- Project pinning

- Project duplication

- Lots of UX touches

We also work in parallel more often. With a scaffold in place, engineers can wire backend logic while design refines micro-interactions. The front end is mostly there. We just stitch in the final logic. Sometimes the AI can help pull real data too:

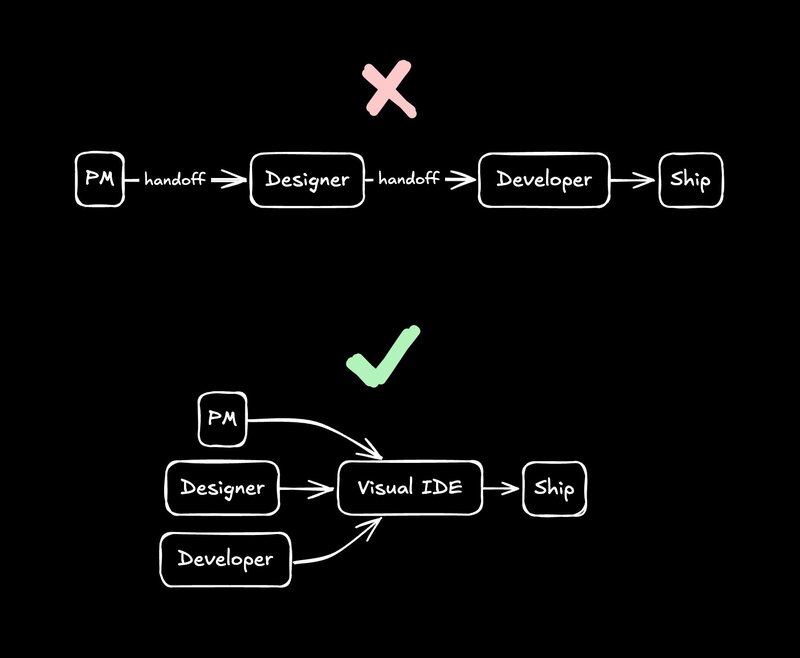

> Replace mock events with a live collection from the database. Use the Activity table. Map fields to the feed item shape. Paginate 20 per page.

Use this to break out of never-ending waterfall processes. Designers can design in code, which means what they produce does not need to be handed off and rebuilt. It is ready. It might need light refactoring, and AI can help with that too. It might need functionality, and engineering can wire that up. You do not need to recreate the same parts at each step.
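The "replace mock events" prompt boils down to two pieces of code: a mapping from database rows to the feed item shape, and pagination at 20 items per page. A minimal sketch, assuming a hypothetical `ActivityRow` shape (real column names will differ) and paginating in memory for clarity:

```typescript
// Hypothetical row shape for the "Activity" table.
interface ActivityRow {
  id: number;
  user_name: string;
  event_type: string; // e.g. "ui_generated", "pr_sent", "pr_merged"
  summary: string;
  created_at: Date;
}

// Hypothetical feed item shape the UI renders.
interface FeedItem {
  id: string;
  author: string;
  kind: string;
  title: string;
  createdAt: string;
}

const PAGE_SIZE = 20;

// Maps one database row to the shape the feed component expects.
function toFeedItem(row: ActivityRow): FeedItem {
  return {
    id: String(row.id),
    author: row.user_name,
    kind: row.event_type,
    title: row.summary,
    createdAt: row.created_at.toISOString(),
  };
}

// Offset pagination, newest first: page 1 covers items 0..19, page 2 covers 20..39.
function paginate(rows: ActivityRow[], page: number): { items: FeedItem[]; hasMore: boolean } {
  if (!Number.isInteger(page) || page < 1) throw new RangeError("page must be an integer >= 1");
  const sorted = [...rows].sort((a, b) => b.created_at.getTime() - a.created_at.getTime());
  const start = (page - 1) * PAGE_SIZE;
  const slice = sorted.slice(start, start + PAGE_SIZE);
  return { items: slice.map(toFeedItem), hasMore: start + PAGE_SIZE < sorted.length };
}
```

In production you would usually push the page size and offset into the database query itself (for example `LIMIT 20 OFFSET 40` for page 3 in SQL) rather than loading every row and slicing.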

PMs can build prototypes directly in code. Designers can come through and elevate the look and feel. Engineers can hook up the data and logic. You can assemble the final result into one or more PRs that hit production much faster.

We call this no-handoff development, and a Visual IDE like Builder.io is what enables it.

Another nice side effect: engineers become more autonomous. When they need a popover or a modal they do not have to wait for design. The AI knows the design system and can create a good first version. Faster iteration equals faster customer feedback.

Here is the condensed version you can try in your own stack.

- Pick an effect you like from ReactBits or a CodePen top list.

- Copy the code.

- In your AI-assisted editor, paste the code with a prompt like "Add this effect as the page background for our app."

- Review the result. Give one or two targeted notes.

- Open a PR. Invite comments. Let the agent address the mechanical nits.

- Merge when you are happy.

- Repeat. Commit small, review fast, ship daily.

A few common pitfalls to avoid:

- Blank sandboxes lead to slop. Work inside your repo so the AI can reference your components and tokens.

- Too much at once. Ask for one thing at a time, then refine.

- No brand guidance. Give color and spacing tokens, or at least explicit hex values and scales.

- Ignoring accessibility. Ask for reduced motion support and color contrast checks.

- Giant diffs. Ask the agent to split files and keep changes scoped. Small PRs are easier to review and revert.
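For the reduced-motion pitfall, the standard hook is the `prefers-reduced-motion` media query. A minimal sketch, assuming a background effect that accepts hypothetical `animate` and `speed` settings; the optional `matcher` parameter exists so the helper also runs outside a browser and can be tested:

```typescript
// The subset of matchMedia this helper needs.
type MatchMedia = (query: string) => { matches: boolean };

// True when the user has asked the OS to reduce motion. Falls back to
// false when matchMedia is unavailable (e.g. during server rendering).
function prefersReducedMotion(matcher?: MatchMedia): boolean {
  // Read matchMedia off globalThis without requiring the DOM type library.
  const globalMatch = (globalThis as unknown as { matchMedia?: MatchMedia }).matchMedia;
  // Bind so the browser's matchMedia is called with the right `this`.
  const mm = matcher ?? globalMatch?.bind(globalThis);
  return mm ? mm("(prefers-reduced-motion: reduce)").matches : false;
}

// Hypothetical settings consumed by the background effect.
interface EffectSettings {
  animate: boolean;
  speed: number; // animation speed multiplier; 0 means a static frame
}

// With reduced motion, render a static frame instead of a moving one.
function effectSettings(reduceMotion: boolean): EffectSettings {
  return reduceMotion ? { animate: false, speed: 0 } : { animate: true, speed: 1 };
}
```

In a browser, call `effectSettings(prefersReducedMotion())` before starting the animation loop. If users can toggle the OS setting while the page is open, also listen for the media query's `change` event.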

Static design is cool and all. It is just not enough anymore. We can design in code now. We can create living UI that feels great under a cursor or a thumb.

You do not need to be an expert. You need a workflow that starts from inspiration, uses AI to adapt to your system, keeps you in your real repo, and lets you iterate quickly.

If you’re not sure where to start or which AI design tool to use, try Builder.io and let me know your feedback.

Builder.io visually edits code, uses your design system, and sends pull requests.
