Test-Driven Development (TDD) is one of those things we all know we should do, like eating our vegetables or flossing. It promises a world of robust, bug-resistant code where every new feature ships with its own safety net.

In practice, though, it often feels like a time-consuming chore. When you're up against a deadline, who really has the time to write a suite of tests for code that doesn't even exist yet?

But AI agents change this story. This post breaks down how AI, especially a highly iterative agent like Cursor, Claude Code, or Fusion, turns TDD from a best practice you skip into a practical way to build and scale resilient applications.

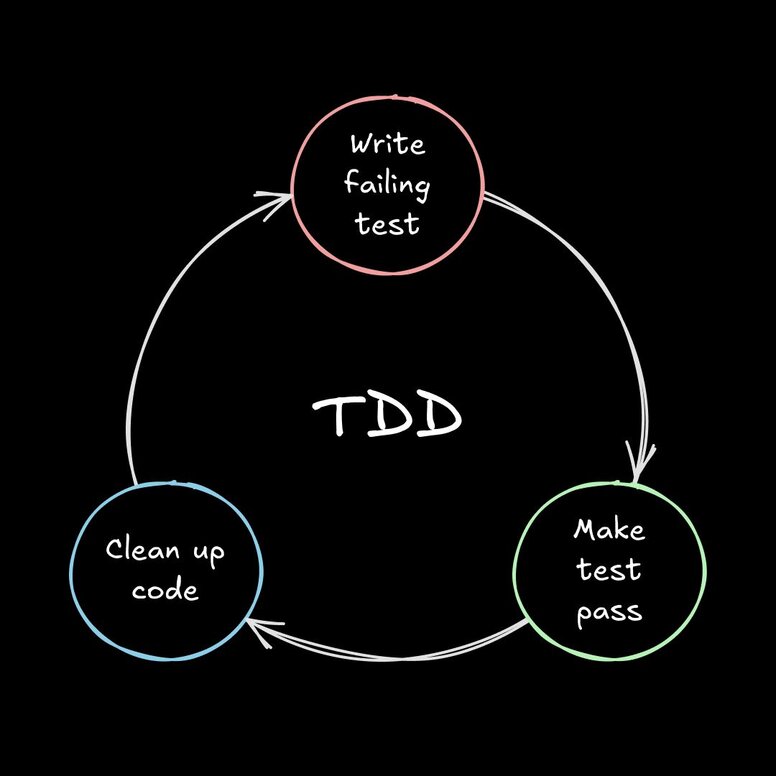

Forget the jargon you might have heard. TDD isn't some mystical practice; it's a simple, rhythmic loop: Red, Green, Refactor.

- Red: You start by writing a test for a feature that doesn't exist yet. Naturally, you run the test, and it fails—it’s red. This isn't a bug; it's your goalpost. You've just defined exactly what "done" looks like.

- Green: Next, you write the absolute bare-minimum code required to make that test pass. No gold-plating, no thinking about edge cases. Just get it to turn green.

- Refactor: With the safety of a passing test, you can now clean up your code. Make it elegant, efficient, and readable, all while re-running your tests to ensure everything stays green.
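To make the loop concrete, here is a framework-free TypeScript sketch. The run helper and the countItems function are hypothetical, invented for illustration. The test is written first against a stub that throws, so it reports red. A bare-minimum implementation then turns it green.

```typescript
// Hypothetical helper: runs a test and reports "red" (it threw) or "green".
type Test = () => void;

function run(test: Test): "red" | "green" {
  try {
    test();
    return "green";
  } catch {
    return "red";
  }
}

// Red: the feature doesn't exist yet, so the function is only a stub that throws.
// (countItems is a hypothetical example, not from any library.)
let countItems: (quantities: number[]) => number = () => {
  throw new Error("not implemented");
};

// The test is the goalpost: quantities 1, 2 and 3 add up to 6 units.
const countItemsTest: Test = () => {
  const result = countItems([1, 2, 3]);
  if (result !== 6) throw new Error(`expected 6, got ${result}`);
};

const before = run(countItemsTest);

// Green: the simplest implementation that makes the test pass.
countItems = (quantities) => quantities.reduce((sum, q) => sum + q, 0);
const after = run(countItemsTest);

console.log(before, after); // red green
```

The refactor step would be any later rewrite of countItems, such as a plain for loop, that keeps run(countItemsTest) returning green.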

The big win here? You build with confidence, knowing your code works as specified. As a bonus, your test suite becomes living, breathing documentation for what your application is supposed to do. Unlike a wiki page, it can't silently go stale: when the code drifts from what a test describes, that test fails.

If TDD is so great, why isn't every single developer doing it on every single project? Because for humans working on a deadline, the friction is real.

- The biggest and most common complaint is the upfront time cost. Writing a clean, comprehensive suite of tests before you even have a feature feels like you're moving in slow motion, especially when a product manager is breathing down your neck.

- You spend hours crafting the perfect tests. Then, someone refactors a component, and suddenly half your tests shatter. Test maintenance can quickly become a soul-crushing part of your tech debt, a garden that needs constant, tedious weeding.

- Hitting 100% test coverage doesn't mean your app is bulletproof. Your unit tests can be a sea of green, but they can easily miss the bigger picture—like when two "perfectly tested" components simply refuse to talk to each other in production. It’s a safety net full of holes.

Everything that makes TDD a slog for humans makes it the perfect workflow for an AI agent. AI thrives on clear, measurable goals, and a binary pass/fail test is one of the clearest goals you can give it.

- That time sink? It mostly disappears. AI can spit out boilerplate, edge cases, and entire test files in seconds, turning TDD's biggest weakness into a massive accelerator. Most of the manual labor of writing tests evaporates.

- Prompting an AI with "make a login button" is a gamble. Prompting it with a suite of tests that defines exactly how that button must behave—covering states like disabled, loading, and error—gives the AI a concrete target. It can iterate, self-correct, and build with precision until the job is done right.

- When you update your UI or refactor a component, an advanced agent like Fusion can understand the change and update the affected tests for you. That brittle-test nightmare that created so much tech debt? It largely goes away, because your test suite now heals itself alongside your code.
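Here is what "a suite of tests that defines exactly how that button must behave" can look like in miniature. This framework-free TypeScript sketch is hypothetical throughout: the LoginState values, the labels, and loginButtonView are made up for illustration. The table of expected views is the concrete target, and failingCases tells the agent which states still miss it.

```typescript
// Hypothetical states for the login button.
type LoginState = "idle" | "disabled" | "loading" | "error";

interface ButtonView {
  label: string;
  disabled: boolean;
  busy: boolean; // would map to aria-busy on the rendered button
}

// The spec: one expected view per state. This is what the agent codes against.
const spec: Array<[LoginState, ButtonView]> = [
  ["idle", { label: "Log in", disabled: false, busy: false }],
  ["disabled", { label: "Log in", disabled: true, busy: false }],
  ["loading", { label: "Logging in...", disabled: true, busy: true }],
  ["error", { label: "Try again", disabled: false, busy: false }],
];

// An implementation the agent iterates on until every case passes.
function loginButtonView(state: LoginState): ButtonView {
  switch (state) {
    case "loading":
      return { label: "Logging in...", disabled: true, busy: true };
    case "error":
      return { label: "Try again", disabled: false, busy: false };
    case "disabled":
      return { label: "Log in", disabled: true, busy: false };
    case "idle":
      return { label: "Log in", disabled: false, busy: false };
  }
}

// Compares field by field and returns the states that still fail (empty = green).
function failingCases(): LoginState[] {
  return spec
    .filter(([state, expected]) => {
      const actual = loginButtonView(state);
      return (
        actual.label !== expected.label ||
        actual.disabled !== expected.disabled ||
        actual.busy !== expected.busy
      );
    })
    .map(([state]) => state);
}

console.log(failingCases()); // []
```

Because the expected behavior lives in data, the agent gets unambiguous feedback: any state name that comes back from failingCases is the next thing to fix.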

Talk is cheap. Let's build a real feature. We'll use our AI agent + visual canvas, Fusion, to build out a shopping cart for an e-commerce site, applying our AI-driven TDD workflow at every stage.

For each type of test, we'll show you the exact prompt you can use and what happens next. Along the way, we'll drop in some pro-tips for getting your AI agent to follow your testing setup, run the right commands, and truly act like a member of your team.

Every good feature is built on a solid foundation of pure business logic. That’s where we’ll start: unit tests. These are small, focused tests that verify a single piece of functionality in isolation, like a single function.

For our shopping cart, the most crucial piece of logic is calculating the total price. Here's the prompt we'll give Fusion:

"I'm building a shopping cart. Create a utility function called calculateTotal. It should take an array of item objects (each with a price and quantity) and return the total cost. Also, generate the unit tests to cover an empty cart, a single item, and multiple items."

With this, Fusion generates two files: the utility function and a corresponding spec.ts file populated with tests for each scenario we asked for. The TDD loop starts with the AI writing both the code and the test that proves it works.
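For reference, here is roughly what those two files boil down to, folded into one framework-free TypeScript sketch so it runs on its own. Two assumptions to flag: the CartItem shape is hypothetical, and prices are stored as integer cents to sidestep floating-point rounding (in JavaScript, 0.1 + 0.2 !== 0.3). In a real Vitest or Jest spec, each case would be an it(...) block using expect(...).toBe(...).

```typescript
// Hypothetical item shape; price is in integer cents to avoid float rounding.
interface CartItem {
  price: number;
  quantity: number;
}

// Sums price * quantity across the cart; an empty cart totals 0.
function calculateTotal(items: CartItem[]): number {
  return items.reduce((total, item) => total + item.price * item.quantity, 0);
}

// The three scenarios from the prompt, as [description, input, expected] cases.
const cases: Array<[string, CartItem[], number]> = [
  ["empty cart", [], 0],
  ["single item", [{ price: 1999, quantity: 2 }], 3998],
  [
    "multiple items",
    [
      { price: 1999, quantity: 2 },
      { price: 550, quantity: 1 },
      { price: 250, quantity: 4 },
    ],
    5548,
  ],
];

// Fails loudly on the first mismatch, like a test runner would.
for (const [name, items, expected] of cases) {
  const actual = calculateTotal(items);
  if (actual !== expected) {
    throw new Error(`${name}: expected ${expected}, got ${actual}`);
  }
  console.log(`passed: ${name}`);
}
```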

To make this easier, you can tell Fusion about your testing setup (like Vitest or Jest) in your project's custom instructions. This helps the agent not only generate the correct test syntax but also run the tests itself to verify its own changes before opening a pull request.

Okay, so our core logic is solid and tested. But features aren't just isolated functions; they're made of components that need to work together. Integration tests are the next layer of our safety net, ensuring that different parts of our app can communicate without dropping the ball.

Now, let's test how our UI components talk to each other. We want to make sure that when a user clicks a button on a product card, the main cart summary updates correctly.

Here’s the prompt we’ll give Fusion:

"I have a React ProductCard component with an 'Add to Cart' button and a CartSummary component in the header. Create an integration test using TypeScript and React Testing Library. The test should render both components, simulate a user clicking the button on the card, and verify that the CartSummary updates to show the correct item count and new total."

We’ll also use the Context7 MCP integration to pull in the most up-to-date documentation to set up React Testing Library from scratch.

Fusion gets to work: it looks up the React Testing Library docs, installs the necessary packages, and creates a CartIntegration.test.tsx file. The test imports the necessary components, sets up the test environment with React Testing Library's render function, simulates a click with userEvent.click, and uses expect to assert that the text in the <CartSummary> component has changed as requested. It's testing the wiring between our components to catch bugs before they ever reach a user.
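The generated test depends on React, React Testing Library, and a test runner, so it can't be reproduced standalone here. This framework-free TypeScript sketch shows the same idea: two units that share cart state are exercised together, and the assertion checks the wiring between them rather than either piece alone. createCartStore, productCard, and cartSummary are hypothetical stand-ins for a React context and the two components.

```typescript
// Hypothetical cart line; price is in integer cents.
interface CartLine {
  id: string;
  price: number;
  quantity: number;
}

type Listener = (lines: CartLine[]) => void;

// Hypothetical shared store, standing in for React context or a state library.
function createCartStore() {
  let lines: CartLine[] = [];
  const listeners: Listener[] = [];
  return {
    add(product: { id: string; price: number }) {
      const inCart = lines.some((l) => l.id === product.id);
      lines = inCart
        ? lines.map((l) => (l.id === product.id ? { ...l, quantity: l.quantity + 1 } : l))
        : [...lines, { ...product, quantity: 1 }];
      listeners.forEach((notify) => notify(lines));
    },
    // Registers a listener and immediately sends it the current state.
    subscribe(listener: Listener) {
      listeners.push(listener);
      listener(lines);
    },
  };
}

type CartStore = ReturnType<typeof createCartStore>;

// Stand-in for <ProductCard>: its "Add to Cart" button adds the product.
function productCard(store: CartStore, product: { id: string; price: number }) {
  return { clickAddToCart: () => store.add(product) };
}

// Stand-in for <CartSummary>: re-renders its text whenever the cart changes.
function cartSummary(store: CartStore) {
  let text = "";
  store.subscribe((lines) => {
    const count = lines.reduce((n, l) => n + l.quantity, 0);
    const cents = lines.reduce((sum, l) => sum + l.price * l.quantity, 0);
    text = `${count} ${count === 1 ? "item" : "items"}, $${(cents / 100).toFixed(2)}`;
  });
  return { text: () => text };
}

// The integration test: "render" both, click the card's button twice, check the summary.
const store = createCartStore();
const card = productCard(store, { id: "sku-1", price: 1999 });
const summary = cartSummary(store);

card.clickAddToCart();
card.clickAddToCart();

if (summary.text() !== "2 items, $39.98") {
  throw new Error(`unexpected summary: ${summary.text()}`);
}
console.log(summary.text()); // 2 items, $39.98
```

Neither unit test of productCard nor of cartSummary alone would catch a store that forgets to notify its listeners; only the combined check does.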

Unit and integration tests are fantastic for ensuring your logic works and your components play nicely together. But they have a blind spot: they can't tell you if a CSS change just knocked your entire layout sideways or made your "Buy Now" button invisible. This is where visual tests come in, and it’s where an AI agent that can see becomes a superpower.

Visual tests catch unintended UI bugs by comparing screenshots of your application before and after a code change. For a human, this is tedious. For Fusion, it's second nature.
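Under the hood, a visual comparison boils down to diffing two images pixel by pixel and failing if too much changed. Dedicated tools handle this for you: Playwright's expect(page).toHaveScreenshot() accepts options such as maxDiffPixelRatio, and libraries like pixelmatch add anti-aliasing detection. The TypeScript sketch below is a simplified, hypothetical version that assumes both screenshots are already decoded into raw RGBA bytes.

```typescript
// A decoded screenshot: 4 bytes (R, G, B, A) per pixel, row by row.
interface Screenshot {
  width: number;
  height: number;
  data: Uint8Array;
}

// Share of pixels where any channel differs by more than `tolerance`.
function diffRatio(baseline: Screenshot, current: Screenshot, tolerance = 8): number {
  if (baseline.width !== current.width || baseline.height !== current.height) {
    return 1; // a size change counts as a complete mismatch
  }
  const pixels = baseline.width * baseline.height;
  if (pixels === 0) return 0;
  let changed = 0;
  for (let p = 0; p < pixels; p++) {
    for (let c = 0; c < 4; c++) {
      const i = p * 4 + c;
      if (Math.abs(baseline.data[i] - current.data[i]) > tolerance) {
        changed++;
        break; // count each pixel at most once
      }
    }
  }
  return changed / pixels;
}

// Usage: a 10x10 white image, and a copy with its first pixel turned black.
const white: Screenshot = { width: 10, height: 10, data: new Uint8Array(400).fill(255) };
const edited: Screenshot = { ...white, data: white.data.slice() };
edited.data.set([0, 0, 0, 255], 0);

const ratio = diffRatio(white, edited);
const maxDiffPixelRatio = 0.001; // hypothetical threshold for this check
console.log(ratio, ratio <= maxDiffPixelRatio ? "pass" : "fail"); // 0.01 fail
```

The small per-channel tolerance keeps tiny rendering differences from failing the test, while a ratio threshold decides how much real change is acceptable.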

In our app, we have an annoying visual bug where the favorites list notification is too close to the cart items in the header, but only when there are zero items in the cart. For this, we can ask Fusion to modify our UI and then visually verify its own work.

“When there’s zero items in the cart, the notification counter next to the heart icon in the header is too close to the cart items. When there’s at least one item in the cart, the spacing is perfect. Can you take a screenshot of each state, and then fix the spacing to always match? Confirm at the end by taking screenshots again.”

Fusion doesn't just blindly add code. It parses the screenshot to understand the current layout, makes the requested change to the JSX and CSS, and then runs a visual comparison. It can even parse the active state of the DOM and compare it to the code to understand what's going wrong. It's a great safeguard against the "oops, I broke the CSS" class of bugs.

You could build an entire visual regression suite this way. Prompt Fusion to take baseline screenshots of all your key user flows (checkout, login, user profile) and store them in your repository. Then instruct it to run visual tests against this baseline gallery for any PR that touches UI code, automating much of your design QA.
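The gating logic for such a suite is simple enough to sketch. In this hypothetical TypeScript version, a PR "touches UI code" if it changes a component, style, or SVG file (the extension list is an assumption to adapt to your project). Each key flow's new screenshot is then compared with its stored baseline. Screenshots are reduced to placeholder digests to keep the example short; a real suite would compare pixels with a tolerance, since exact matches can break on minor font-rendering differences.

```typescript
// Assumption: which changed files count as "UI code" for this project.
const UI_FILE = /\.(tsx|jsx|css|scss|svg)$/;

function touchesUi(changedFiles: string[]): boolean {
  return changedFiles.some((file) => UI_FILE.test(file));
}

type Verdict = "match" | "changed" | "missing-baseline";

// Compares each flow's current screenshot digest with its stored baseline.
function reviewGallery(
  baselines: Map<string, string>,
  current: Map<string, string>,
): Map<string, Verdict> {
  const verdicts = new Map<string, Verdict>();
  current.forEach((digest, flow) => {
    const baseline = baselines.get(flow);
    if (baseline === undefined) verdicts.set(flow, "missing-baseline");
    else verdicts.set(flow, baseline === digest ? "match" : "changed");
  });
  return verdicts;
}

// Usage with placeholder digests for the three key flows.
const changedFiles = ["src/components/CartSummary.tsx", "README.md"];
if (touchesUi(changedFiles)) {
  const baselines = new Map([["checkout", "a1"], ["login", "b2"]]);
  const current = new Map([["checkout", "a1"], ["login", "b9"], ["user-profile", "c3"]]);
  reviewGallery(baselines, current).forEach((verdict, flow) => {
    console.log(`${flow}: ${verdict}`);
  });
  // checkout: match
  // login: changed
  // user-profile: missing-baseline
}
```

A "changed" verdict goes to a human (or the agent) to decide between a regression and an intended redesign. A "missing-baseline" verdict means a new flow needs its first screenshot stored.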

If unit tests check the individual bricks and integration tests check the walls, end-to-end (E2E) tests are the final inspection that ensures the whole house will stand up. They simulate a complete user journey from start to finish, interacting with your app just like a real person would.

This makes them incredibly powerful, but also notoriously brittle and a pain to write. With AI, you can just describe the journey.

Let's test our entire shopping cart flow, from adding a product to arriving at the checkout page.

"Write a full E2E test with Playwright. A user should be able to:

- Land on a product page.

- Click the 'Add to Cart' button.

- Open the cart dropdown.

- Verify the correct item and final price are displayed."

Fusion takes this multi-step narrative and translates it into a precise Playwright script. It will generate a new test file that automates the browser to perform each action: page.goto(...), page.locator('button').click(), and finally, expect(page.locator('.final-price')).toHaveText(...). The entire user flow is codified and automated from a simple, human-readable prompt.
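A real Playwright test needs a browser and a running app, so it can't be shown standalone here. What the translation step produces, though, is an ordered script of actions and assertions. This framework-free TypeScript sketch codifies the four prompt steps that way and runs them against FakeStorefront, a hypothetical in-memory stand-in for the app that is not Playwright's API. In an actual Playwright spec, the steps would map onto calls such as page.goto(), page.getByTestId(...).click(), and expect(...).toHaveText(). The test ids, product, and price here are made up.

```typescript
type Product = { name: string; price: number }; // price in integer cents

// Hypothetical in-memory stand-in for the storefront (NOT Playwright's API).
class FakeStorefront {
  private path = "";
  private cart: Product[] = [];
  private dropdownOpen = false;
  private catalog: Record<string, Product> = {
    "/products/canvas-tote": { name: "Canvas Tote", price: 1999 },
  };

  goto(path: string): void {
    this.path = path;
  }

  click(testId: string): void {
    if (testId === "add-to-cart") {
      const product = this.catalog[this.path];
      if (!product) throw new Error(`no product at ${this.path}`);
      this.cart.push(product);
    } else if (testId === "cart-toggle") {
      this.dropdownOpen = !this.dropdownOpen;
    } else {
      throw new Error(`no element with test id ${testId}`);
    }
  }

  textOf(testId: string): string {
    // Cart contents are only visible once the dropdown is open.
    if (!this.dropdownOpen) throw new Error("cart dropdown is closed");
    if (testId === "cart-item") return this.cart.map((p) => p.name).join(", ");
    if (testId === "final-price") {
      const cents = this.cart.reduce((sum, p) => sum + p.price, 0);
      return `$${(cents / 100).toFixed(2)}`;
    }
    throw new Error(`no element with test id ${testId}`);
  }
}

type Step =
  | { action: "goto"; path: string }
  | { action: "click"; testId: string }
  | { action: "expectText"; testId: string; text: string };

// The journey from the prompt, codified as an ordered script.
const journey: Step[] = [
  { action: "goto", path: "/products/canvas-tote" }, // land on a product page
  { action: "click", testId: "add-to-cart" }, // click 'Add to Cart'
  { action: "click", testId: "cart-toggle" }, // open the cart dropdown
  { action: "expectText", testId: "cart-item", text: "Canvas Tote" }, // verify the item
  { action: "expectText", testId: "final-price", text: "$19.99" }, // verify the price
];

// Runs steps in order; throws on the first failed expectation.
function runJourney(app: FakeStorefront, steps: Step[]): number {
  steps.forEach((step, index) => {
    if (step.action === "goto") app.goto(step.path);
    else if (step.action === "click") app.click(step.testId);
    else {
      const actual = app.textOf(step.testId);
      if (actual !== step.text) {
        throw new Error(`step ${index + 1}: expected "${step.text}", got "${actual}"`);
      }
    }
  });
  return steps.length;
}

console.log(`${runJourney(new FakeStorefront(), journey)} steps passed`); // 5 steps passed
```

Targeting elements by dedicated test ids rather than generic selectors is one common way to keep such scripts from breaking when markup or styling changes.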

Now we're ready to merge our PR into the larger codebase, knowing that all our tests pass.

The shift with AI isn't just about speed; it's a fundamental change in your role as a developer. Your job becomes less about the tedious labor of writing tests and more about the high-level, strategic work of defining what "done" looks like.

By providing clear, testable goals, you empower your AI copilot to handle the implementation, turning you into the architect and the AI into your endlessly scalable engineering team.

Builder.io visually edits code, uses your design system, and sends pull requests.
