I've listened to 80+ sales and product conversations with product, design, and engineering leaders at enterprises evaluating AI coding solutions. I've interviewed dozens of developers who use multiple AI coding solutions, interviewed engineering optimization leaders evaluating them, and watched hundreds of users build experiences with our own AI coding solution. I've tried Bolt, Lovable, v0, Replit, Cursor, GitHub Copilot, Claude Code, and Fusion, and I've personally shipped AI-coded iterations to our own product.

I've concluded one thing:

The majority of teams today are vibe-evaluating AI coding solutions and there's a far better way to do it.

This guide is intended to give product, engineering, and design leaders in enterprise organizations a clear understanding of the three types of AI coding solutions, how AI coding fits into your enterprise, the criteria to look for when shortlisting an AI coding solution, and how to run a proof of concept (POC) of an AI coding solution.

The three types of AI coding solutions

In my opinion, "AI coding" is the process of prompting AI to create code, visualizing the changes, and then iterating on those visualizations. The process continues until the outcome is to your liking.

There are three categories of AI coding solutions and almost every enterprise will use some combination of them:

| | AI-powered IDEs | AI Visual IDEs | AI App Builders |
|---|---|---|---|
| Primary users | Developers | Product managers, designers | Founders, solopreneurs, hobbyists |
| Interface | Code-first | Visual-first | Visual-first |
| Workflow | Developer workflows only | Cross-functional workflows | Solo creator workflows |
| Tech stack compatibility | Any stack/framework | Any stack/framework | Restrictions on stack/framework options |
| Common use cases | | | |
| Example offerings | GitHub Copilot, Cursor, Claude Code | Fusion, by Builder.io | Bolt, Lovable, Replit, v0 |

Common AI coding use cases in enterprises

The overarching theme we hear when product, engineering, and design leaders come to us is that they're looking for ways to increase productivity and velocity "across the entire software development lifecycle". As part of that mandate, they're considering every step, from the initial idea through design to the delivery of a feature.

In many cases, they've identified a few common workflows that take longer and consume more resources than they should in a world where you can literally speak features into existence.

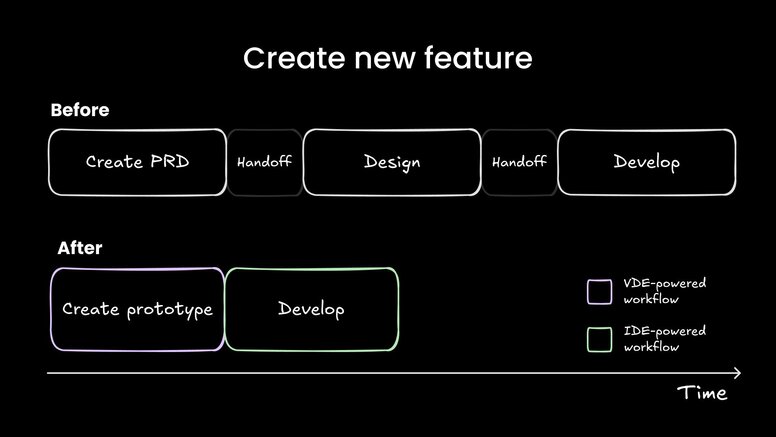

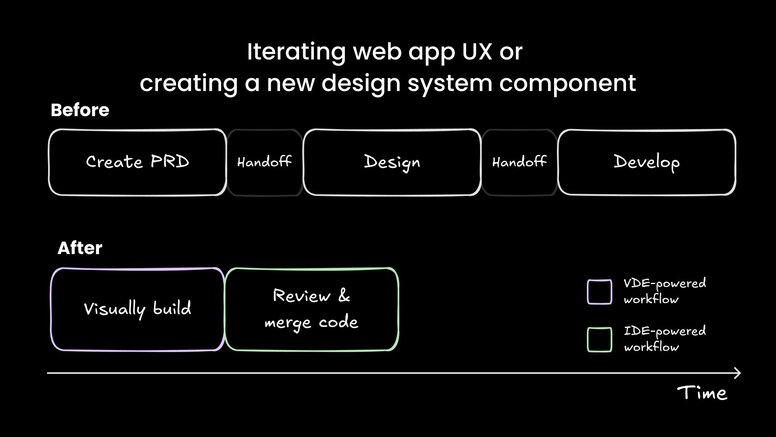

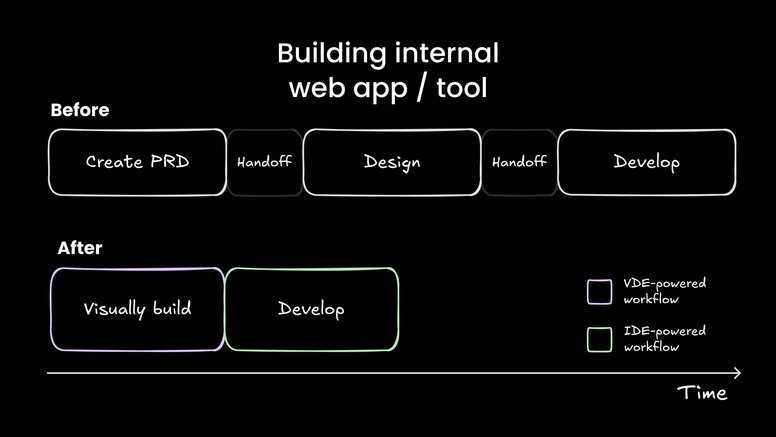

We've outlined three of the most common workflows in the graphic below:

What to look for in an enterprise AI coding solution

Quickly poke around social media and you'll see thousands of indie hackers and hobbyists using AI coding solutions to spin up prototypes and web apps. While these apps are often impressively powerful, they often don't meet enterprise requirements or accomplish the kinds of tasks enterprises need.

Here's one example: I recently concluded interviews with eight users in different organizations who use or have tried multiple types of AI coding tools. In almost every case, they used these tools for personal or hobby projects, but almost none of them used them for tasks in their day jobs. They cited one major limiting factor: the AI App Builders they tried didn't integrate with their organization's design system, code, and/or existing workflows.

To help you evaluate AI coding solutions for your enterprise, I borrowed a framework I used when conducting benchmarks at Forrester Research. It will help you identify the right criteria so that the AI coding solution you POC doesn't just work at the hobbyist level but can scale across your entire organization and genuinely accelerate the whole software development lifecycle.

Evaluate AI coding solutions across four categories:

| Category | Description & why it matters |
|---|---|
| Context providers | Context providers are the integrations you can “plug in” to the AI to give it context. We’ve found that the most successful teams leveraging AI have shifted their efforts from “prompt engineering” to “context engineering”: finding ways to bring context from across their enterprise into the AI so it better understands what you want and generates higher-quality results. |
| Ease of use | “Quality of generation” is hard to benchmark because it often depends on the context provided. As a proxy, we recommend evaluating “ease of use.” One design leader described poor ease of use as getting stuck in “prompt hell going in circles to fix one small thing.” Many AI coding solutions for hobbyists do this well, and we believe enterprise AI coding solutions should too. |
| Developer experience | Even if you’re evaluating an AI Visual IDE that will primarily be used by non-developers, we still recommend evaluating the developer experience. If the objective is to accelerate the entire SDLC, developers should be able to easily integrate context and review, test, manage, and work with AI-coded experiences. |
| Security and scale | It’s not just about SOC compliance, SSO, and AI training exclusion. To scale an AI coding solution across a global organization, we recommend evaluating collaborative features, the ability to set up and iterate on org-wide templates, and RBAC. |

You can use the following scorecard when shortlisting an AI coding solution for your enterprise:

Download the template
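To illustrate how a scorecard like this might roll up into a single comparable number per vendor, here is a minimal Python sketch of a weighted score across the four categories above. The category keys, the weights, the 1-to-5 rating scale, and the vendor names and ratings are all hypothetical placeholders, not the contents of the downloadable template.

```python
from dataclasses import dataclass

# The four evaluation categories from the framework above.
CATEGORIES = ("context_providers", "ease_of_use", "developer_experience", "security_and_scale")

# Hypothetical weights: tune them to your organization's priorities (they should sum to 1).
WEIGHTS = {
    "context_providers": 0.30,
    "ease_of_use": 0.25,
    "developer_experience": 0.25,
    "security_and_scale": 0.20,
}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9, "weights should sum to 1"


@dataclass
class Evaluation:
    vendor: str
    scores: dict[str, int]  # assumed scale: 1 (poor) to 5 (excellent) per category


def weighted_score(ev: Evaluation) -> float:
    """Roll per-category ratings up into one weighted score on the same 1-5 scale."""
    missing = set(CATEGORIES) - set(ev.scores)
    if missing:
        raise ValueError(f"{ev.vendor} is missing scores for: {sorted(missing)}")
    for category in CATEGORIES:
        if not 1 <= ev.scores[category] <= 5:
            raise ValueError(f"{ev.vendor}: {category} score must be between 1 and 5")
    return sum(WEIGHTS[c] * ev.scores[c] for c in CATEGORIES)


def rank(evaluations: list[Evaluation]) -> list[tuple[str, float]]:
    """Return (vendor, score) pairs, highest weighted score first."""
    scored = [(ev.vendor, round(weighted_score(ev), 2)) for ev in evaluations]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical shortlist with made-up ratings.
shortlist = [
    Evaluation("Vendor A", {"context_providers": 4, "ease_of_use": 3,
                            "developer_experience": 5, "security_and_scale": 4}),
    Evaluation("Vendor B", {"context_providers": 5, "ease_of_use": 4,
                            "developer_experience": 3, "security_and_scale": 3}),
]

for vendor, score in rank(shortlist):
    print(f"{vendor}: {score}")
```

The weights are a judgment call rather than a formula: a team expecting heavy non-developer use might weight ease of use more heavily, while a regulated organization might weight security and scale more.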

How to proof-of-concept (POC) an AI coding solution in your enterprise

We recommend a seven-step approach to POCing an AI coding solution for your enterprise:

| Step | Task | Details / recommendation |
|---|---|---|
| 1 | Define the roles and category of AI coding solution you want to start with | We recommend POCing: |
| 2 | Define the workflows you want to optimize | We recommend POCing: |
| 3 | Set up your environment context for success | This should include: |
| 4 | Educate your team on best practices for prompting | See the best practices in our co-written blog post, “11 prompting tips for building UIs that don’t suck.” |
| 5 | Use real repos (if possible) | We realize that at the POC stage some organizations cannot connect their real repos to an AI coding solution, but there’s something immensely powerful about a non-developer being able to iterate on a real codebase and see something they created show up in their customer’s/end user’s workflow. |
| 6 | Set up a Slack/Teams/etc. channel and share video recordings of experiences | Whether the experience is good or bad, sharing video recordings of your team’s experience in context can help others chime in with support, celebrate accomplishments, or gather feedback to bring to your vendor. We found this best practice when researching how engineering optimization leaders evaluate AI coding tools, as described in our blog post “3 Very Specific Tips from Engineering Leaders for Evaluating AI Coding Tools.” |
| 7 | Quantify impact across time to market, resolved features/tickets, and efficiency across the whole team (even if you’re only POCing workflows for one role) | Some metrics we’ve seen include PRs shipped by non-developers, ticket resolution time, lines of code generated, and developer/designer/PM efficiency. Note: even if you’re exclusively POCing workflows for product managers or UX designers, the majority of the productivity benefit could actually land in the development phase, so be sure to measure accordingly. |
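Several of the step 7 metrics come down to simple before-and-after arithmetic. As a minimal sketch, assuming you can export (opened, resolved) timestamps for tickets and the author of each merged PR from your own tools, the Python below compares median ticket resolution time between a baseline period and the POC period and computes the share of merged PRs shipped by non-developers. The helper names, logins, and all data are hypothetical.

```python
from datetime import datetime
from statistics import median

# Hypothetical ticket record: (opened_at, resolved_at) exported from your tracker.
Ticket = tuple[datetime, datetime]


def resolution_hours(tickets: list[Ticket]) -> list[float]:
    """Hours between opening and resolving each ticket."""
    return [(resolved - opened).total_seconds() / 3600 for opened, resolved in tickets]


def percent_change(before: float, after: float) -> float:
    """Relative change from the baseline; negative means the metric went down."""
    return (after - before) / before * 100


def non_developer_pr_share(pr_authors: list[str], developers: set[str]) -> float:
    """Fraction of merged PRs authored by someone outside the developer group."""
    if not pr_authors:
        return 0.0
    return sum(author not in developers for author in pr_authors) / len(pr_authors)


# Baseline period (before the POC) vs. the POC period; all values are made up.
baseline = [
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 3, 9)),   # 48 h
    (datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 21)),  # 36 h
    (datetime(2024, 1, 4, 9), datetime(2024, 1, 6, 21)),  # 60 h
]
poc = [
    (datetime(2024, 2, 1, 9), datetime(2024, 2, 2, 9)),   # 24 h
    (datetime(2024, 2, 2, 9), datetime(2024, 2, 3, 21)),  # 36 h
    (datetime(2024, 2, 3, 9), datetime(2024, 2, 3, 21)),  # 12 h
]

before = median(resolution_hours(baseline))
after = median(resolution_hours(poc))
print(f"Median resolution time: {before:.0f} h -> {after:.0f} h "
      f"({percent_change(before, after):+.1f}%)")

merged_pr_authors = ["dev_lee", "pm_alex", "dev_kim", "designer_sam"]  # hypothetical logins
developers = {"dev_lee", "dev_kim"}
print(f"PRs from non-developers: {non_developer_pr_share(merged_pr_authors, developers):.0%}")
```

The sketch uses the median rather than the mean so that one unusually long ticket doesn't dominate the comparison; with small POC samples, treat any difference as directional rather than conclusive.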

Looking for an enterprise-ready AI coding solution?

At Builder, we provide an AI Visual IDE called Fusion. It's built for enterprise workflows from the ground up. We've helped some of the largest organizations in the world figure out how to leverage AI coding in their workflows, and we may be able to help you too. Reach out to get a personalized demo.

Design and code in one platform

Builder.io visually edits code, uses your design system, and sends pull requests.
