Limitations of Vibe Coding Tools in 2026

AI coding tools promise speed, and they deliver it for demos. The problem shows up later, when teams try to ship that code to production and discover it doesn't follow their patterns, can't merge cleanly, or breaks under real conditions.

This article explains why most vibe-coded prototypes die before deployment, what specific limitations cause that failure, and how to build prototypes that actually survive the jump to production.

What is vibe coding?

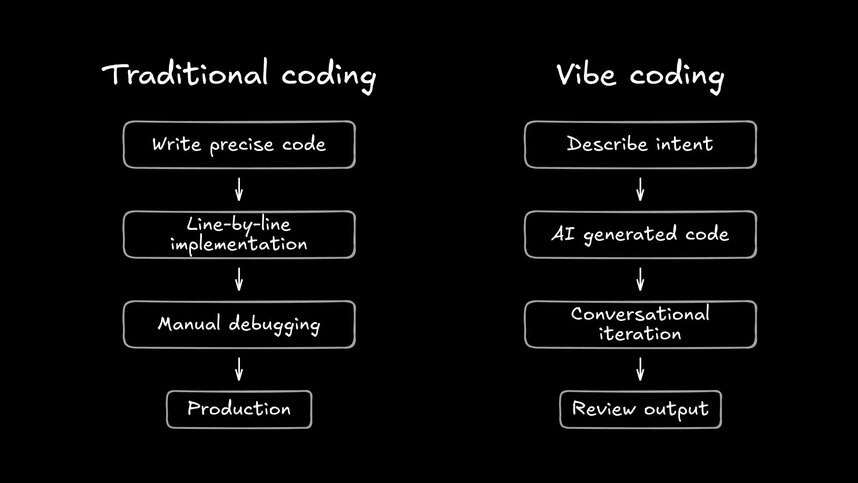

Vibe coding is building software by describing what you want in plain language and letting AI write the code. You tell a tool like Claude, Cursor, or v0 something like "create a checkout form with validation," and it generates working code for you. The name captures the approach: you share the vibe, and AI interprets your intent.

This flips traditional coding on its head. Instead of writing precise instructions line by line, you iterate through conversation. Prompt, review, adjust, repeat. The appeal is obvious: faster output, less boilerplate, and a lower barrier for people who aren't professional developers.

But speed at the start doesn't guarantee success at the finish. The limitations of vibe coding become clear when projects move beyond prototypes into production, where code quality, maintainability, and security actually matter.

Core limitations of vibe coding for production software

Vibe coding tools can spin up a working demo in minutes. The problems start when you try to ship that code to real users, maintain it over months, or scale it across a team. Production software demands consistency, reliability, and observability that vibe-coded projects rarely have.

What follows are the patterns we see repeatedly when teams try to move AI-generated code from experiment to production. Enterprise teams evaluating vibe coding tools encounter these issues consistently.

Inconsistent code quality and style

AI generates different patterns for similar problems, even within the same conversation. Ask for a data-fetching function on Monday and you get async/await; ask for something similar on Wednesday and you might get promise chains instead.

Neither is wrong, but mixing them creates confusion.
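To make the mismatch concrete, here is a minimal TypeScript sketch of the same lookup written both ways. The `User` type and the `loadUserRecord` function are hypothetical stand-ins for a real data source, not the output of any particular tool.

```typescript
// Hypothetical record type and data source, used only for illustration.
type User = { id: number; name: string };

function loadUserRecord(id: number): Promise<User> {
  // Stands in for a real network call.
  return Promise.resolve({ id, name: `user-${id}` });
}

// Style A: async/await with try/catch.
async function getUserNameAwait(id: number): Promise<string> {
  try {
    const user = await loadUserRecord(id);
    return user.name;
  } catch (err) {
    throw new Error(`Could not load user ${id}: ${String(err)}`);
  }
}

// Style B: the same behavior written as a promise chain.
function getUserNameChained(id: number): Promise<string> {
  return loadUserRecord(id)
    .then((user) => user.name)
    .catch((err: unknown) => {
      throw new Error(`Could not load user ${id}: ${String(err)}`);
    });
}
```

Both functions behave the same way for callers. The cost shows up in review, where readers have to switch between two control-flow styles in one codebase.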

This inconsistency compounds fast. Code reviews become frustrating when every pull request looks different from the last. Engineers spend time debating style choices that should have been settled by convention.

Common inconsistencies include:

- Naming patterns: camelCase in one file, snake_case in another, even within the same project

- Error handling: Try/catch blocks in some functions, error boundaries in others, nothing at all in many

- State management: Context API here, prop drilling there, local state scattered throughout

- File organization: No predictable structure for where components, utilities, or types belong

Linters catch some of these issues. They can't enforce architectural decisions. The result is code that works but resists systematic improvement.

Maintainability and technical debt accumulation

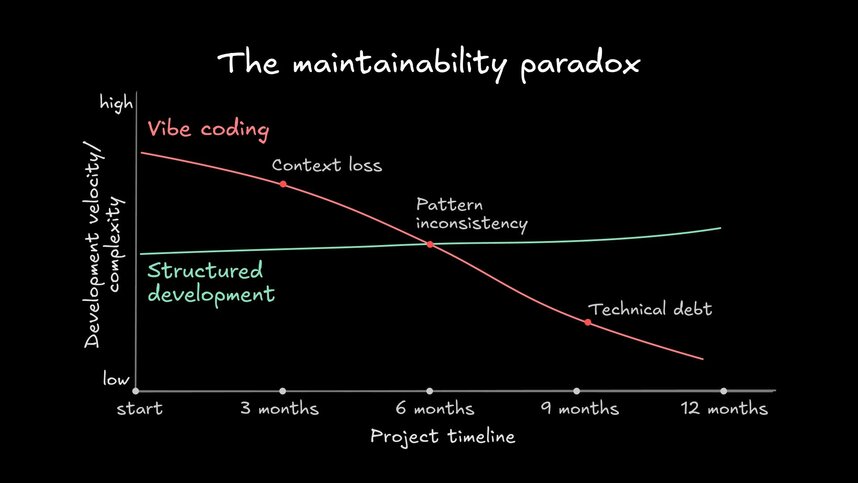

Vibe-coded projects get harder to change over time because AI doesn't remember your previous decisions. Each prompt starts fresh, and the context window limits how much information the tool can hold at once.

Complex projects exceed those limits quickly. This creates context loss, which contributes to an 8-fold increase in code duplication.

You might establish a pattern for handling API errors in one session. The AI won't automatically apply that pattern next time. Quick fixes compound into codebases where no single person understands how all the pieces connect.
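One way to keep an error-handling decision from getting lost between sessions is to put it in a shared helper that lives in the repository and not in a prompt. The following is a minimal sketch under that assumption. The `ApiResult` shape and the `toApiFailure` and `callApi` helpers are hypothetical names, not part of any library.

```typescript
// Hypothetical project-wide convention: every API call resolves to an ApiResult.
type ApiFailure = { ok: false; code: string; message: string };
type ApiResult<T> = { ok: true; data: T } | ApiFailure;

// Normalize anything thrown into the single failure shape the team agreed on.
function toApiFailure(err: unknown): ApiFailure {
  if (err instanceof Error) {
    return { ok: false, code: err.name, message: err.message };
  }
  return { ok: false, code: "UnknownError", message: String(err) };
}

// Wrap any promise-returning call so call sites do not invent their own try/catch.
async function callApi<T>(request: () => Promise<T>): Promise<ApiResult<T>> {
  try {
    return { ok: true, data: await request() };
  } catch (err) {
    return toApiFailure(err);
  }
}
```

With a helper like this checked in, a reviewer can reject any generated code that handles API errors some other way, whatever the AI remembered.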

| Aspect | Vibe coding | Structured development |
|---|---|---|
| Initial speed | Fast prototype | Slower start |
| Long-term maintenance | Increasing complexity | Predictable patterns |
| Team onboarding | Difficult without context | Clear conventions |
| Refactoring | High risk of breaks | Systematic approach |

Onboarding new team members becomes painful. Traditional codebases have patterns that experienced developers recognize. Vibe-coded projects often lack those patterns, forcing new contributors to reverse-engineer decisions that were never explicitly made.

Debugging, testing, and observability gaps

AI-generated code handles the happy path, meaning the scenario where everything goes right. Edge cases, error states, and unexpected inputs often go unaddressed. When something breaks in production, you're tracing through code you didn't write and may not fully understand.

Testing presents a similar challenge. AI rarely generates comprehensive test suites alongside code. The tests that do get written cover obvious scenarios while missing the edge cases that cause real incidents. Security suffers too: 45% of AI-generated code fails basic security tests when evaluated.

- Missing coverage: AI writes code that works for demos but lacks tests for boundary conditions

- Edge case blindness: Generated code handles ideal inputs but breaks on malformed data or network failures

- Debugging opacity: Tracing issues through unfamiliar patterns takes longer than debugging your own code

- Observability gaps: Logging, metrics, and tracing are afterthoughts, making production issues harder to diagnose

CI/CD pipelines can enforce coverage thresholds. They can't ensure tests validate meaningful behavior. Teams discover these gaps after incidents, not before.
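The gap between coverage and meaningful tests is easy to illustrate. In this sketch, the hypothetical `parseQuantityNaive` reaches full line coverage with a single happy-path test, while the boundary inputs listed below it are the ones that tend to surface in production. The function names and the 1 to 999 range are assumptions chosen for the example.

```typescript
// Happy-path version: a single test with "3" covers every line.
function parseQuantityNaive(input: string): number {
  return Number(input);
}

// Assumed business limit, for illustration only.
const MAX_QUANTITY = 999;

// Hardened version: accepts only whole numbers from 1 to MAX_QUANTITY.
function parseQuantity(input: string): number {
  const trimmed = input.trim();
  if (!/^\d+$/.test(trimmed)) {
    throw new RangeError(`Not a whole number: "${input}"`);
  }
  const value = Number(trimmed);
  if (value < 1 || value > MAX_QUANTITY) {
    throw new RangeError(`Quantity out of range: ${value}`);
  }
  return value;
}

// Boundary inputs the naive version silently accepts or turns into NaN.
const edgeCases = ["", "abc", "-3", "1e3", "0", "1000"];

// Returns true when the hardened parser refuses the input.
function rejects(input: string): boolean {
  try {
    parseQuantity(input);
    return false;
  } catch {
    return true;
  }
}
```

A coverage threshold is satisfied by the first function and one test. Only assertions over inputs like `edgeCases` show whether the behavior is actually safe.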

When vibe coding works (and when it doesn't)

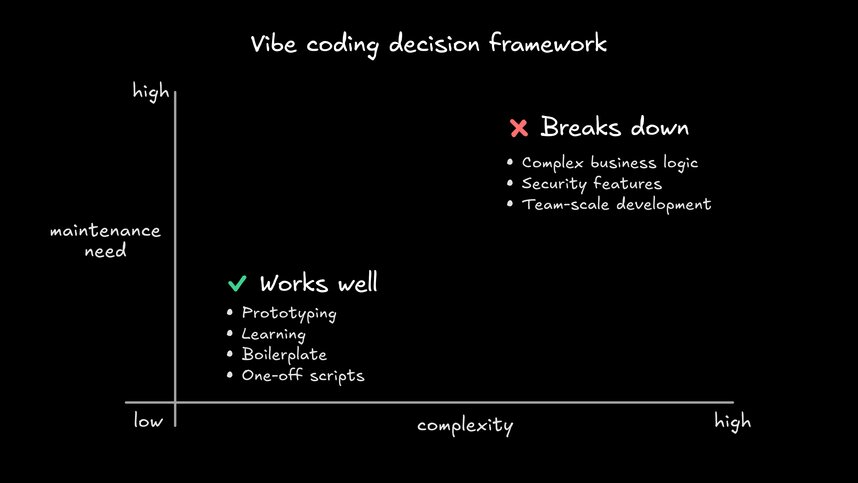

These limitations don't mean vibe coding is useless. They mean it's a tool with specific strengths and clear boundaries. Match the tool to the task and you get value. Mismatch it and you create problems.

Vibe coding works well for:

- Prototyping and exploration: Validating concepts before committing engineering time

- Learning: Understanding new frameworks through generated examples

- Boilerplate: Creating initial scaffolding for standard patterns

- One-off scripts: Data migrations or automation that won't need maintenance

Vibe coding breaks down for:

- Complex business logic: Multi-step workflows with intricate rules

- Performance-critical code: Algorithms requiring optimization or careful resource management

- Security-sensitive features: Authentication, payments, or data handling

- Team-scale development: Codebases with multiple contributors who need shared conventions

A marketing landing page fits vibe coding well. A payment processing system does not. The difference is the cost of getting things wrong and the need for long-term maintainability.

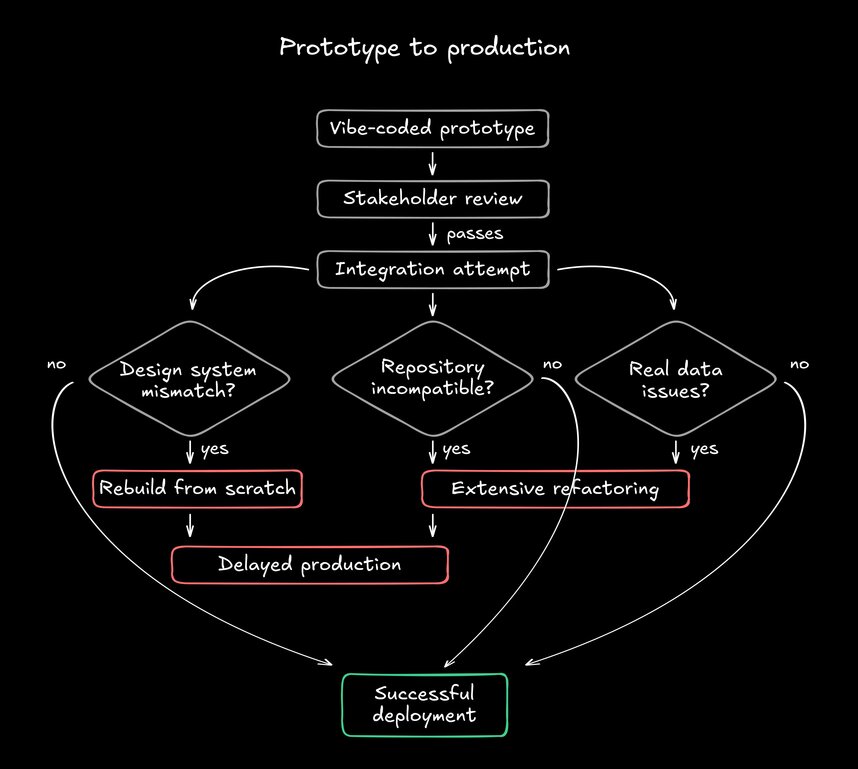

The pattern repeats: teams vibe-code a prototype, stakeholders get excited, then engineers face a choice. Rebuild with proper architecture, or harden the prototype. Neither is fast.

Why vibe-coded prototypes rarely survive to production

The gap between demo and deployment is where most vibe-coded projects die. Understanding why helps you decide when the approach makes sense and when it doesn't.

Generic outputs ignore your design system

Most vibe coding tools generate code from scratch using generic patterns. They don't know your component library exists and have never seen your design tokens.

The output might look right in a screenshot, but the code reveals mismatched buttons, off-scale spacing, and patterns no one on your team uses.

This means every prototype needs translation work before it fits your actual product. That translation often takes longer than building the feature properly from the start.

Code that can't merge into your repository

A prototype has no lasting value if it can't merge cleanly into your codebase. Vibe coding tools optimize for standalone demos, not integration. They export code that ignores your file structure, testing conventions, and build configuration.

Engineers face a choice: spend hours reshaping the export, or start over. Either way, the speed you gained evaporates.

No connection to real data or APIs

Without real data, everything looks better than it is. Placeholder content hides the messy realities of latency, authentication failures, and error states. These details decide whether a product feels fast or broken.

Prototypes built on fake data pass stakeholder reviews. Then reality hits during integration. The layout that looked perfect with three placeholder items breaks with thirty real ones. The form that worked with sample data fails on actual API responses.
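One low-cost way to surface these failures before integration is to check real responses at the boundary and not assume they match the sample shape. Here is a minimal sketch, assuming a hypothetical `Product` type and an endpoint that should return an array of products.

```typescript
// Hypothetical shape the prototype was built against.
type Product = { id: string; name: string; priceCents: number };

// Type guard: confirms an unknown value really has the Product shape.
function isProduct(value: unknown): value is Product {
  if (typeof value !== "object" || value === null) return false;
  const record = value as Record<string, unknown>;
  return (
    typeof record.id === "string" &&
    typeof record.name === "string" &&
    typeof record.priceCents === "number" &&
    Number.isFinite(record.priceCents)
  );
}

// Parse an API payload, failing loudly on the first entry that does not match.
function parseProducts(payload: unknown): Product[] {
  if (!Array.isArray(payload)) {
    throw new TypeError("Expected an array of products");
  }
  payload.forEach((item, index) => {
    if (!isProduct(item)) {
      throw new TypeError(`Product at index ${index} has an unexpected shape`);
    }
  });
  return payload as Product[];
}
```

Placeholder data will always pass a check like this. A real response with a null price or a numeric id will not, and the error names the exact item that broke.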

How Builder.io approaches vibe coding differently

The appeal of vibe coding is real. Teams want to move faster from idea to working software. The problem isn't wanting speed. It's that most tools optimize for the demo, not the deployment.

Builder.io takes a different approach. Instead of generating generic code from scratch, Builder.io works with your existing codebase, design system, and component library. The AI reads your repository and understands your patterns. Output follows your conventions because those conventions are the input.

This addresses the core limitations directly:

- Consistency: Builder.io uses your design tokens, naming patterns, and architecture. Generated code looks like code your team wrote because it's built from your building blocks.

- Maintainability: Changes are reviewable pull requests, not mysterious exports. Engineers keep full control over what merges.

- Real data: Builder.io connects to your APIs and data sources from the start. You test against actual conditions, not placeholders.

The visual editing layer lets product managers and designers iterate without breaking engineering conventions. Everyone works in the same system. The handoff friction disappears because there's nothing to hand off.

Builder.io doesn't eliminate engineering oversight. It reduces the low-value assembly work that eats engineering time. Designers and PMs move work forward. Engineers review, refine, and merge. Quality stays high because guardrails are built into the workflow.

Common questions about vibe coding limitations

Can vibe coding produce secure code?

Security requires deliberate attention to authentication, authorization, input validation, and data handling. Vibe coding tools, however, focus on functionality, not security.

They generate code that works but often lacks proper validation, exposes sensitive data, or uses vulnerable authentication patterns. Any security-sensitive feature needs human review and hardening.

Should teams ban vibe coding entirely?

Banning vibe coding throws away real value. The better approach is matching tools to tasks. Use vibe coding for exploration, prototypes, and throwaway experiments. Use structured development for production features, security-sensitive code, and anything that needs long-term maintenance.

How do you know when a vibe-coded prototype needs rebuilding?

Watch for these signals: the code doesn't follow your team's patterns, tests are missing or superficial, the file structure doesn't match your repository, and integrating with real APIs requires significant changes. If more than one applies, rebuilding is usually faster than retrofitting. Production-grade AI prototyping tools can help avoid this rebuild trap entirely.
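The rule of thumb above can be written down as a small checklist. This is only a sketch: the `PrototypeSignals` field names are hypothetical labels for the four signals listed, and the threshold mirrors the "more than one" guidance.

```typescript
// Hypothetical labels for the four warning signals described above.
type PrototypeSignals = {
  ignoresTeamPatterns: boolean;
  testsMissingOrSuperficial: boolean;
  fileStructureMismatch: boolean;
  realApiNeedsSignificantChanges: boolean;
};

// Suggests a rebuild when more than one signal applies.
function shouldRebuild(signals: PrototypeSignals): boolean {
  const applied = [
    signals.ignoresTeamPatterns,
    signals.testsMissingOrSuperficial,
    signals.fileStructureMismatch,
    signals.realApiNeedsSignificantChanges,
  ].filter(Boolean).length;
  return applied > 1;
}
```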

Building prototypes that survive to production

If your team has burned cycles rebuilding prototypes that couldn't survive real requirements, there's a better path. Start with tools that understand your system from the beginning, not tools that force you to translate output after the fact.

Builder.io connects directly to your repository and indexes your design system. This ensures prototypes speak the same visual and technical language as your product.

Changes live as reviewable diffs, and your existing tools like linters, type checks, and CI run as usual.

Sign up for Builder.io and see how prototyping changes when the tool already knows your codebase.