Ask any dev who’s been working for a while, and you’ll hear the same thing: Most of your time isn’t spent writing code. It’s spent reading it.

Plus, when your dev career levels up, that work only grows, as you review pull requests, map system architecture, and make sure everyone else’s changes play nicely together.

Now, AI churns out pages of code in seconds. Whether your title says “Junior” or “Principal,” you’re suddenly responsible for vetting a tidal wave of machine-generated commits at a more senior level.

Here, we’ll look at what effective code review entails, dissect the friction AI injects into traditional review workflows, and start thinking about how to partner with AI in a way that makes you feel more like a senior architect than a burned-out code monkey.

Think of a code review as borrowing a few minutes from future-you (and future teammates) to make sure today’s commit won’t become tomorrow’s “jfc, who wrote this?” moment.

At its best, a review…

- catches the obvious bugs and style guide mismatches.

- flags anything that might send the roadmap off a cliff next quarter.

- tests whether a reasonably caffeinated dev could parse code intent in under a minute.

- checks if code plays nicely with existing patterns and performance constraints.

- sniffs out potential security vulnerabilities.

Before you hit “approve,” you’ll want to ask, “Will this code make sense in six months? Does it leave the codebase better than it was before? Could I explain the design choices in a stand-up without breaking into apologetic jazz hands?”

Who should be reviewing code? Short answer: everyone with a GitHub login. After all, reading and reviewing code are what level you up in your dev career.

Junior devs check the ticket and ask, “Does this code work right now to solve the user story?”

Senior devs play 4D chess, understanding the impact the code has on the team and the product in the coming months and years. They know what architecture holds up and why, and they can eliminate overly complex code in favor of easy-to-grok functionality.

But here’s the thing. AI makes this senior-level mindset mandatory for all developers much sooner. LLMs can crank out code that compiles all day, but they stop at the question, “Does it work right now?”

Humans have to ask the harder stuff.

Ultimately, building the skill of answering these tough questions comes down to practice, but here are some code review techniques that can help:

- Start at the finish line. Skip the top-to-bottom tour. Jump straight to the line that writes to the DB, returns the API response, or flips the feature flag. Then work backward until you’re sure every step actually leads there—and nowhere weird in between.

- Build the bird’s eye view. While you read, sketch (in your head or on a napkin) how this code touches the rest of the system: Which services get pinged? Which globals get mutated? Which new package just hitched a ride? A quick 30,000-foot view exposes hidden side effects before they become production mysteries.

- Interrogate the code, not the author. Lob questions like “Why this approach over X?”, “What happens when `null` crashes the party?”, or “How would you unit-test the ugly part?” The goal is to uncover blind spots together, not to roast anyone’s skills.

- Channel your inner vigilante. Imagine malformed JSON, midnight traffic spikes, or a bored hacker poking every endpoint. Where does this snippet melt? Flag those hotspots now so they don’t page you later.
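
To make the `null` and malformed-JSON questions concrete, here is a minimal sketch of the kind of code that survives that interrogation. The `OrderPayload` shape and the `parseOrderPayload` name are hypothetical, invented purely for illustration; the point is that every "what if?" a reviewer might ask has a visible answer in the code.

```typescript
// Hypothetical payload shape, used only for illustration.
interface OrderPayload {
  orderId: string;
  quantity: number;
}

type ParseResult =
  | { ok: true; value: OrderPayload }
  | { ok: false; reason: string };

// Parses an untrusted request body and answers the reviewer's questions up front:
// what happens on null, on malformed JSON, and on a wrong shape?
function parseOrderPayload(raw: string | null | undefined): ParseResult {
  // "What happens when null crashes the party?"
  if (raw == null || raw.trim() === "") {
    return { ok: false, reason: "empty body" };
  }

  // "What about malformed JSON?"
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return { ok: false, reason: "malformed JSON" };
  }

  if (typeof data !== "object" || data === null) {
    return { ok: false, reason: "expected a JSON object" };
  }

  // "What if the fields are missing or the wrong type?"
  const { orderId, quantity } = data as Record<string, unknown>;
  if (typeof orderId !== "string" || orderId.length === 0) {
    return { ok: false, reason: "orderId must be a non-empty string" };
  }
  if (typeof quantity !== "number" || !Number.isInteger(quantity) || quantity <= 0) {
    return { ok: false, reason: "quantity must be a positive integer" };
  }

  return { ok: true, value: { orderId, quantity } };
}
```

Returning a result object instead of throwing is one design choice among several; it keeps every failure path visible in the diff, which is exactly what a reviewer working backward from the finish line wants to see.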

The skills above can be helpful, but where can you get more experience reviewing code to develop your own best practices?

- Make code reading a ritual. Block an hour a week to wander through a solid open-source repo, or the cleanest corner of your org’s codebase. When something feels odd, rope in a teammate or let an AI explain the plot twist. You’re stocking a mental library of good patterns, not hunting for bugs.

- Watch your team’s PR leaderboard. Which pull requests sail through review and which spiral into 30-comment marathons? Notice the difference in description quality, changeset size, and structure. Observing that flow teaches you what good looks like without writing a single line.

- Shrink the diff, shrink the pain. Monster commits fry reviewer brain cells. Practice slicing work into small, single-purpose pull requests. When using AI code tools, find ones like Fusion, which generate the tiniest possible diff for a given tweak. Smaller changesets mean faster, more thorough reviews—and happier humans.
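
If you want a nudge toward smaller changesets, one rough approach is to measure a diff before asking for review. The sketch below parses the text output of `git diff --numstat` (tab-separated added lines, deleted lines, and path, with `-` for binary files). The function names and the thresholds of 10 files and 400 changed lines are assumptions for illustration, not recommendations from any study or tool.

```typescript
interface DiffSummary {
  files: number;
  added: number;
  deleted: number;
}

interface ReviewLimits {
  maxFiles: number;
  maxChangedLines: number;
}

// Assumed thresholds; tune them to what your team can review comfortably.
const DEFAULT_LIMITS: ReviewLimits = { maxFiles: 10, maxChangedLines: 400 };

// Converts one numstat field to a line count. Binary files report "-",
// which is counted as zero changed lines.
function toCount(field: string | undefined): number {
  const n = parseInt(field ?? "", 10);
  return isNaN(n) ? 0 : n;
}

// Parses the text produced by `git diff --numstat`, where each line is
// "<added>\t<deleted>\t<path>".
function summarizeNumstat(numstat: string): DiffSummary {
  const summary: DiffSummary = { files: 0, added: 0, deleted: 0 };
  for (const line of numstat.split("\n")) {
    if (line.trim() === "") continue;
    const fields = line.split("\t");
    summary.files += 1;
    summary.added += toCount(fields[0]);
    summary.deleted += toCount(fields[1]);
  }
  return summary;
}

// Returns true when the changeset stays within the given limits.
function isReviewFriendly(
  summary: DiffSummary,
  limits: ReviewLimits = DEFAULT_LIMITS
): boolean {
  const changedLines = summary.added + summary.deleted;
  return summary.files <= limits.maxFiles && changedLines <= limits.maxChangedLines;
}
```

You could feed it the output of something like `git diff --numstat main...HEAD` in a pre-push hook or CI step and warn, rather than block, when a branch grows past the limits.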

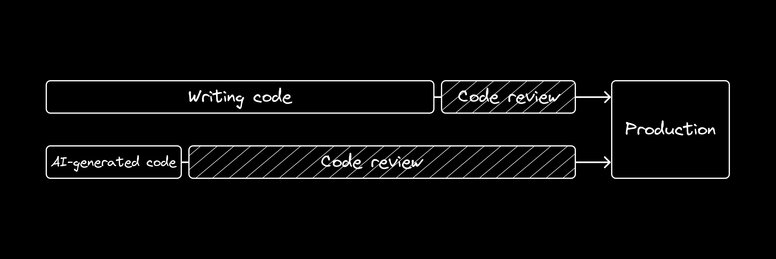

Here’s the problem. At any given org, the single biggest blocker to shipping code isn’t writing it. It’s the time a pull request sits idle, waiting for review. And AI isn’t helping.

AI code assistants speed up the writing of code, but they end up pouring gasoline on the PR backlog fire. They make huge changesets, often across many unrelated files, and they tend to touch files they don’t need to, creating extra work for reviewers.

Often, AI-generated code doesn’t actually speed up your team’s workflow.

At the end of the day, an AI assistant is focused on making sure your narrow task succeeds, much more like a junior dev than a senior one. They can be remarkably effective at coming up with solutions for problems, but in doing so, they often forget to check if anyone on your team already made code to solve them.

So, the loop looks like this:

- AI makes dizzying, epic changes to code.

- The human in the loop, whether working in Cursor or reviewing a PR, eventually gets tired of trying to track everything and starts hitting “Approve All” to keep work moving.

- Lines of code grow much faster than code quality.

- The test suite becomes the real gatekeeper, and production becomes the bug tracker.

Unfortunately, this isn’t just a hypothesis; studies echo this pain. Developers spend significantly more time debugging and fixing security issues in AI-generated code than in human-written code, and 59% of developers report that using AI coding tools leads to deployment issues at least half of the time.

Code review is where talented developers refine code to keep working for years to come. AI code generators exhaust traditional review resources through sheer quantity of code produced.

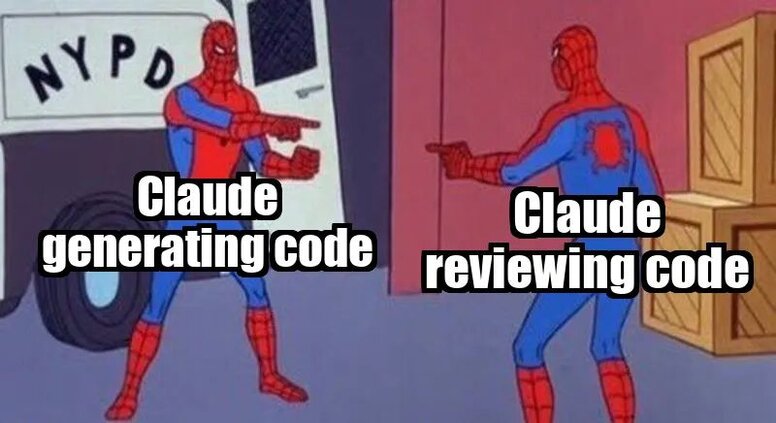

So, why not put more AI on the job? Can’t it rubber-stamp its own pull request?

That thought has spawned a wave of AI-powered code reviewers. For narrow jobs like spotting duplicated snippets, flagging known security bugs, or policing style guides, these bots are relentlessly helpful. Tools like Claude Code and CodeRabbit churn through pattern checks and trim review time for those mechanical chores.

But asking an AI to grade its own work is a bit like hiring spell-check to edit a novel. Sure, it’ll flag the commas and sentence fragments, but you’ll get zero insight into whether the plot works for readers.

Similarly, AI reviewers can confirm that the syntax is tidy and the patterns are familiar, but they can’t guarantee the business logic or overall architecture hasn’t secretly gone bananas. Worse, machine-generated code often looks more polished than human work, and its symmetry can hide deep nonsense.

The core issue is context blindness. These tools stumble on the same things humans excel at: spotting cross-file dependencies, weighing long-term architectural impact, and following a hunch down into the weeds.

In practice, today’s AI reviewers are best thought of as linters on steroids. They’re handy for surface-level cleanup, but nowhere near a substitute for human judgment. We’re still the bottleneck, and that’s crucial for code quality.

So, instead of focusing on code output quantity, we ideally need AI that writes higher-quality, easier-to-review code.

While it's possible to coax tools like Cursor into writing good, component-driven code, it often requires constant hand-holding and correction. You have to feed it the right context, double-check its work, and gently nudge it back on track when it starts to hallucinate.

The whole process starts to feel more tedious than just… writing the code yourself. Personally, I’d rather be a developer than an AI babysitter. I want to tell AI what to do, as if it’s a real junior dev, leave it alone, and then come back later to check as clean a diff as possible, with a good explanation of what it changed and why.

I’d rather be a senior code architect and reviewer than just a prompt context engineer.

Fusion is an AI-powered visual canvas that integrates directly with your existing codebase, giving it a native understanding of your components, design systems, and overall architecture.

Fusion is also built on a simple idea: small diffs are better than large ones.

What we’ve found in building the tool is that if an AI agent can truly go and grab any context it needs, by instrumenting your actual application to get a real-time understanding of how visuals and functionality are working, then it can figure out how to solve any problem.

For instance, imagine you're fixing a dark mode bug. You tell the agent, "This text is too dark and hard to read." A traditional AI, after asking which text you mean, may argue with you for a while about how the text is nearly pure white.

The Fusion agent, however, can see the problem live, and it can say something like:

"It looks like the rendered CSS color for this text is rgb(50, 50, 50). However, the design token applied in the code is --color-text-primary, which should resolve to rgb(230, 230, 230). I can see the style is being overridden by the global CSS file at app/do-not-use. I will fix the selectors’ specificities to ensure the correct color is applied."

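To show the kind of check that diagnosis implies, here is a minimal sketch that compares a rendered color against the value a design token should resolve to and computes the WCAG contrast ratio against a background. This is not how Fusion works internally; the function names are hypothetical, and the dark background of `rgb(18, 18, 18)` used in the usage note is an assumption, since the example above doesn't state one.

```typescript
type Rgb = [number, number, number];

// Parses strings like "rgb(50, 50, 50)"; other CSS color formats are out of scope here.
function parseRgb(css: string): Rgb {
  const m = /^rgb\(\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*\)$/.exec(css.trim());
  if (m === null) {
    throw new Error(`Unsupported color format: ${css}`);
  }
  return [Number(m[1]), Number(m[2]), Number(m[3])];
}

// WCAG 2.x relative luminance of an sRGB color.
function relativeLuminance([r, g, b]: Rgb): number {
  const linear = (channel: number): number => {
    const c = channel / 255;
    return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
  };
  return 0.2126 * linear(r) + 0.7152 * linear(g) + 0.0722 * linear(b);
}

// WCAG contrast ratio, from 1 (identical colors) to 21 (black on white).
function contrastRatio(foreground: string, background: string): number {
  const a = relativeLuminance(parseRgb(foreground));
  const b = relativeLuminance(parseRgb(background));
  return (Math.max(a, b) + 0.05) / (Math.min(a, b) + 0.05);
}

interface ColorReport {
  matchesToken: boolean; // does the rendered color equal the token's value?
  contrast: number;      // contrast of the rendered color on the background
  readable: boolean;     // meets the WCAG AA minimum of 4.5:1 for normal text
}

function checkTextColor(
  rendered: string,
  expectedFromToken: string,
  background: string
): ColorReport {
  const [r1, g1, b1] = parseRgb(rendered);
  const [r2, g2, b2] = parseRgb(expectedFromToken);
  const contrast = contrastRatio(rendered, background);
  return {
    matchesToken: r1 === r2 && g1 === g2 && b1 === b2,
    contrast,
    readable: contrast >= 4.5,
  };
}
```

Calling `checkTextColor("rgb(50, 50, 50)", "rgb(230, 230, 230)", "rgb(18, 18, 18)")` reports a token mismatch and an unreadable contrast, which matches the complaint in the example: the code says one thing, the rendered page says another.
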
Then, we force the agent to reuse your existing components and design system. Fusion maps rendered UI to the source files that generated it, so it always knows where to look for what, and if that context exists, it will use it.

If you ask a typical AI tool to "make this button purple," it might generate an entirely new button component. Fusion, however, knows that button corresponds to src/components/Button.tsx on line 12, and it will make the precise, single-line CSS change required in the correct file. It will also check if “purple” should mean an existing CSS variable.

Then, since you’re already working on a git branch, it raises a PR with its one-line diff and adds a summary explaining the change. The code reviewer has the easiest possible job.

In this example you can see Fusion only adds code needed for a search bar, reusing the codebase’s Angular Material components:

Instead of 1000-line edits across multiple unrelated files, you get surgical precision, only touching code that you asked the AI to change. Combine this with better PR hygiene—one PR per issue that you’re fixing in the code—and you now have AI-generated PRs that successfully use existing functions and fly through review.

Even when the AI chooses a pattern that doesn’t align with the code’s long-term plans, the PRs are still easier to patch up by hand. The goal was never to replace the human in the loop, but rather to empower us to do our job better.

AI hasn’t sidelined developers; it’s promoted us to strategic architects, steering quality while the bots crank out the boilerplate.

Thriving in that role means honing your code-reading instincts and wielding tools that ship tiny, review-friendly diffs rather than thousand-line nightmares. You can keep leveling up those skills and upgrading your AI toolkit to quit babysitting AI and start reviewing like an architect.

Builder.io visually edits code, uses your design system, and sends pull requests.
