How to Write Cursor AI Prompts That Don't Waste Your Time

Most developers prompt Cursor AI the same way they'd prompt ChatGPT: they type vague requests like "fix this bug" and wonder why the output doesn't fit their project. That's a real problem when 46% of developers actively distrust the accuracy of AI-generated code. Cursor reads your actual repository files, imports, and dependencies, but most prompts waste that context by treating it like a blank-slate conversation. This guide shows you how to write prompts that produce usable code the first time by giving clear context, setting explicit constraints, and specifying exactly what output you need.

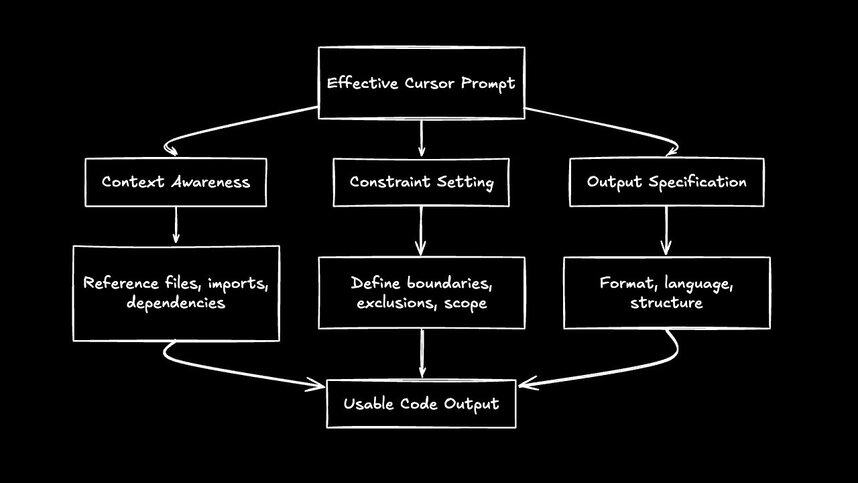

What makes a Cursor AI prompt effective

The best prompts for Cursor AI do three things: they give clear context about your codebase, set explicit constraints on what the AI should do, and specify exactly what output you need. Most developers waste time because they prompt Cursor the same way they'd prompt ChatGPT. They type "fix this bug" and wonder why the output doesn't fit their project.

Cursor is different. It reads your actual repository files, your imports, your dependencies. Effective prompts take advantage of that context instead of treating it like a blank-slate conversation.

- Context awareness: Cursor can see your files and project structure. Reference them directly instead of describing everything from scratch.

- Constraint setting: Boundaries produce better code than open-ended requests. Tell Cursor what not to do.

- Output specification: "Fix this" produces guesswork. "Return a TypeScript function that handles the null case" produces usable code.

How to write Cursor AI prompts that produce usable code

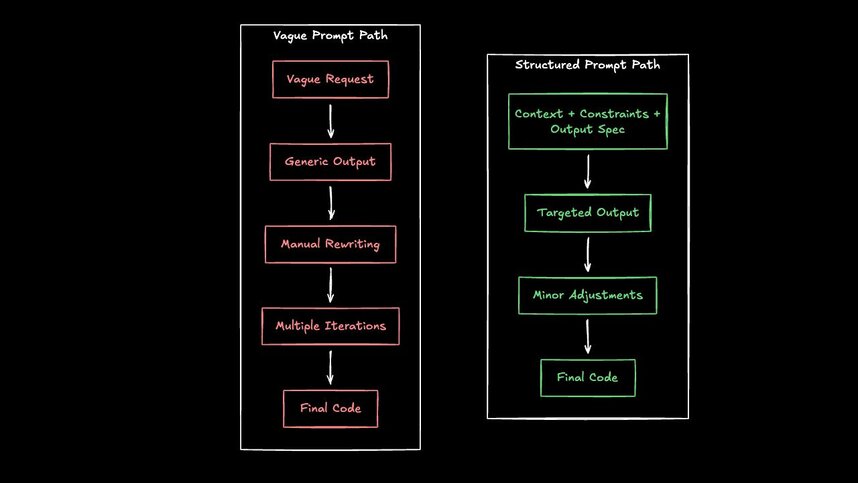

Good prompts follow a predictable structure. You ground the AI in your system, tell it exactly what you want, and set limits on scope. Skip any of these and you'll spend more time fixing the output than you saved generating it.
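
One way to make that structure a habit is to assemble prompts from named parts. The sketch below is a minimal illustration, not anything Cursor provides: `PromptParts`, `bullets`, and `buildPrompt` are hypothetical names, and the section labels are an assumption about what reads clearly.

```typescript
// Hypothetical helper for illustration; nothing here is a Cursor API.
interface PromptParts {
  task: string;          // the one thing you want done
  context: string[];     // files and patterns Cursor should follow
  constraints: string[]; // limits on scope
  output: string;        // the shape the result should take
}

// Render a list of strings as dash bullets, one per line.
function bullets(items: string[]): string {
  return items.map((item) => `- ${item}`).join("\n");
}

// Assemble the parts into one prompt, skipping any empty section.
function buildPrompt(parts: PromptParts): string {
  const sections: string[] = [
    parts.task,
    parts.context.length > 0 ? `Context:\n${bullets(parts.context)}` : "",
    parts.constraints.length > 0 ? `Constraints:\n${bullets(parts.constraints)}` : "",
    parts.output !== "" ? `Output: ${parts.output}` : "",
  ];
  return sections.filter((section) => section !== "").join("\n\n");
}

const buttonPrompt = buildPrompt({
  task: "Create a Button component.",
  context: [
    "Use the design tokens from tokens.ts",
    "Follow the pattern in components/Input.tsx",
  ],
  constraints: ["Use existing components from our design system, don't create new ones"],
  output: "A TypeScript React component with props for variant, size, and disabled state",
});

console.log(buttonPrompt);
```

If you can't fill in one of the fields, that is usually the part of the prompt Cursor will end up guessing.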

Provide context about your codebase and tech stack

Start every prompt by telling Cursor what it's working with. Mention your framework, component library, and any patterns that matter.

A vague prompt looks like this: "Create a button component."

A better prompt looks like this: "Create a Button component using our existing design tokens from tokens.ts, following the pattern in components/Input.tsx, with TypeScript props for variant, size, and disabled state."

The second prompt produces code that fits your project. The first produces generic code you'll rewrite anyway.

Specify your desired output format

Tell Cursor exactly what shape the output should take, whether it's a React component, tests in Jest format, or a function with inline comments. Being specific about the desired format eliminates guesswork and produces more accurate code.

Set constraints and boundaries

Constraints prevent scope creep. Without them, Cursor might refactor your entire file when you only wanted a small change.

- "Only modify the handleSubmit function"

- "Use existing components from our design system, don't create new ones"

- "Keep the implementation under 30 lines"

- "Don't change the function signature"

Best Cursor AI prompts for building new features

Feature-building prompts need more context than debugging prompts. You're asking Cursor to create something new, so you need to describe where it fits in your existing system.

Scaffolding components with your design system

When generating new components, reference your design system explicitly. A prompt like "Create a Card component that uses our spacing tokens from theme.ts, follows the composition pattern in components/Modal.tsx, and accepts children, title, and optional footer props" produces consistent output.

If your team uses specific naming conventions or file structures, include those too. Cursor follows explicit instructions well, but it can't read your team's mind.

Adding functionality to existing code

Extension prompts should reference the current implementation. Try: "Add pagination to the UserList component. Use the existing usePagination hook from hooks/, maintain the current loading state pattern, and add prev/next buttons using our Button component."

Point Cursor to the specific files and patterns you want it to follow. The more specific your references, the less cleanup you'll do later.

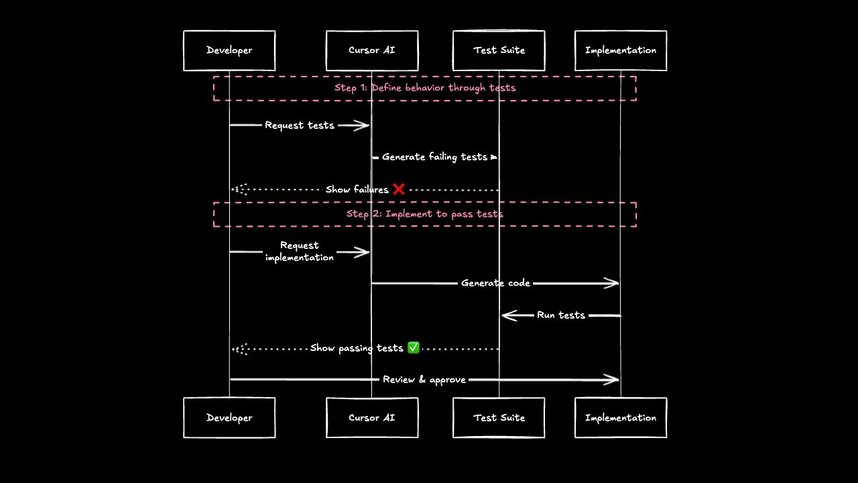

Creating test-driven implementations

Test-first prompts work well with Cursor. Start with: "Write failing tests for a validateEmail function that should return true for valid emails, false for invalid formats, and throw for null input. Use Jest and follow our test file conventions."

Once you have tests, follow up with: "Now implement validateEmail to pass these tests."

This two-step approach produces more reliable code than asking for both at once.
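
Here is a rough sketch of where the two steps might land. The regex is a deliberately simple assumption rather than a complete email validator, and the cases are written as a plain table instead of Jest tests so the example runs on its own; in a real project each row would be a Jest `test`.

```typescript
// Step 2: one possible implementation. The pattern is a simplifying
// assumption: one "@", no whitespace, and a dot in the domain part.
function validateEmail(input: string | null): boolean {
  if (input === null) {
    throw new TypeError("validateEmail: input must not be null");
  }
  return /^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(input);
}

// Step 1, condensed: the behavior the failing tests would pin down.
const cases: Array<[string, boolean]> = [
  ["dev@example.com", true],
  ["no-at-sign.example.com", false],
  ["two@@example.com", false],
  ["missing-domain@", false],
];

for (const [email, expected] of cases) {
  const status = validateEmail(email) === expected ? "ok" : "FAIL";
  console.log(`${status}: ${email}`);
}
```

Because the tests exist before the implementation, you can see at a glance whether Cursor's second answer actually satisfies the first.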

Best Cursor AI prompts for debugging and fixing errors

Debugging prompts need specific error context. Cursor can't guess what's wrong from "this doesn't work." Give it the actual error message, the relevant code, and what you expected to happen.

Diagnosing build and type errors

Copy the full error message into your prompt. The precision pays off, since developers spend 35-50 percent of their time debugging and validating code. A good debugging prompt looks like: "I'm getting this TypeScript error: Type 'string | undefined' is not assignable to type 'string' on line 42 of UserProfile.tsx. The user.name property comes from our API response type in types/api.ts. Fix the type error without changing the API type."
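
For a sense of what a constrained fix could look like, here is a minimal sketch. `ApiUser` is a hypothetical stand-in for the type in types/api.ts, and the fallback string is an assumption; the point is that the narrowing happens where the value is used, and the API type stays untouched.

```typescript
// Hypothetical stand-in for the response type in types/api.ts.
interface ApiUser {
  id: number;
  name?: string; // optional, so reading it yields string | undefined
}

// Before: `const displayName: string = user.name;` fails to compile,
// because undefined is not assignable to string.
// After: supply a fallback at the use site and leave ApiUser as it is.
function getDisplayName(user: ApiUser): string {
  return user.name ?? "Unknown user";
}

console.log(getDisplayName({ id: 1, name: "Ada" }));
console.log(getDisplayName({ id: 2 }));
```

Without the "don't change the API type" constraint, an equally valid fix would be making `name` required, which could silently break every other consumer of that type.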

Include the stack trace for runtime errors. The more context Cursor has, the more accurate its fix will be.

Iterative debugging with console logs

For complex bugs, use Cursor to add strategic logging first. Try: "Add console.log statements to trace the data flow through processOrder. Log the input, the result of each transformation step, and the final output. Use descriptive labels."
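
The result of a logging prompt like that might resemble the sketch below. The `Order` shape and the discount step are invented for illustration, since the real processOrder will look different in your codebase; what carries over is one labeled log per step.

```typescript
// Hypothetical order shape, assumed for this example only.
interface OrderItem {
  price: number;
  quantity: number;
}

interface Order {
  items: OrderItem[];
  discountRate: number; // e.g. 0.5 for 50% off
}

function processOrder(order: Order): number {
  console.log("[processOrder] input:", JSON.stringify(order));

  // Step 1: sum price * quantity across all items.
  const subtotal = order.items.reduce(
    (sum, item) => sum + item.price * item.quantity,
    0,
  );
  console.log("[processOrder] subtotal:", subtotal);

  // Step 2: compute the discount from the subtotal.
  const discount = subtotal * order.discountRate;
  console.log("[processOrder] discount:", discount);

  // Step 3: apply the discount.
  const total = subtotal - discount;
  console.log("[processOrder] final total:", total);
  return total;
}

processOrder({ items: [{ price: 10, quantity: 2 }], discountRate: 0.5 });
```

The shared `[processOrder]` prefix makes the trace easy to filter in a noisy console and easy to strip out once the bug is found.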

Once you've identified the problem area, follow up with a targeted fix prompt. This systematic approach beats asking Cursor to "find and fix the bug" in one shot.

Best Cursor AI prompts for refactoring code

Refactoring prompts need clear before-and-after specifications. You're changing structure while preserving behavior, so tell Cursor exactly what pattern you want to move toward.

Improving code structure and readability

Specify the refactoring pattern you want. Instead of "clean up this code," try: "Extract the validation logic from handleSubmit into a separate validateFormData function. Keep the same behavior but improve readability. The new function should return an object with isValid and errors properties."
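
A sketch of the target shape helps show why that prompt is specific enough. The form fields and validation rules here are hypothetical, and representing `errors` as an array of strings is an assumption, since the prompt only says the result has isValid and errors properties.

```typescript
// Hypothetical form shape, assumed for this example only.
interface SignupForm {
  email: string;
  password: string;
}

interface ValidationResult {
  isValid: boolean;
  errors: string[];
}

// Extracted from handleSubmit: pure validation with no side effects.
function validateFormData(data: SignupForm): ValidationResult {
  const errors: string[] = [];
  if (!data.email.includes("@")) {
    errors.push("Email must contain @");
  }
  if (data.password.length < 8) {
    errors.push("Password must be at least 8 characters");
  }
  return { isValid: errors.length === 0, errors };
}

// handleSubmit keeps its behavior but now delegates the checks.
function handleSubmit(data: SignupForm): string {
  const { isValid, errors } = validateFormData(data);
  if (!isValid) {
    return `Rejected: ${errors.join("; ")}`;
  }
  return "Submitted";
}

console.log(handleSubmit({ email: "dev@example.com", password: "long-enough" }));
console.log(handleSubmit({ email: "nope", password: "short" }));
```

Naming the return shape in the prompt is what lets you review the refactor quickly: either the new function returns isValid and errors, or it doesn't.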

For complex refactors, break them into steps. Ask Cursor to do one transformation at a time so you can verify each change.

Converting between frameworks or patterns

Migration prompts need explicit source and target patterns. A good example: "Convert this class component to a functional component using hooks. Replace this.state with useState, componentDidMount with useEffect, and keep the same prop interface. Don't change any business logic."

Reference existing converted code if you have it. "Follow the pattern we used in components/Dashboard.tsx when it was converted" gives Cursor a concrete example to match.

Common Cursor AI prompting mistakes that waste time

These mistakes cost the most time. Avoid them and your Cursor sessions become dramatically more productive.

| Mistake | What happens | Better approach |
|---|---|---|
| Underspecification | "Make this better" produces random changes | Specify exactly what "better" means |
| Context truncation | Massive prompts lose important details | Focus on relevant files only |
| Scope creep | One prompt tries to do everything | Break large tasks into steps |
| Ambiguous requirements | No clear success criteria | Define what "done" looks like |

The most common mistake is treating Cursor like a mind reader. It's powerful, but it needs explicit instructions. Every minute you spend writing a clear prompt saves five minutes of cleanup.

How to create reusable Cursor AI prompts for your team

Reusable prompt patterns enforce consistency across your team. Instead of everyone writing prompts differently, create templates that embed your standards.

- Coding standards: Include style guide references: "Follow our ESLint config and Prettier formatting"

- Commit conventions: "Generate a commit message following Conventional Commits format"

- Architecture patterns: "Use the repository pattern from services/ for data access"

- Testing requirements: "Include unit tests with at least 80% coverage"

Document your best prompts in a shared location. When someone finds a prompt pattern that works well, the whole team benefits.
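
If your shared location is the repository itself, the standards can live in code. This is only a sketch under that assumption: `TEAM_STANDARDS` and `withTeamStandards` are hypothetical names, and the rules are the example ones from the list above.

```typescript
// Hypothetical shared module; the rules mirror the examples in this section.
const TEAM_STANDARDS: string[] = [
  "Follow our ESLint config and Prettier formatting",
  "Use the repository pattern from services/ for data access",
  "Include unit tests with at least 80% coverage",
];

// Append the team's standing rules to any task description.
function withTeamStandards(
  task: string,
  standards: string[] = TEAM_STANDARDS,
): string {
  const rules = standards.map((rule) => `- ${rule}`).join("\n");
  return `${task}\n\nTeam standards:\n${rules}`;
}

console.log(withTeamStandards("Add pagination to the UserList component."));
```

Keeping the rules in one place means a change to your standards updates every prompt that uses them.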

When text prompts hit their limits

Better prompts reduce review cycles, which matters when engineers take around 13 hours on average to merge a pull request. When Cursor output matches your team's patterns, reviewers spend less time requesting changes and more time shipping features.

The real productivity gain isn't generating code faster. It's generating code that doesn't need to be rewritten.

But text-based prompts have limits. You're still describing visual layouts in words and hoping the AI interprets them correctly. For frontend work especially, this translation step introduces friction and fidelity loss.

This is where visual-first AI tools offer a different approach. Instead of describing UI in text, platforms like Builder.io let teams work with visual context and real components, allowing the AI to see your design system and produce code that matches. If your team is tired of describing what UI should look like, exploring how visual context changes the equation can be a powerful next step.