How to Write Better Prompts for AI Code Assistants

Most developers have asked an AI to "build a login form" and received code that looks right but doesn't match their tech stack, component library, or team standards. It is a familiar problem for the 80% of developers now using AI tools in their workflows. The gap between generic AI outputs and mergeable code comes down to how you write prompts. This guide covers practical strategies for writing AI coding prompts that produce code your team can actually ship, from including the right context upfront to building iteratively and referencing your design system explicitly.

What are AI coding prompts and why they matter

The best coding prompts for AI assistants are specific instructions that include your tech stack, existing patterns, and clear constraints. In coding, prompts do more than ask questions; they set behavioral rules, provide technical context, and define what success looks like.

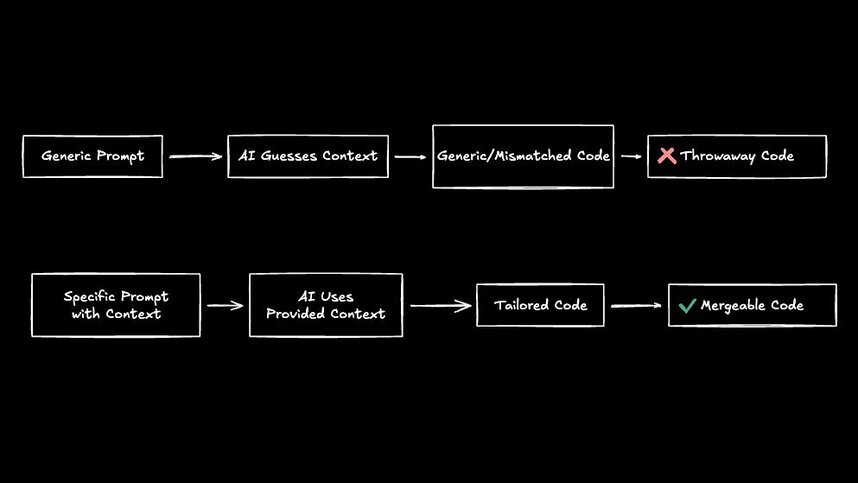

The difference between "build a login form" and "build a React login form using our AuthContext, styled-components tokens, and Formik validation" is the difference between throwaway code and mergeable code. Generic prompts force the AI to guess. Guesses rarely match your codebase.

A few concepts help you write better prompts:

- Context window: The amount of information an AI can process at once. Longer windows let you include more codebase details.

- Tokens: The units AI uses to measure text length. Complex prompts consume more tokens, limiting what fits.

- Hallucinations: When AI generates plausible-sounding but incorrect code. Clear constraints reduce this risk.
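To make the context window and token ideas concrete, here is a minimal sketch of a budget check before sending a prompt. The four-characters-per-token ratio is only a rough rule of thumb for English text and is an assumption here; real tokenizers differ by model, and the function names are hypothetical.

```typescript
// Rough heuristic only: real tokenizers vary by model and by content.
const CHARS_PER_TOKEN_ESTIMATE = 4; // assumption, not a model constant

// Estimate how many tokens a piece of text might consume.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN_ESTIMATE);
}

// Check whether all prompt parts together stay within a token budget.
function fitsBudget(parts: string[], tokenBudget: number): boolean {
  const total = parts.reduce((sum, part) => sum + estimateTokens(part), 0);
  return total <= tokenBudget;
}
```

A check like this can help decide which codebase details to include when not everything fits.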

Prompt strategies that produce mergeable code

Moving beyond "be specific" requires understanding how to structure prompts that your team can actually ship. The strategies below focus on including the right context, setting clear boundaries, and building iteratively.

Include your tech stack in every prompt

Generic prompts produce generic code. When you ask for "a dashboard component," the AI has no idea whether you use React or Vue, Tailwind or styled-components. It fills gaps with assumptions that rarely match your setup.

Effective prompts front-load technical context. Include your framework, key libraries, and existing patterns. Reference specific components, hooks, or utilities your team has already built.

A context-rich prompt might look like: "Using Next.js 14 with App Router, TypeScript, and Tailwind, create a dashboard component that extends our existing Card component and integrates with our GraphQL User query returning id, email, and role."
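One way to keep that context consistent across prompts is to store it once and prepend it to each task. The sketch below assumes a hypothetical `ProjectContext` shape and `buildContextPrompt` helper; neither comes from any particular tool.

```typescript
// Hypothetical shape for the context a team wants in every prompt.
interface ProjectContext {
  framework: string;
  language: string;
  styling: string;
  existingPieces: string[]; // components, hooks, or queries already in the codebase
}

// Prepend stack details to the task and list what the AI should reuse.
function buildContextPrompt(ctx: ProjectContext, task: string): string {
  const stack = `Using ${ctx.framework}, ${ctx.language}, and ${ctx.styling}`;
  const reuse =
    ctx.existingPieces.length > 0 ? ` Reuse: ${ctx.existingPieces.join("; ")}.` : "";
  return `${stack}, ${task}.${reuse}`;
}

const dashboardPrompt = buildContextPrompt(
  {
    framework: "Next.js 14 with App Router",
    language: "TypeScript",
    styling: "Tailwind",
    existingPieces: [
      "our existing Card component",
      "our GraphQL User query returning id, email, and role",
    ],
  },
  "create a dashboard component",
);
```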

Use constraints to reduce ambiguity

Constraints paradoxically improve AI output. Open-ended requests give the AI too many choices, leading to generic solutions. Bounded problems with clear success criteria produce focused, usable code.

| Vague Prompt | Constrained Prompt |
|---|---|
| "Create a dashboard" | "Create a dashboard with 3 metric cards using our Card component, fetching from /api/metrics, handling loading and error states" |
| "Add authentication" | "Add JWT authentication to Express routes, store tokens in httpOnly cookies, implement refresh token rotation" |
| "Improve performance" | "Reduce bundle size by code-splitting admin routes and lazy-loading the charts library" |

The constrained versions tell the AI exactly what to build, what components to use, and what edge cases to handle. Less ambiguity means fewer rewrites.
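A typed task description can make missing constraints obvious before the prompt is sent. This is a minimal sketch; the `ConstrainedTask` fields and the `renderConstrainedPrompt` helper are illustrative assumptions, not a standard format.

```typescript
// Hypothetical structure that forces the author to state constraints.
interface ConstrainedTask {
  goal: string; // what to build
  uses: string[]; // components or libraries the output must use
  dataSource?: string; // endpoint or query to fetch from
  mustHandle: string[]; // states or edge cases to cover
}

// Turn the structured task into a single prompt sentence.
function renderConstrainedPrompt(taskSpec: ConstrainedTask): string {
  const clauses: string[] = [taskSpec.goal];
  if (taskSpec.uses.length > 0) clauses.push(`using ${taskSpec.uses.join(" and ")}`);
  if (taskSpec.dataSource) clauses.push(`fetching from ${taskSpec.dataSource}`);
  if (taskSpec.mustHandle.length > 0) {
    clauses.push(`handling ${taskSpec.mustHandle.join(" and ")} states`);
  }
  return clauses.join(", ");
}

const metricsPrompt = renderConstrainedPrompt({
  goal: "Create a dashboard with 3 metric cards",
  uses: ["our Card component"],
  dataSource: "/api/metrics",
  mustHandle: ["loading", "error"],
});
```

Because `uses` and `mustHandle` are required fields, an empty list is a visible decision rather than an oversight.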

Build iteratively instead of asking for everything at once

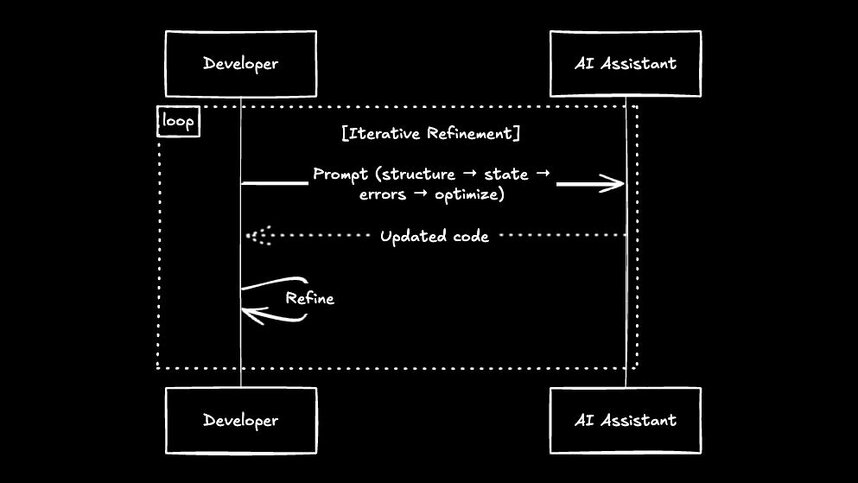

Treating AI as a one-shot oracle leads to disappointment. Treating it as a pair programmer leads to better code. The prompt-review-refine cycle mirrors how actual development works.

Start with structure, then layer in complexity:

- Initial scaffold: "Create the component structure with props interface"

- Add behavior: "Now add state management for form validation"

- Handle edge cases: "Add error boundaries and loading states"

- Optimize: "Refactor to use our existing useFetch hook"

Each iteration builds on the previous output. You catch issues early and guide the AI toward your standards incrementally.
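The cycle can be sketched as a loop that sends one step at a time while carrying the full conversation forward. The `Assistant` type below is a hypothetical stand-in for whatever client your tool exposes (real clients are usually asynchronous), and in practice a human reviews each reply before sending the next step.

```typescript
type ChatMessage = { role: "user" | "assistant"; content: string };

// Hypothetical stand-in for a real assistant call; swap in your tool's client.
type Assistant = (messages: ChatMessage[]) => string;

// Send each step in order, keeping earlier prompts and replies as context.
function runIterations(steps: string[], assistant: Assistant): ChatMessage[] {
  const conversation: ChatMessage[] = [];
  for (const step of steps) {
    conversation.push({ role: "user", content: step });
    // The assistant sees everything so far, so later steps refine earlier output.
    const reply = assistant(conversation);
    conversation.push({ role: "assistant", content: reply });
  }
  return conversation;
}

const refinementSteps = [
  "Create the component structure with props interface",
  "Now add state management for form validation",
  "Add error boundaries and loading states",
  "Refactor to use our existing useFetch hook",
];

// Fake assistant that only reports how much context it received.
const echoAssistant: Assistant = (messages) =>
  `draft based on ${messages.length} message(s)`;

const transcript = runIterations(refinementSteps, echoAssistant);
```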

Reference your design system explicitly

The fastest path to unusable AI code is letting it reinvent your component library. Every team has established patterns, design tokens, and approved components. Prompts that reference these produce code that fits.

Include these elements in design-system-aware prompts:

- Specific component names and their props

- Design token variables instead of hardcoded colors or spacing

- Accessibility requirements upfront

- Links to existing similar implementations in your codebase

When the AI knows to use your Button component instead of creating a new one, you skip the rewrite cycle entirely.
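A quick check on generated output can confirm whether the token guidance was followed. The sketch below flags hardcoded hex colors so they can be swapped for design tokens; the function name is hypothetical, and the pattern is deliberately simple, so it can also match ID selectors such as `#add`.

```typescript
// Matches 3-, 4-, 6-, or 8-digit hex color literals such as #fff or #1a2b3c.
const HEX_COLOR_PATTERN = /#(?:[0-9a-fA-F]{3,4}|[0-9a-fA-F]{6}|[0-9a-fA-F]{8})\b/g;

// Return every hardcoded hex color found in a piece of generated code.
function findHardcodedColors(generatedCode: string): string[] {
  return generatedCode.match(HEX_COLOR_PATTERN) ?? [];
}

const flaggedColors = findHardcodedColors(
  "color: #ff0000; background: var(--color-primary); border-color: #FFF;",
);
```

Any hits are a cue to re-prompt with the specific token names the output should have used.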

Prompt templates for common workflows

Templates save time and produce consistent results. These examples cover tasks developers face daily, structured to produce mergeable code rather than demos.

Component generation prompts

Component generation works best when you specify file structure, prop types, and related files upfront.

Create a [ComponentName] component that:

- Uses our base [BaseComponent] as foundation

- Accepts props: [list specific props with types]

- Follows our file structure: component.tsx, component.styles.ts, component.test.tsx

- Includes Storybook story with [specific variants]

- Handles these states: loading, error, empty, success

This template produces a complete component package, not just a single file that needs manual expansion.
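If the template lives in a shared file, a small helper can fill the bracketed placeholders and fail loudly when one is forgotten. This is a sketch under assumptions: `fillTemplate` and the example values (`PriceTag`, `Card`) are hypothetical.

```typescript
// Replace each [Placeholder] with its value; throw if a value is missing.
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\[([^\]]+)\]/g, (placeholder: string, key: string) => {
    const value: string | undefined = values[key];
    if (value === undefined) {
      throw new Error(`No value provided for ${placeholder}`);
    }
    return value;
  });
}

const componentTemplate =
  "Create a [ComponentName] component that:\n- Uses our base [BaseComponent] as foundation";

const filledTemplate = fillTemplate(componentTemplate, {
  ComponentName: "PriceTag", // hypothetical component name
  BaseComponent: "Card",
});
```

Throwing on a missing value keeps half-filled templates, with literal brackets still in them, from reaching the assistant.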

Code review and debugging prompts

AI excels at pattern recognition across code. Use it as a second pair of eyes for catching issues humans miss.

Review this code for issues:

[paste code]

Check specifically for:

- Race conditions in async operations

- Missing error handling

- Memory leaks in useEffect

- Accessibility violations

- Security vulnerabilities in data handling

Specific review criteria produce specific findings. Generic "review this code" prompts produce generic observations.

Design-to-code translation prompts

Bridging Figma to functional components requires translating visual specifications into technical requirements.

Convert this design to code:

- Design shows: [describe key visual elements]

- Use our tokens: spacing-md, color-primary, typography-body

- Responsive breakpoints at: sm, md, lg

- Interactive states: hover, active, disabled, loading

- Animation: 200ms ease-out transitions on hover

Including token names and interaction states upfront prevents the back-and-forth that slows design implementation.

How to review and refine AI-generated code

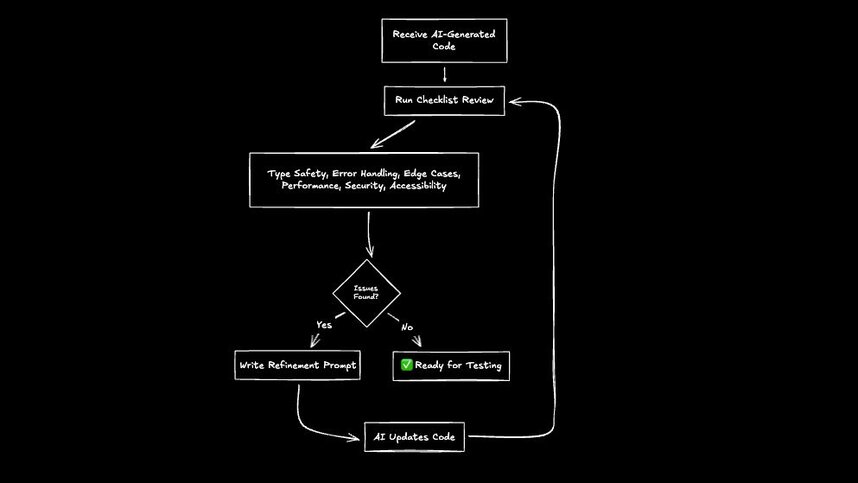

First drafts from AI rarely ship without changes. The review-refine cycle turns suggestions into production code.

Run every AI output through this checklist:

- Type safety: Does it satisfy TypeScript without using any?

- Error handling: Are all failure modes addressed?

- Edge cases: Does it handle empty states, nulls, and boundary conditions?

- Performance: Any obvious inefficiencies or missing optimizations?

- Security: Input validation, sanitization, and authorization checks present?

- Accessibility: Keyboard navigation, screen reader support, ARIA labels included?

When issues surface, prompt for specific improvements rather than generic requests. "Add proper TypeScript types, avoiding any" produces better results than "improve the types."
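As an illustration of what "avoiding any" can look like in the result, the sketch below parses untrusted JSON as `unknown` and narrows it with a type guard. The `User` shape is an assumption chosen for the example.

```typescript
// Assumed shape for illustration.
interface User {
  id: string;
  email: string;
  role: string;
}

// Type guard: narrows an unknown value to User only if every field checks out.
function isUser(value: unknown): value is User {
  if (typeof value !== "object" || value === null) return false;
  const record = value as Record<string, unknown>;
  return (
    typeof record["id"] === "string" &&
    typeof record["email"] === "string" &&
    typeof record["role"] === "string"
  );
}

// Parse JSON without letting `any` leak into the rest of the code.
function parseUser(json: string): User {
  const parsed: unknown = JSON.parse(json);
  if (!isUser(parsed)) {
    throw new Error("Response is not a valid User");
  }
  return parsed;
}
```

The guard also covers the error-handling item on the checklist, since malformed data fails at the boundary instead of deeper in the component tree.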

Common prompting mistakes that waste time

Understanding what breaks AI outputs helps you avoid wasted cycles. These mistakes lead to code that looks right but fails in production.

Ignoring codebase context

Asking for "a user dashboard" produces vanilla HTML or generic React. The AI has no knowledge of your state management, component library, or API structure. It fills gaps with assumptions.

The fix is simple: always include your tech stack, key dependencies, and existing patterns. Front-load context so the AI works within your constraints from the start.

Skipping code review

AI outputs often look correct but contain subtle bugs. Trust-but-verify is the only safe approach.

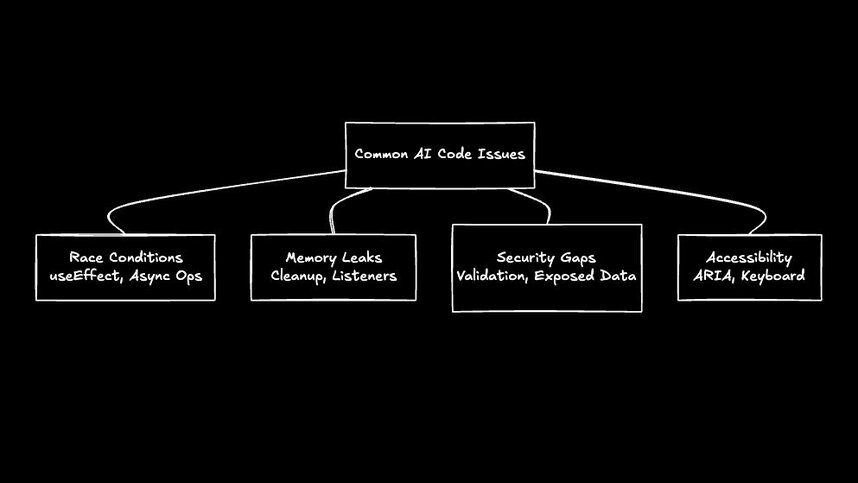

Watch for these common AI mistakes:

- Race conditions: Especially in useEffect and async operations

- Memory leaks: Forgotten cleanup functions, missing event listener removal

- Security gaps: Missing input validation, exposed sensitive data (48% of AI code contains security vulnerabilities)

- Accessibility misses: Incorrect ARIA attributes, missing keyboard handlers
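The race-condition item is easier to spot in review once you know the usual fix. Here is a framework-free sketch of a "latest request wins" guard; `createLatestOnly` is a hypothetical helper, and inside a React effect the same idea is often expressed with a cleanup flag or an `AbortController`.

```typescript
// Wrap a callback so only the most recently started request may apply its result.
function createLatestOnly<T>(apply: (value: T) => void): () => (value: T) => void {
  let latestId = 0;
  return function track(): (value: T) => void {
    const id = ++latestId; // each new request supersedes the previous one
    return (value: T) => {
      if (id === latestId) apply(value); // ignore responses from superseded requests
    };
  };
}

const appliedResults: string[] = [];
const trackSearch = createLatestOnly<string>((result) => {
  appliedResults.push(result);
});

// Simulate two overlapping requests whose responses arrive out of order.
const resolveFirst = trackSearch();
const resolveSecond = trackSearch();
resolveSecond("results for 'ab'");
resolveFirst("results for 'a'"); // stale: arrives late and is dropped
```

Code without a guard like this would let the slower, older response overwrite the newer one.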

Treating outputs as production-ready

AI is a drafting tool, not a senior engineer. Every output needs review, testing, and often refinement before it ships, especially since AI-generated PRs contain 1.7x more issues than human-written ones.

This validation step is non-negotiable: run linters, execute tests, and check bundle size impact. Automated evals help you prevent regressions and measure success systematically. Always review outputs against team standards and test edge cases manually before shipping.

How Builder.io eliminates prompt engineering overhead

The strategies above work. They also require significant effort to maintain context, reference design systems, and validate outputs manually. Builder.io eliminates much of this overhead by acting as an AI frontend engineer that already knows your codebase.

Builder.io reads your repository, indexes your design system, and understands your patterns before generating code. A prompt like "create a product card" automatically uses your Card component, follows your spacing tokens, and matches your established patterns. No manual context injection required.

The visual editing layer lets PMs and designers refine AI outputs without touching code, while engineers maintain control over the final merge. This eliminates the rewrite cycles that plague most AI-generated code and keeps everyone working in the same system.

Getting started with better AI prompts

Start with one change: add your tech stack and component references to every prompt. You'll see immediate improvement in output quality.

For teams tired of prompt engineering gymnastics to get usable code, see how Builder.io handles the context automatically by learning from your codebase.