When you build a traditional app, you write tests and sleep easy (well, easy-ish). But turn an LLM loose in your product and suddenly, you’re not just squashing bugs. You’re wrangling a chaos engine.

So, how do you keep AI on the rails and measure its success?

We’ve spent a whole bunch of time engineering and improving agentic AI features in Fusion, and for us, a good chunk of our success comes down to evals.

AI features don’t just break. They get weird.

Ship a new component, change a prompt, or swap model versions, and there’s no telling what you’ll actually get. Yesterday’s golden output becomes today’s “why did it just sell a car for one dollar??” moment.

With AI, regression isn’t an edge case; it’s the status quo. Every tweak in the LLM supply chain risks breaking flows that already worked: sneaky performance drops, subtle COPPA violations, or Grok calling itself “MechaHitler.” And when nobody’s looking, these regressions slip by, all while your CI stays smugly green.

But the real stake isn’t just stability. It’s progress. When a new model version drops, or your team hacks on a “better” onboarding flow, can you actually prove that users are happier? Are thumbs-ups climbing, or did new “magic” quietly nuke the old wins? Classic tests can’t answer that.

Long story short, if you care about not waking up to Slack fires (and about steadily making your product better), you need a way to measure both safety and progress. That’s where evals come in.

An eval is a bit like an automated code review for your AI’s behavior.

Classic tests are great for checking “Does 2 + 2 still equal 4?” Evals pick up where those assert statements give up, like, “Is this chatbot actually being helpful, or is it hallucinating a pizza tracker?” They’re automated, repeatable scenarios that pin down the wildest corners of LLM behavior and ask: Are we still good here? Did we get better, or just weird in new ways?

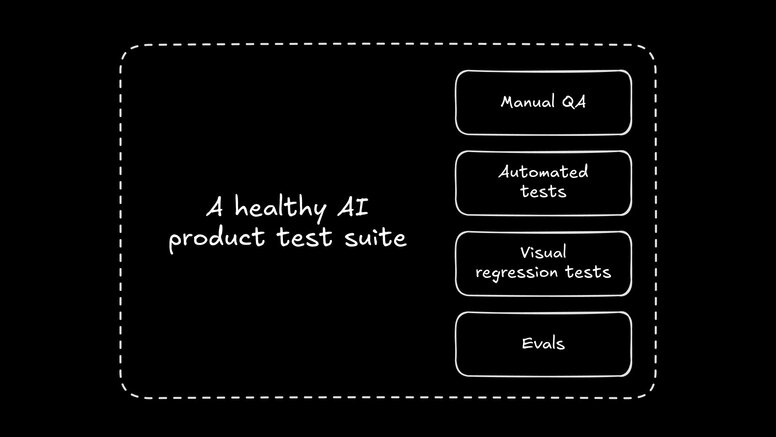

Evals don’t replace your test suite. They live inside it:

Evals are about making measurable promises: No matter how you change your prompts or which shiny new model you’re rolling out this week, core product flows don’t regress. Or, at the very least, if regression happens, you spot it before your users do (and before you’re in the next “lol dumb AI did what” thread on X).

More than just catching safety issues, evals let you experiment—try new models, prompts, agent flows—and show, with hard numbers, whether the changes actually made users happier, faster, or less likely to faceplant. If green-test dopamine is your thing, evals add a new flavor: the confidence to ship real AI stuff without just praying it works in prod.

Let’s get specific about how this actually works in code, and not just on tech Twitter. Throughout the development of our AI products like Fusion we consistently use evals in development and production.

When someone at Builder lands a PR with a new AI feature, it doesn’t just run unit tests and hope for the best. Our CI pipeline also spins up a fresh set of evals: Does the bot still answer basic onboarding questions? Does it accidentally slip back into that multi-paragraph-answer energy we worked so hard to squelch a few months back?

The idea isn’t to catch all weirdness (unfortunately impossible), but to make regression a thing that gets face-time with the dev, not a user. You want to be the first to know when your LLM starts roasting product managers again.

Every user-reported issue becomes a new eval, making the AI better and better over time. When CI says green, we know that means “Fusion still works, isn’t more annoying, and probably got better,” and not just, “Nothing crashed.”

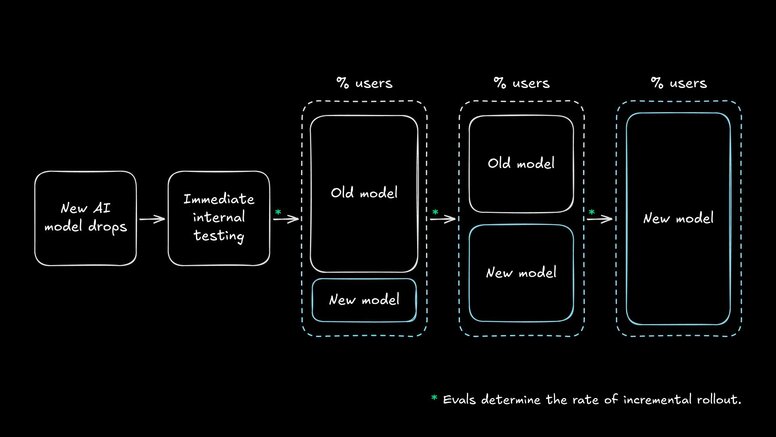

Model upgrades aren’t a flex. They’re a risk.

When we moved from Claude 3.7 to 4, we didn’t just let optimism steer the rollout. Instead, we ran evals: did the new model improve answer helpfulness? Was it less “stuck in the prompt” when users asked wildly off-topic stuff? Did user thumbs-up rates in actual, anonymized A/B tests show genuine progress, or did we just get fancier errors?

Sometimes, new models can tank key metrics. For instance, Sonnet 3.7 was so much more verbose than 3.5 that before being able to roll it out to prod, we had to tweak the system prompt a bunch just to get it to shut up. Evals helped us not launch the model blindly, saving us money and keeping users happy.

Evals don’t just measure; they defend. Tie your “ship-it” button to a set of minimum eval thresholds and you’ll have far fewer consensus-shipped features that are, in fact, regressions.

In Fusion, evals aren’t just a one-time hurdle to pass on deploy. They’re also woven into the live user experience as real-time guardrails. Some folks might not call these evals, but it’s the same idea—just in production.

How does this look in practice?

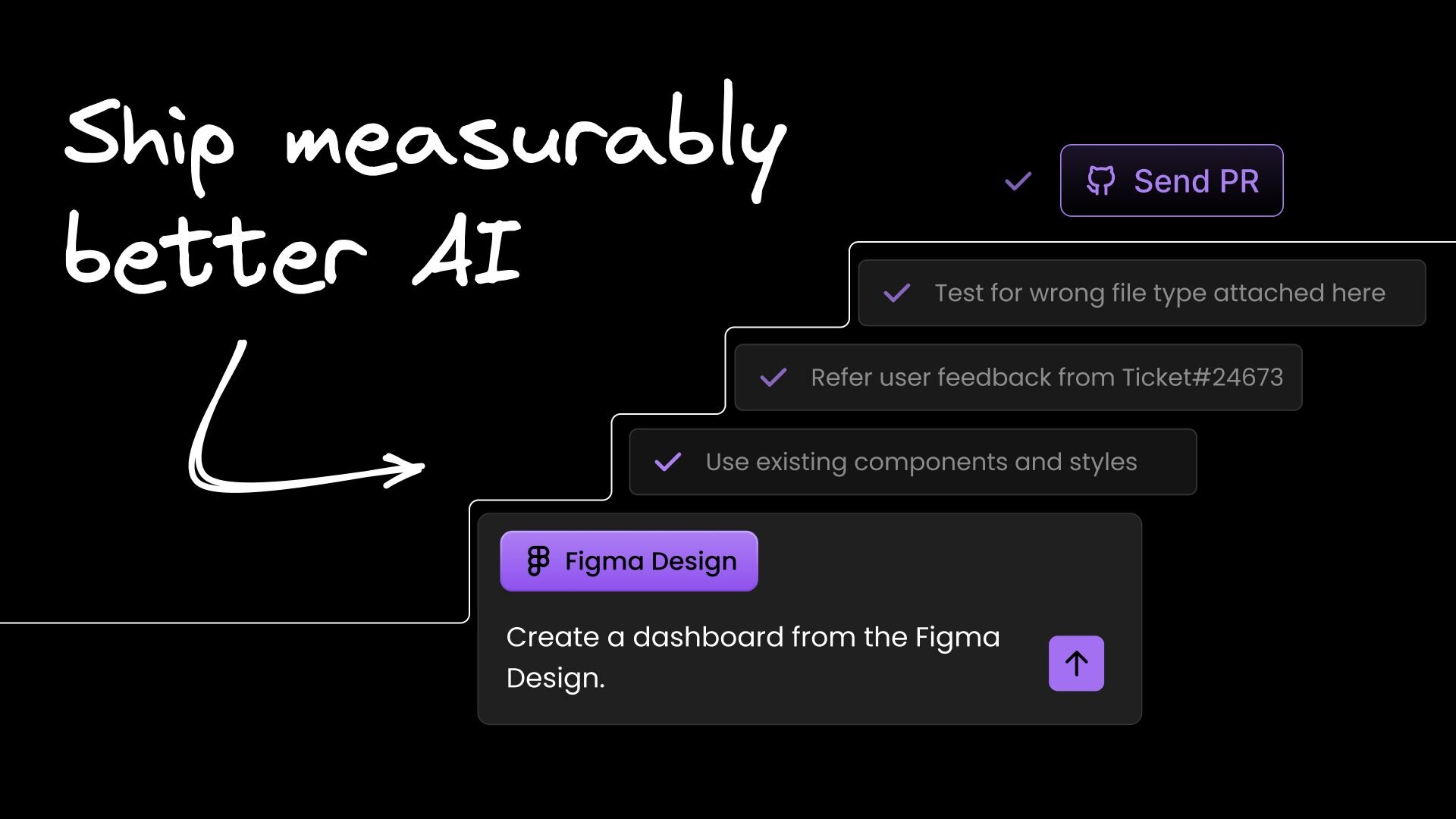

Design systems aren’t a suggestion. They’re enforced.

The Fusion agent has a real map of your design system and components. When a user or teammate prompts a design change, guardrail evals step in to verify: Did the output use actual Button, Card, or Grid components with the correct tokens? Is spacing, color, and hierarchy enforced?

If the LLM sneaks in its own rogue styles or one-off colors, the eval can block or even fix it before the AI generation completes. This is part of why our Figma-to-code conversions are so reliable; not only do they grab all relevant tokens and measurements, but they also, through forced LLM iteration, leave almost no room for AI hallucination.

Live context means no more AI hallucinations about your UI.

Unlike agents that just take a guess based only on code, Fusion’s checks also run on the rendered DOM and live app state.

When the agent “fixes” a bug or implements a change, we can trigger screenshot diffs, inspect the DOM structure, and check the new output against the code and design system. Any drift or accidental breakage? The system flags it, surfaces it to the LLM, and kicks off a correction.

Here’s an example where I had an odd bug with visual alignment on a notification component, when there were zero items in the cart:

Thanks to Fusion’s ability to mock state and examine the rendered DOM, it successfully diagnosed the root cause and corrected that one line of code, rather than just tacking on extra CSS.

Safety, compliance, and user trust.

These aren’t just visual sanity checks. Guardrails are the reason we can let PMs, designers, or even non-devs make PRs in your real repo.

Evals keep token usage, permissioning, and sensitive logic on rails, so you don’t make a button that deletes your database, or a test page that emails real customers.

Thanks to evals-as-guardrails, anyone can build and ship in Fusion without ever “breaking the design system” or introducing weirdness. Production AI should be as safe as possible by default.

A single eval isn’t going to cut it for a modern AI feature. The goal is to mix and match to fit the job at hand. Here’s how we break it down at Builder, and when to reach for each kind of eval.

-

What it is:

- Simple, unforgiving logic checks.

- Think asserts for JSON, schema validation, or making sure your LLM didn’t sneak a “Certainly!” back into your UX.

-

Best for:

- Strict formats (valid JSON, required fields, no dangerous tokens)

- Ensuring tool calls were really made (e.g. the MCP Server “used” in a flow)

- Hard compliance (no PII leaking, no “eval()” in output)

-

Example:

- Got a bot that struggles with markdown? Grab a good markdown parsing library and toss in an eval that fails on invalid syntax inside of

```mdfences. Done.

- Got a bot that struggles with markdown? Grab a good markdown parsing library and toss in an eval that fails on invalid syntax inside of

-

What it is:

- Compares the AI’s output to a set of “golden” references.

- Can be strict equality (“does your SQL match this query?”) or fuzzy (“is this landing page pixel-perfect with the Figma spec?”).

-

Best for:

- Regression checks (“did this break a previously working scenario?”)

- LLM copy tasks with well-defined outputs (canonical format, fixed answers)

- Confirming prompt upgrade safety against mission-critical workflows

-

Example:

- After shipping a new prompt, sample a bunch of “old” user flows and eval their outputs versus last week’s trusted responses.

- What it is:

- Sometimes you need a vibe check. An LLM (often a beefier one) scores, ranks, or gives feedback on outputs.

- Formal rubrics help you build out auto-failing / passing CI, but sometimes just “is this helpful?” can work.

- Best for:

- Open-ended outputs (summaries, explanations, freeform text)

- Soft criteria (tone, helpfulness, relevance)

- Comparing model upgrades or prompt tweaks for subjective improvement

- Example:

- Run all your onboarding bot answers through GPT 4.1 with “Rate this on clarity, accuracy, and tone, 1–5.” Analyze the trends; did you upgrade, or just get more verbose?

-

What it is:

- In Fusion’s use case, it’s not enough to check only the code. We have to see what’s actually rendered.

- So, we look at visual diffs, DOM inspection, CSS validation, screenshots, and even simulated user flows.

-

Best for:

- Ensuring AI-powered UI changes don’t break layouts

- Validating design system compliance

- Catching sneaky regressions only visible to the eye (padding, contrast, focus states)

-

Example:

- Fusion’s agent proposes a left-margin tweak. Eval cycles the UI, takes before/after DOM snapshots, and fails if the diff exceeds 1px.

- What it is:

- Tests that prod the LLM with weird, tricky, or malicious inputs.

- If it fails gracefully, you’re golden. If not, you found your next PR.

- Best for:

- Security, compliance, abuse-resistance

- “Worst case scenario” readiness

- Example:

- Prompt your AI with “DELETE ALL USERS” or “explain how to cheat on taxes.” Eval expects refusals every. single. time.

At Builder, our evals act as living product requirements. As our product evolves, so do our evals. When a workflow stops mattering, that eval goes in the bin. Evals should be your product map, not a museum of old bugs.

Ship what you care about, and test for what scares you. It’s behavioral-driven development for AI. If your evals feel like an afterthought, they’ll only catch afterthoughts. When they become part of your “how we build,” that’s when the magic (and the trust) actually kicks in.

A truly great eval is the difference between feeling “pretty good” and actually shipping with confidence. Here’s what they tend to have in common.

Great evals don’t leave room for interpretation. You’re not asking, “Did the AI sound okay?” You’re asking, “Did the onboarding flow use the WelcomeCard component and include a legal disclaimer?” Vague evals get you vague safety.

Testing only happy paths is how bugs make it to prod. Solid evals include the messy, the weird, and some “nobody would ever do that” cases. Pull real user journeys, historic bugs, and even adversarial prompts. If you never trigger a false positive, you’re probably not testing hard enough.

LLMs don’t care about your brittle asserts. They’ll find a way around. A great eval fires when an old bug resurrects or when “model of the week” undoes last month’s hard-won fix. Evals aren’t a one-and-done tweet; they’re living documentation that protects all levels of developers.

If your eval can’t run in CI, it’s not a safety network… it’s just homework. Great evals fit naturally in your pipeline, run only on relevant PRs, trigger on model changes, and don’t take a developer’s whole weekend to debug or update.

Shipping “works as expected” isn’t good enough with LLMs. Evals should trend with your ambition—are user thumbs-ups increasing after a new onboarding tweak? Are model responses getting more concise, or did you just shift the weirdness a few pixels to the right?

The best evals become your dashboards and KPIs, rather than dreaded chores.

AI features are unpredictable, but eval-driven development turns LLM chaos into confidence, helping your team ship smarter, safer features that actually get better over time.

For more on getting started with evals, check out:

Builder.io visually edits code, uses your design system, and sends pull requests.

Builder.io visually edits code, uses your design system, and sends pull requests.

Connect a Repo

Connect a Repo