What Is an AI Agent in Software Development?

AI agents are showing up in every development tool pitch, but most teams still can't explain what separates an agent from a chatbot or why it matters for their workflow.

This guide explains what AI agents actually do in software development, how they differ from coding assistants like Copilot, and where they create real value versus where they're still mostly hype. You'll learn how agents work, what types exist, and how to evaluate whether your team is ready to adopt them without breaking your existing processes.

What are AI agents?

An AI agent is software that pursues goals on its own. You give it an objective, and it figures out the steps. This is different from traditional automation, which needs every action spelled out in advance.

Think of it this way: a script runs the same sequence every time, but an agent adapts. It observes what's happening, decides what to do next, takes action, and checks the result. Then it repeats until the job is done or it needs your input.

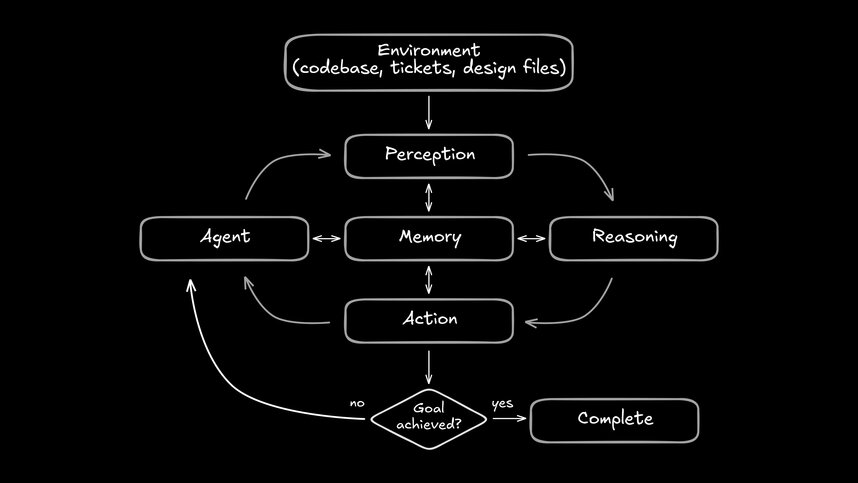

Four components make this work:

- Perception: The agent reads its environment, whether that's a codebase, a ticket, or a design file

- Reasoning: It decides which action moves it closer to the goal

- Action: It executes, whether that means writing code, calling an API, or running a command

- Memory: It remembers context across steps so it can handle long-running tasks
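The four components fit into a short Python sketch of the observe-decide-act loop. This is an illustration under stated assumptions, not how any particular agent is built. `observe`, `decide`, and `act` are hypothetical callbacks. In a real agent, `decide` would wrap a language model call and `act` would invoke real tools.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Memory:
    """Memory: context carried from one step to the next."""
    history: List[str] = field(default_factory=list)


def run_agent(
    goal: str,
    observe: Callable[[], str],
    decide: Callable[[str, str, Memory], Optional[str]],
    act: Callable[[str], str],
    max_steps: int = 10,
) -> Memory:
    memory = Memory()
    for _ in range(max_steps):
        observation = observe()                        # Perception: read the environment
        action = decide(goal, observation, memory)     # Reasoning: pick the next action
        if action is None:                             # Goal reached, or hand control back to a human
            break
        result = act(action)                           # Action: execute it
        memory.history.append(f"{action} -> {result}")  # Memory: record what happened
    return memory


# Toy environment (hypothetical): a test suite with two failing tests
state = {"failing_tests": 2}

def observe() -> str:
    return f"failing_tests={state['failing_tests']}"

def decide(goal: str, observation: str, memory: Memory) -> Optional[str]:
    # Stand-in for an LLM call: keep fixing until nothing fails
    return "fix_one_test" if state["failing_tests"] > 0 else None

def act(action: str) -> str:
    state["failing_tests"] -= 1
    return "ok"

mem = run_agent("make the test suite pass", observe, decide, act)
print(mem.history)  # ['fix_one_test -> ok', 'fix_one_test -> ok']
```

Returning `None` from `decide` models both "done" and "needs your input." The `max_steps` cap keeps a confused agent from looping forever.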

Modern agents are built on large language models, which let them understand natural language instructions. But the agent layer adds something critical: the ability to use tools, maintain state, and orchestrate complex workflows without constant human prompting.

What makes AI agents different from AI coding tools?

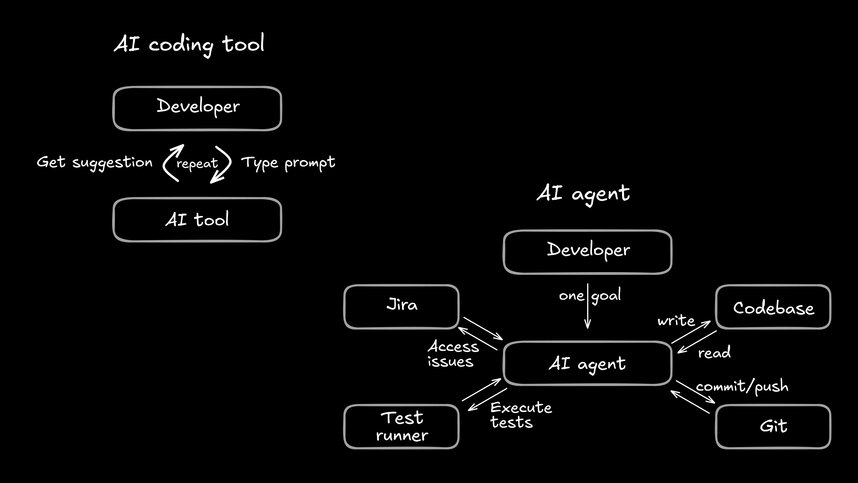

If you used GitHub Copilot in its early days, you know the rhythm: you type, and it suggests the next line. That's helpful for autocomplete, but the tool is fundamentally passive. It waits for you.

AI agents don't wait. They take a goal and execute. They plan multiple steps, call different tools, and iterate until the task is complete.

| Aspect | AI Coding Tools | AI Agents |
|---|---|---|
| Interaction | Responds to each prompt | Executes toward a goal |
| Memory | Forgets between prompts | Retains context across tasks |
| Tool use | Suggests code | Calls APIs, runs commands, opens PRs |
| Planning | None | Breaks goals into steps |

The distinction matters because agents can orchestrate. They might read a Jira ticket, analyze relevant files, generate changes across multiple components, run tests, and open a pull request. A coding assistant would need you to prompt each of those steps one at a time.
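The ticket-to-pull-request workflow can be sketched as a registry of tools that an orchestrator calls in sequence. This is a hypothetical Python sketch. Every tool below is a stub, the names (`read_ticket`, `open_pr`, and the rest) are illustrative, and `PROJ-123` is a made-up ticket. The plan is also hard-coded. A real agent would let the model choose the next tool and would back each stub with the issue tracker's API, git, and your CI system.

```python
from typing import Callable, Dict, List

ToolFn = Callable[[dict], dict]


class ToolRegistry:
    """Maps tool names to functions the orchestrator is allowed to call."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolFn] = {}

    def register(self, name: str) -> Callable[[ToolFn], ToolFn]:
        def decorator(fn: ToolFn) -> ToolFn:
            self._tools[name] = fn
            return fn
        return decorator

    def call(self, name: str, ctx: dict) -> dict:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](ctx)


registry = ToolRegistry()

@registry.register("read_ticket")
def read_ticket(ctx: dict) -> dict:
    return {**ctx, "requirement": "Add a dark-mode toggle"}  # stub for an issue-tracker API call

@registry.register("find_files")
def find_files(ctx: dict) -> dict:
    return {**ctx, "files": ["src/Settings.tsx"]}  # stub for a repository search

@registry.register("edit_files")
def edit_files(ctx: dict) -> dict:
    return {**ctx, "diff": f"changes to {len(ctx['files'])} file(s)"}  # stub for code generation

@registry.register("run_tests")
def run_tests(ctx: dict) -> dict:
    return {**ctx, "tests_passed": True}  # stub for a CI run

@registry.register("open_pr")
def open_pr(ctx: dict) -> dict:
    if not ctx.get("tests_passed"):
        raise RuntimeError("refusing to open a PR with failing tests")
    return {**ctx, "pr": "draft PR opened"}


def orchestrate(plan: List[str], ctx: dict) -> dict:
    # Each step receives everything earlier steps learned
    for step in plan:
        ctx = registry.call(step, ctx)
    return ctx


result = orchestrate(
    ["read_ticket", "find_files", "edit_files", "run_tests", "open_pr"],
    {"ticket": "PROJ-123"},
)
print(result["pr"])  # draft PR opened
```

Passing one context dict from step to step is the simplest way to share state between tools. The guard in `open_pr` shows where a rule like "never open a PR with failing tests" can live.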

How AI agents work in software development

In development, agents follow the same loop: observe the codebase, reason about changes, execute, and evaluate. But the environment is your actual repository, your tickets, and your CI pipeline.

Autonomous decision-making and task execution

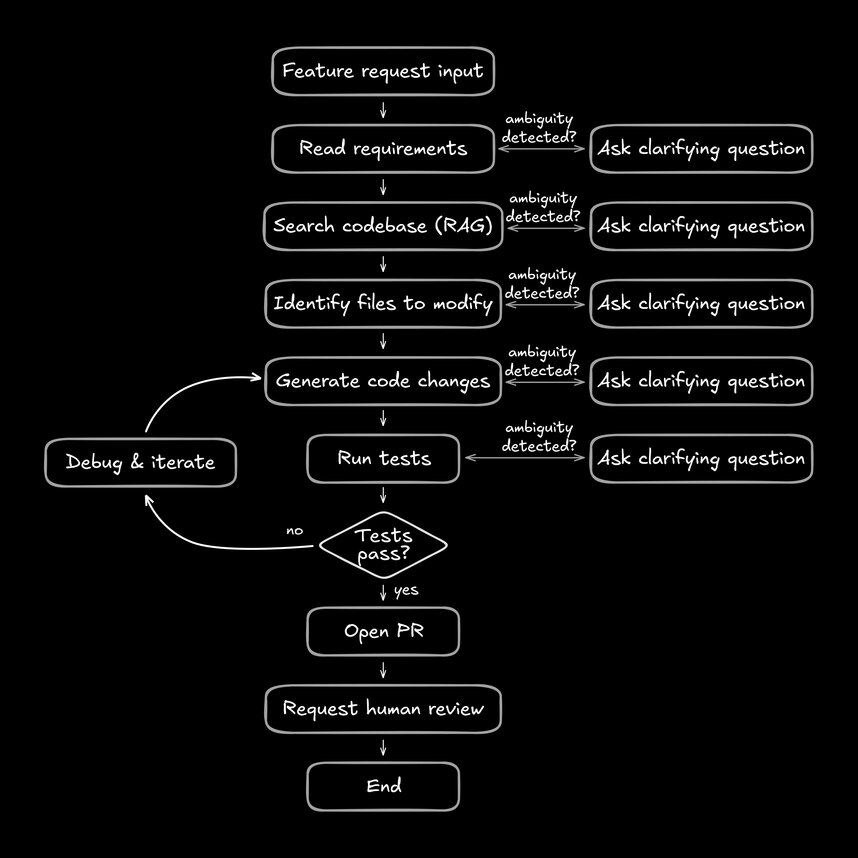

When you tell an agent to implement a feature, it breaks that goal into steps. First it might read the requirements. Then it identifies which files to change. It generates the code, runs the tests, and checks whether the output matches the spec.

If something is ambiguous, a good agent asks. If it can make a reasonable assumption, it does. The goal is progress, not perfection on the first try.

Learning from code patterns and team workflows

Effective agents don't generate code out of thin air. They first study your codebase to understand your conventions, naming patterns, and architecture.

This typically happens through retrieval-augmented generation (RAG): the agent searches your repo for relevant examples before generating anything new. The result looks like code your team would write, rather than generic output that needs heavy editing.
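Here is a minimal sketch of the retrieval step. It uses simple token-overlap ranking in place of the embedding search that production RAG systems typically use. The file paths and contents are hypothetical. The point is the shape of the pipeline: rank existing files against the task, then put the best matches into the prompt ahead of the task.

```python
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Split on non-letters and camelCase boundaries, so "UserCard" yields "user" and "card"
    return [w.lower() for w in re.findall(r"[A-Za-z][a-z0-9]*|[0-9]+", text)]


def score(query: Counter, doc: Counter) -> int:
    # Number of shared tokens: a crude stand-in for embedding similarity
    return sum((query & doc).values())


def retrieve(task: str, repo: Dict[str, str], k: int = 2) -> List[str]:
    q = Counter(tokenize(task))
    scored = [(score(q, Counter(tokenize(text))), path) for path, text in repo.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [path for s, path in scored[:k] if s > 0]


def build_prompt(task: str, repo: Dict[str, str], k: int = 2) -> str:
    examples = "\n\n".join(f"// {p}\n{repo[p]}" for p in retrieve(task, repo, k))
    return f"Follow the conventions in these existing files:\n\n{examples}\n\nTask: {task}"


# Hypothetical repository contents
repo = {
    "src/components/UserCard.tsx": "export function UserCard({ user }) { return <Card title={user.name} /> }",
    "src/components/ProductCard.tsx": "export function ProductCard({ product }) { return <Card title={product.title} /> }",
    "src/utils/date.ts": "export const formatDate = (d: Date) => d.toISOString()",
}
task = "Create a TeamCard component that shows a team name in a Card"
print(retrieve(task, repo))  # ['src/components/UserCard.tsx', 'src/components/ProductCard.tsx']
```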

Integration with development tools and repositories

Agents connect to the tools you already use: Git, your IDE, Jira, Slack, your CI system. They interact through APIs and CLIs, often inside sandboxed environments that limit the damage a mistaken command can do.

This integration separates real agents from demos. If an agent can't push to your actual repo or trigger your actual tests, it's a prototype. Production agents fit into your existing workflow.
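This hedged sketch shows the guardrails around tool execution: an allowlist of programs, no shell interpretation, a fixed working directory, and a timeout. The allowlist contents are hypothetical. This is only the outer layer. Real sandboxing usually also runs the command inside a container or VM with restricted filesystem and network access.

```python
import subprocess
from pathlib import Path
from typing import AbstractSet, Sequence

# Hypothetical allowlist of programs the agent may launch
ALLOWED_PROGRAMS = frozenset({"git", "npm", "npx", "pytest"})


def run_tool(
    argv: Sequence[str],
    workdir: Path,
    allowed: AbstractSet[str] = ALLOWED_PROGRAMS,
    timeout_s: float = 120.0,
) -> "subprocess.CompletedProcess[str]":
    if not argv:
        raise ValueError("empty command")
    program = Path(argv[0]).name
    if program not in allowed:
        raise PermissionError(f"{program!r} is not on the allowlist")
    # No shell=True: the argument list goes to the program as-is,
    # so text like "; rm -rf /" is never interpreted by a shell.
    # subprocess.TimeoutExpired is raised if the command runs too long.
    return subprocess.run(
        list(argv),
        cwd=workdir,
        capture_output=True,
        text=True,
        timeout=timeout_s,
        check=False,
    )
```

With `check=False`, a failing test run comes back as a non-zero `returncode` rather than an exception. The agent can then read `stdout` and `stderr` and decide what to do next.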

Types of AI agents in software development

Different agents handle different parts of the development cycle. Most production systems combine several types.

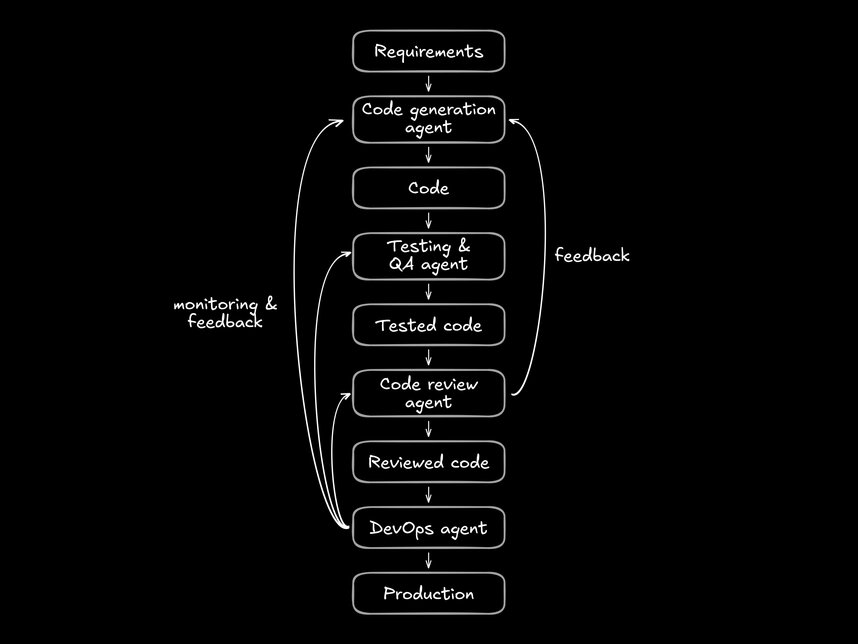

Code generation agents

These translate requirements into working code. They read tickets, design files, or plain descriptions and produce implementation. The best ones understand your design system and component library, using your existing pieces instead of inventing new ones.
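One simple guard an agent can run on its own output is to confirm that every JSX component it used exists in the design system. This sketch assumes a hypothetical set of approved component names. It uses a regular expression, which is only a heuristic; for example, a TypeScript generic like `Array<User>` would be misread as a tag. A real implementation would walk the syntax tree from a TSX parser.

```python
import re
from typing import Iterable, List

# Hypothetical index of components exported by the team's design system
DESIGN_SYSTEM = frozenset({"Button", "Card", "Stack", "TextField"})

# Capitalized JSX tags are components; lowercase tags like <div> are plain HTML.
# Closing tags ("</Card>") don't match because "/" follows the "<".
JSX_COMPONENT = re.compile(r"<([A-Z][A-Za-z0-9]*)\b")


def unapproved_components(generated_tsx: str, approved: Iterable[str] = DESIGN_SYSTEM) -> List[str]:
    allowed = set(approved)
    used = JSX_COMPONENT.findall(generated_tsx)
    # Keep first-seen order and drop duplicates for a readable report
    return list(dict.fromkeys(name for name in used if name not in allowed))


snippet = """
<Stack>
  <Card title="Team">
    <FancyButton onClick={save}>Save</FancyButton>
    <Button variant="primary">Cancel</Button>
  </Card>
</Stack>
"""
print(unapproved_components(snippet))  # ['FancyButton']
```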

Testing and QA agents

Testing agents write test cases, run regression suites, and catch edge cases. They analyze your changes to figure out what needs testing and can perform visual regression checks to spot UI differences.

Code review and optimization agents

Review agents perform static analysis and flag issues before human reviewers see the code. They catch style violations, security vulnerabilities, and performance problems early in the cycle.
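In practice, review agents often build on established linters, but the underlying idea is easy to show. This sketch uses Python's standard `ast` module to flag a few illustrative issues in generated Python code: calls to `eval`/`exec`, bare `except:` clauses, and mutable default arguments. The checks were chosen for brevity and are not a complete rule set.

```python
import ast
from typing import List, Tuple


def review(source: str, filename: str = "<generated>") -> List[Tuple[int, str]]:
    """Return (line, message) findings for a few illustrative rules."""
    findings: List[Tuple[int, str]] = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        # Security: eval()/exec() on dynamic input is a classic injection risk
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name) and node.func.id in {"eval", "exec"}:
            findings.append((node.lineno, f"security: avoid {node.func.id}()"))
        # Bug risk: a bare 'except:' also swallows KeyboardInterrupt and SystemExit
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bug risk: bare 'except:' clause"))
        # Bug risk: mutable default arguments are shared across calls
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for default in node.args.defaults + node.args.kw_defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append((node.lineno, f"bug risk: mutable default in {node.name}()"))
    return sorted(findings)


generated = '''
def load(path, cache={}):
    try:
        return eval(open(path).read())
    except:
        return None
'''

for line, message in review(generated):
    print(f"line {line}: {message}")
```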

DevOps and deployment agents

These automate your CI/CD pipeline, provision infrastructure, and handle incidents. They monitor systems, detect anomalies, and can roll back failed deployments automatically.
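At its core, a deployment agent's rollback decision can be as simple as comparing the new release's error rate with a baseline. The thresholds (`tolerance`, `min_requests`) are hypothetical defaults. The `HealthSample` type stands in for whatever your monitoring system reports.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class HealthSample:
    """Request and error counts for one monitoring window (hypothetical shape)."""
    requests: int
    errors: int


def should_roll_back(
    samples: Sequence[HealthSample],
    baseline_error_rate: float,
    tolerance: float = 2.0,
    min_requests: int = 500,
) -> bool:
    total = sum(s.requests for s in samples)
    if total < min_requests:
        return False  # too little traffic to judge the new release yet
    error_rate = sum(s.errors for s in samples) / total
    # Roll back when errors exceed the baseline by more than the tolerance factor
    return error_rate > baseline_error_rate * tolerance


baseline = 0.01  # 1% error rate before the deploy
print(should_roll_back([HealthSample(400, 10), HealthSample(400, 30)], baseline))  # True: 5% > 2%
print(should_roll_back([HealthSample(1000, 12)], baseline))                       # False: 1.2% <= 2%
```

The `min_requests` guard matters. Without it, a handful of early errors on a tiny sample could trigger a rollback the data doesn't justify.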

AI agent use cases in software development

The practical value of agents comes down to eliminating repetitive work and cutting the time between idea and production.

Automating frontend development workflows

Frontend work involves a lot of assembly: translating designs into components, making layouts responsive, keeping everything consistent with the design system. Agents handle this translation, producing code that uses approved components and follows established patterns.

Accelerating design-to-code translation

The handoff from design to development is where intent gets lost. Agents that understand both Figma and code can preserve fidelity while mapping visual elements to real components.
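Part of design-to-code translation is mapping raw values from a design file onto the design system's tokens. This sketch snaps a hex color to the nearest token by straight-line distance in RGB space. The token names and values are hypothetical. RGB distance is only a rough proxy for perceived difference; perceptual color spaces such as CIELAB do better.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# Hypothetical design tokens; a real setup would load them from the design system
TOKENS: Dict[str, str] = {
    "color.brand.primary": "#1A73E8",
    "color.text.default": "#202124",
    "color.surface": "#FFFFFF",
    "color.danger": "#D93025",
}


def hex_to_rgb(value: str) -> RGB:
    v = value.lstrip("#")
    if len(v) != 6:
        raise ValueError(f"expected #RRGGBB, got {value!r}")
    return (int(v[0:2], 16), int(v[2:4], 16), int(v[4:6], 16))


def nearest_token(design_hex: str, tokens: Dict[str, str] = TOKENS) -> Tuple[str, float]:
    r, g, b = hex_to_rgb(design_hex)

    def distance(token_hex: str) -> float:
        tr, tg, tb = hex_to_rgb(token_hex)
        return ((r - tr) ** 2 + (g - tg) ** 2 + (b - tb) ** 2) ** 0.5

    name = min(tokens, key=lambda t: distance(tokens[t]))
    return name, distance(tokens[name])


print(nearest_token("#1B74E9"))  # ('color.brand.primary', 1.7320508075688772)
```

Returning the distance alongside the token lets the agent flag a large mismatch for a designer instead of silently snapping an off-palette color to the nearest token.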

Streamlining code reviews and testing

Agents provide initial review feedback on style, security, and performance before humans look at the code. They generate tests based on requirements and flag gaps in coverage, catching problems earlier.

Managing deployment and CI/CD pipelines

Deployment agents coordinate rollouts, manage feature flags, and scale infrastructure. When something breaks, they help diagnose the issue and, in some setups, roll back or apply a fix automatically, reducing downtime.
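Percentage rollouts behind a feature flag usually rely on deterministic bucketing, so a given user sees the same variant on every request. Here is a minimal sketch with a hypothetical flag name. It hashes the flag and user ID together and compares the resulting bucket (0 to 9,999) with the rollout percentage expressed in basis points.

```python
import hashlib


def in_rollout(flag: str, user_id: str, percent: float) -> bool:
    """Deterministically decide whether user_id gets the flagged feature."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    # Integer bucket in basis points (0..9999); integer math avoids float rounding at 100%
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100


print(in_rollout("new-checkout", "user-42", 25))
```

Each user's bucket is fixed, so raising the percentage from 5 to 25 to 100 only ever adds users. Nobody who already has the feature loses it mid-rollout.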

Benefits of AI agents for development teams

The payoff from agents is eliminating waiting time and repetitive work across the entire cycle.

Faster development cycles and reduced time-to-market

Agents remove the dead time between handoffs. Instead of waiting for specs, then waiting for implementation, then waiting for QA, agents execute steps in rapid sequence.

Improved code quality and consistency

When agents use indexed design systems and follow established patterns, output stays consistent. This prevents the drift that happens when different developers interpret the same requirement differently.

Reduced manual work and context switching

Developers lose hours to context switching. Agents take over routine work, freeing engineers to focus on architecture and the complex problems that actually need human judgment. In one survey, 70% of agent users reported spending less time on specific development tasks.

Challenges of implementing AI agents in software development

Adopting agents comes with real friction. Understanding the tradeoffs helps you plan realistic implementations.

Integration with existing development workflows

Most teams have established processes and approval workflows. Agents need to fit into these systems rather than replace them wholesale. Start with bounded tasks and expand as trust builds.

Code quality and review requirements

AI-generated code still needs human review. Treat the output as a draft, not a final product. Teams that skip validation will run into quality issues.

Security and data privacy considerations

Agents that access codebases need proper security controls, audit trails, and compliance certifications such as SOC 2, which verifies that a company's security practices are tested and proven effective. These controls are critical because trust is still low: 87% of developers have concerns about accuracy, and 81% have concerns about the security and privacy of their data. Not every platform meets these standards.
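Audit trails are more useful when they are tamper-evident. A common technique is a hash chain: each entry stores the hash of the previous one, so editing any past entry breaks verification. The sketch is illustrative only, and the actor and action names are hypothetical. In practice, the latest hash would be kept somewhere the agent cannot write to.

```python
import hashlib
import json
import time
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(entry: Dict[str, Any]) -> str:
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, actor: str, action: str, target: str) -> Dict[str, Any]:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {"ts": time.time(), "actor": actor, "action": action, "target": target, "prev": prev}
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            # Both the link to the previous entry and the entry's own hash must hold
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("agent:frontend", "edit_file", "src/Settings.tsx")
log.record("agent:frontend", "open_pr", "feature/dark-mode")
print(log.verify())  # True
```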

Best practices for working with AI agents

Successful adoption follows predictable patterns. Start small, maintain oversight, and connect to real systems.

Start with well-defined tasks and clear constraints

Begin with bounded problems: generating a specific component, writing tests for a module, updating documentation. Expand responsibilities as the agent proves reliable.
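Clear constraints can be enforced in code as well as in the prompt. This sketch restricts which directories an agent may write to, and the directory names are hypothetical. Resolving the path first means `..` segments (and existing symlinks) cannot be used to escape the allowed scope.

```python
from pathlib import Path
from typing import Iterable


def check_write_allowed(repo_root: Path, target: str, allowed_dirs: Iterable[str]) -> Path:
    """Return the resolved target path if it lies inside an allowed directory."""
    root = repo_root.resolve()
    path = (root / target).resolve()  # collapses ".." and follows existing symlinks
    for d in allowed_dirs:
        scope = (root / d).resolve()
        if path == scope or scope in path.parents:
            return path
    raise PermissionError(f"{target!r} is outside the agent's allowed scope")


# Hypothetical scope for a "build one component and document it" task
SCOPE = ["src/components", "docs"]
```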

Maintain human oversight and code review processes

Keep humans in the loop. Agents suggest, humans approve. This builds confidence while maintaining quality. Treat agent output as a starting point.

Connect agents to real repositories and design systems

Agents produce better output when they work with your actual codebase and components, because the code they generate then fits your existing architecture.

The future of AI agents in software development

The direction is clear: agents will handle more implementation work while humans focus on judgment, strategy, and hard problems. The handoff era, where designers mock up, PMs spec, and engineers translate, will be replaced by shared execution. Everyone works in the same system, contributing directly to code instead of passing artifacts across functional boundaries.

Platforms like Builder.io show what this looks like in practice, acting as an AI frontend engineer that works directly in your repository with your real components. This allows designers and PMs to contribute production-ready UI while engineering standards stay in place, resulting in faster cycles without the rework.

If your team is ready to move from handoffs to shared execution, sign up for Builder.io and see how agents fit into your workflow.

Common questions about AI agents in development

Can AI agents replace frontend developers?

Agents take on repetitive implementation work, which is already a large share of the total: 41% of all code is now AI-generated or AI-assisted. Developers stay focused on architecture, complex logic, and decisions that require judgment. The goal is to amplify your team.

How do AI agents maintain design system consistency?

Agents reference your indexed design system directly, using only approved components and tokens rather than generating arbitrary styles. As a result, output follows your established patterns without manual enforcement.

What separates AI agents from GitHub Copilot?

Copilot's original autocomplete offers inline completions within the file you're editing. Agents orchestrate entire workflows across multiple files, tools, and services to achieve broader goals. They plan, execute, and iterate rather than just suggesting snippets.