I love Qwik. It’s no secret, and I'm not just saying that because it's built by the company I work for. It’s because I’ve felt the pain of the problems it solves. And I’m not the only one.

Just a little while ago, Tejas Kumar, a well-known web developer & speaker, released his first impressions of Qwik in this video:

He starts by telling a story of way back in the day when he and his colleague thought about ways to make web applications faster. In a later part of the video, he explains some of the hard work it takes to attempt this sort of endeavor.

I am no stranger to being in that place either. Back when I was working on an SEO-focused web app, we also attempted to “make it faster”. There was a lot of code juggling that needed to be done in order to see any sort of improvement.

It’s a painful process…and many other devs have probably felt it as well.

You might be wondering what we needed to do and how we went about it, but we’ll get to that later. First, we need to understand what slows apps down.

Long story short, web apps are slow because we ship way too much JavaScript.

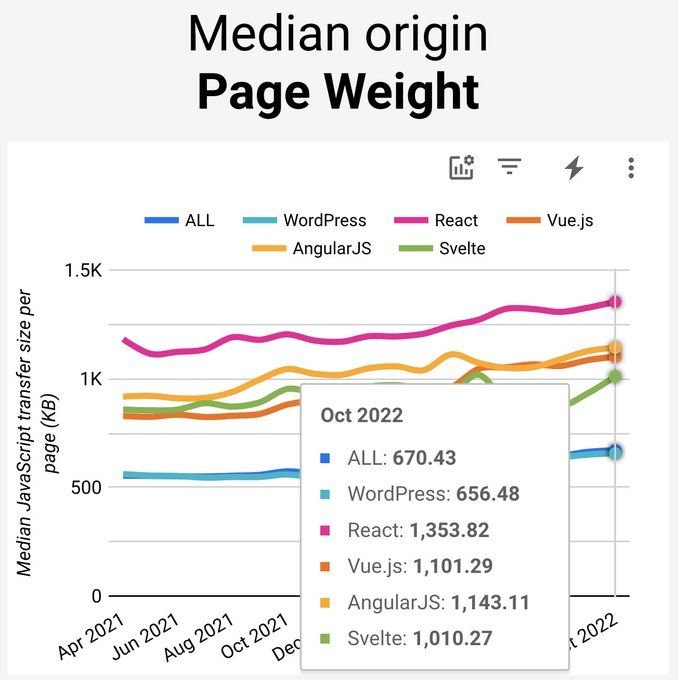

Lately, even Dan Shappir (performance tech lead at Next Insurance and co-host of JS Jabber) showed us the median origin page weight by framework over the past year:

Let's take a moment to dwell on that. Why does a lot of JS make a web app slow?

There are a few steps in the way:

- Download

- Parse

- Execute

Each of these takes time, which means users need to wait. That’s not a good experience for anyone. Especially when you pay double or triple the cost if your internet is shoddy and your device isn’t the latest and greatest.
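To make those three steps a bit more concrete, here's a rough back-of-the-envelope sketch. The `estimateStartupMs` helper and every rate in the two device profiles are made-up assumptions for illustration, not measurements.

```typescript
// Rough model: startup cost = download + parse + execute.
// All rates below are illustrative assumptions, not measurements.
interface DeviceProfile {
  bandwidthKBps: number; // network throughput in KB per second
  parseMsPerKB: number; // CPU time to parse 1 KB of JS
  executeMsPerKB: number; // CPU time to execute 1 KB of JS
}

function estimateStartupMs(bundleKB: number, device: DeviceProfile): number {
  const downloadMs = (bundleKB * 1000) / device.bandwidthKBps;
  const parseMs = bundleKB * device.parseMsPerKB;
  const executeMs = bundleKB * device.executeMsPerKB;
  return downloadMs + parseMs + executeMs;
}

const fastLaptop: DeviceProfile = { bandwidthKBps: 5000, parseMsPerKB: 0.25, executeMsPerKB: 0.5 };
const budgetPhone: DeviceProfile = { bandwidthKBps: 500, parseMsPerKB: 1, executeMsPerKB: 2 };

console.log(estimateStartupMs(500, fastLaptop)); // 475
console.log(estimateStartupMs(500, budgetPhone)); // 2500
```

With these assumed numbers, the same 500 KB bundle costs roughly five times more on the slower device, because every step scales with bundle size.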

When you don’t have a good user experience you abandon ship, whether it’s your shopping cart, that sign-up flow, or a post you’re reading. That business can forget about those conversions — 💰 down the toilet.

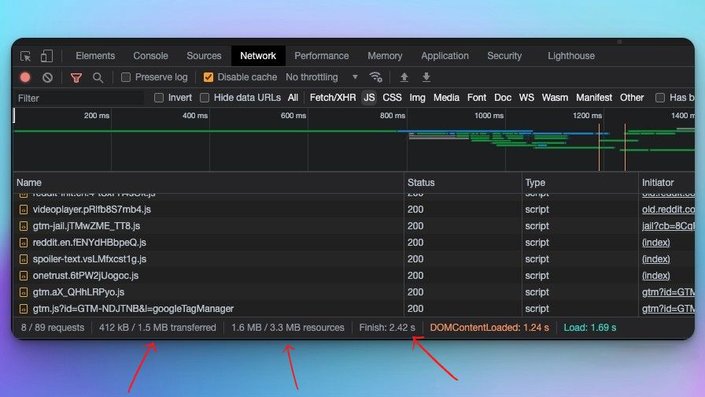

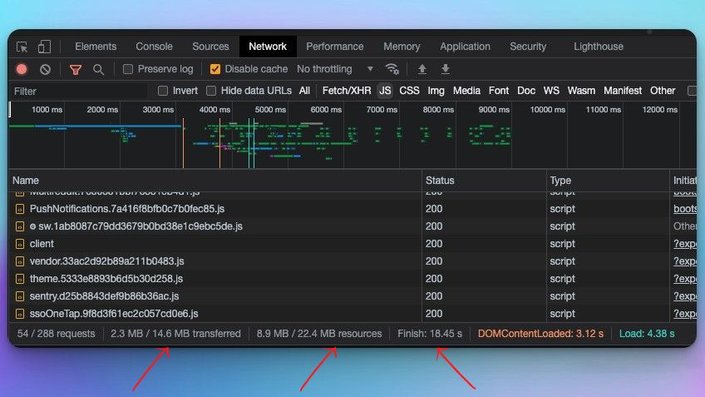

Things used to be lighter, as Tejas also demonstrated in his video when he showed the current Reddit website compared to the “old Reddit”.

Here we can see the total JS over the network for the “old Reddit”:

Here is the same for the “new Reddit":

It takes forever, and that’s without doing anything. JavaScript is for adding interactivity. Yet we get a ton of it without any interaction.

There are a few reasons I can think of:

- It has never been easier to npm install (or yarn add) your problems away, whether it’s a library to format dates or some animation library.

- Third-party scripts like analytics, chat, or whatever your marketing team stuck inside GTM.

- First-party code.

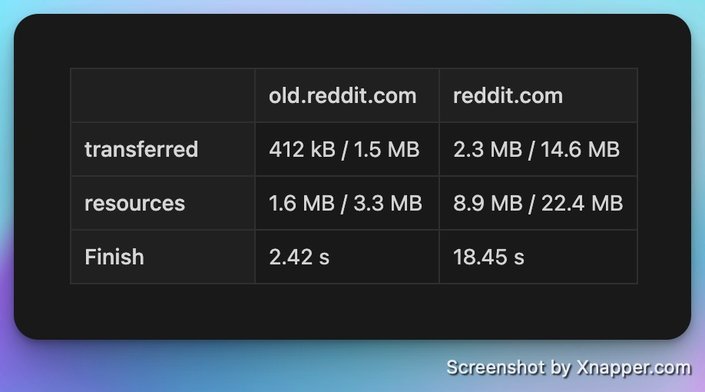

As developer experience improved, our JS bundle sizes grew, and they have been growing for the past decade or so, as this graph displays:

But why is it steadily growing? I would say it’s due to product requirements and user expectations for rich interactive experiences. The more features we add, the more code we generate, the more JavaScript we ship.

Furthermore, there is somewhat of a correlation between DX (developer experience) and UX (user experience). To better understand this, we can look at this slide from a recent talk that Shai Reznik (international speaker and Founder of HiRez.io) gave:

UX/DX correlation over time by framework generation (slide courtesy of Shai Reznik).

This graph shows that whenever we improved the DX, we degraded the UX.

First, we had static pages that easily served HTML documents on demand.

Thereafter, servers could inject data into HTML templates that hit the database (DB) to create a personalized experience and send that response back to the browser — and load times increased.

Then we had JavaScript sprinkles in the form of jQuery, da OG DOM manipulator.

Later came the age of Rich Internet Applications (RIA), popularized by tech such as Adobe Flash and Microsoft Silverlight. These are buried deep in our collective mind because Steve Jobs decided to basically kill them.

After that came the second half of gen 2 web apps with the birth of SPAs (Single Page Applications), which were led by frameworks such as Backbone, Knockout, Angular, React, Vue, and so on.

This came with the rise of Node.js and npm, which saved devs time, as they could install packages easily. However, that increased bundle size, which in turn increased TTI.

According to Shai Reznik, we’re now at gen 2.5, though Shawn @swyx Wang (writer, speaker, and DevRel) might have a different definition. Kent C. Dodds also has one of his own, which kind of combines the best of static pages and dynamic server-side rendered pages with a SPA experience on the client.

However, the caveat of current-generation frameworks is that as the app grows, so does the JavaScript bundle, and in turn the time to interactive (TTI).

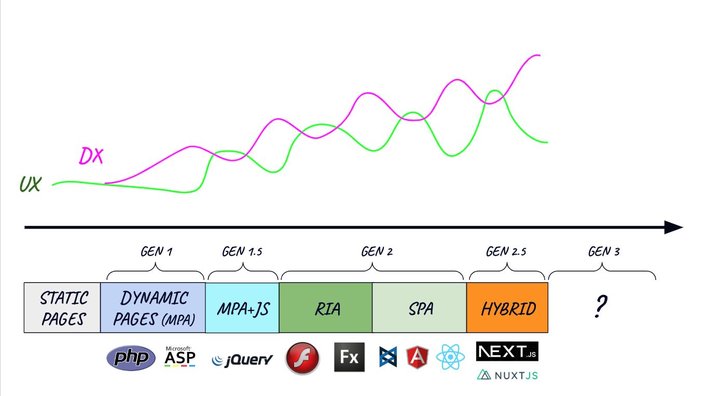

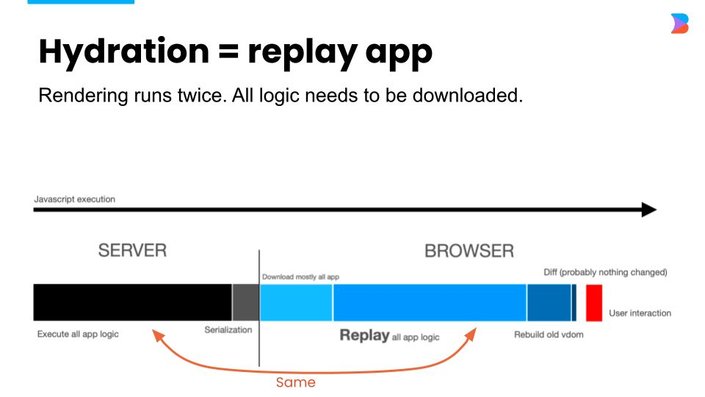

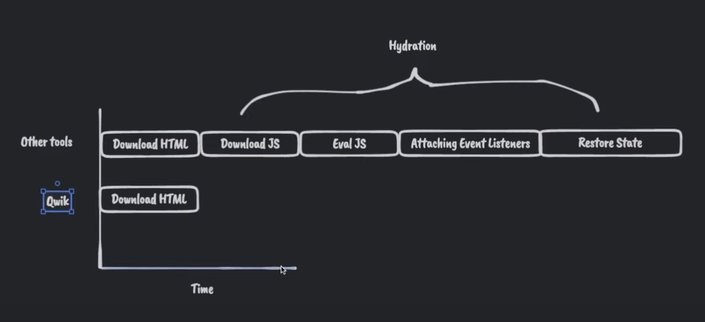

The main reason for this is hydration, which is the process of attaching behavior to declarative content to make it interactive.

I’m not going to dwell on what hydration is, as it’s been covered well by Miško Hevery in Hydration is Pure Overhead.

One possible solution to improve TTI on a web app is code splitting. It is a technique in which a large codebase is divided into smaller, more manageable chunks or modules.

This can improve the performance of an application by reducing the amount of code that needs to be loaded at once and allowing different parts of the code to be loaded on demand, as needed.

Another would be lazy loading. JavaScript lazy loading is a technique that defers the loading of JavaScript resources until they are needed, potentially improving web app performance.
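Here's a minimal sketch of what manual lazy loading tends to look like. The `lazyOnce` helper and the date picker are hypothetical, and the loader is a stand-in; in a real app it would be a dynamic `import()` so the bundler emits a separate chunk.

```typescript
// Wrap a loader so the underlying code is fetched at most once,
// and only when something actually asks for it.
function lazyOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let cached: Promise<T> | undefined;
  return () => {
    if (cached === undefined) {
      cached = loader(); // first call triggers the load
    }
    return cached; // later calls reuse the same promise
  };
}

// Stand-in for `() => import('./date-picker')` (hypothetical module).
let loadCount = 0;
const loadDatePicker = lazyOnce(async () => {
  loadCount++;
  return { show: () => 'date picker opened' };
});

// Nothing is loaded at startup; the load happens on first use.
async function onCalendarClick(): Promise<string> {
  const datePicker = await loadDatePicker();
  return datePicker.show();
}
```

Even in this tiny version you have to decide where the split points go, how to cache the result, and what to do if the load fails, and that is before bundler configuration enters the picture.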

However, these methods are manual and tedious. As Tejas states in his video, this is something he and his co-worker initially thought to do when they tried to improve performance.

I’ve also tried this method myself once. It’s really easy to screw it up. You have to take a lot of things into account and play around with chunks and caching.

Furthermore, you’d have to think about what happens if there’s a network connection error; what should we do then? Do we prefetch? Do we use a service worker?

Besides, hydration can negate much of this hard work, as the bootstrap phase still has to run, and that includes eagerly loading all those lazy JS chunks.

It’s like playing a video game — you progress on a level and collect loot, but then you accidentally die and have to restart the level from square one.

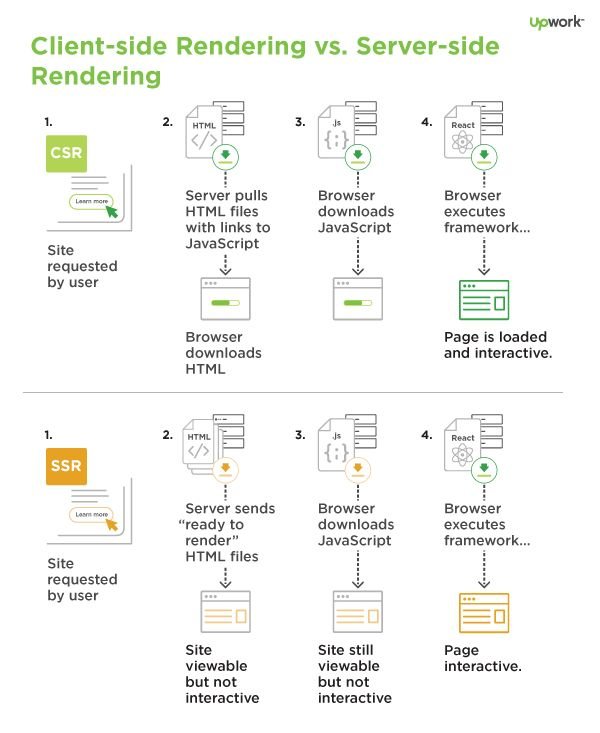

For a site to be interactive in traditional frameworks, it must have event listeners attached to the DOM: click, mouse move, and other events that trigger when a user performs the action. There are two problems with this:

- The framework has to know where the event handlers need to be attached.

- The framework needs to know what should happen when the event is triggered.

To figure out where the events should be attached, the framework must download all visible components and execute their templates. By executing the templates, the framework learns the location of the events and can register the events with the DOM.

This brings us to the second point. What should happen if the event is triggered? It requires the code for the event handler to be present.

It does not matter if the user interacts with a menu or not. The code still needs to be eagerly loaded. And therein lies the problem.

Hydration forces eager download and execution of the code. It’s just how frameworks work.

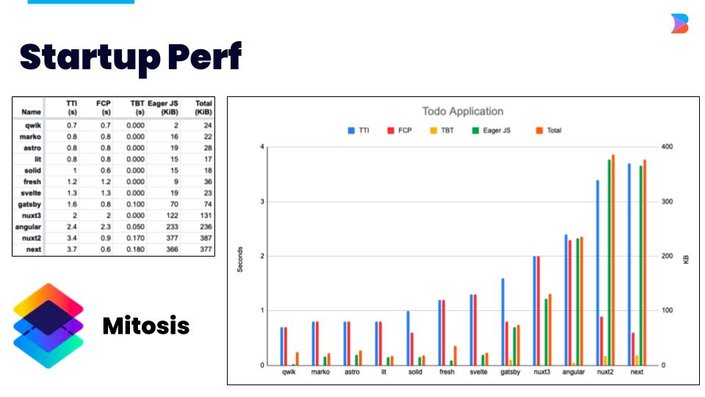

With the realization that site startup performance does matter, the industry has started taking strides in the right direction.

React 18 and server components promise to deliver less JavaScript to the browser by sending only HTML to the client when there’s no interaction.

Next.js 13 is in effect the only framework that currently supports RSC as well as many more optimizations that improve performance. This is definitely an improvement, but it still requires the developer to think about it on a component-by-component basis.

Astro has a different approach with their “islands architecture”:

“Astro Islands represent a leading paradigm shift for frontend web architecture. Astro extracts your UI into smaller, isolated components on the page. Unused JavaScript is replaced with lightweight HTML, guaranteeing faster loads and time-to-interactive (TTI).” —Astro website

This is pretty awesome, as it also allows you to write components in almost any frontend framework you like. You can make an island interactive on a case-by-case basis and with specific directives to control hydration, which they call partial hydration.

I myself use Astro for my blog, and I’m really enjoying it. Even though I thought I’d write different components in different frameworks, I ended up mostly writing Astro components. 😅

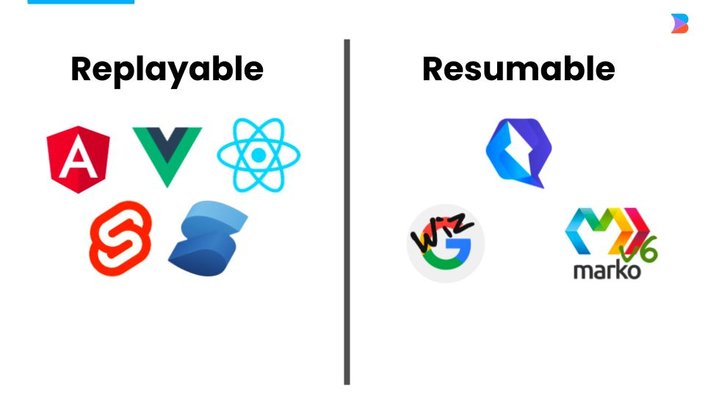

Again, this is great, but there’s a caveat: once hydration is triggered for an island, in any framework, its JS is once again loaded eagerly.

Marko is a huge leap in the right direction. It has streaming, partial hydration, a compiler that optimizes your output, and a small runtime. I’ve also heard through the grapevine that Marko V6 adds resumability to the framework as well.

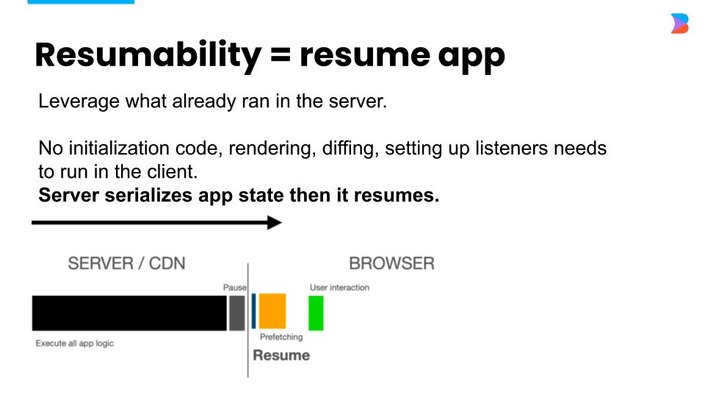

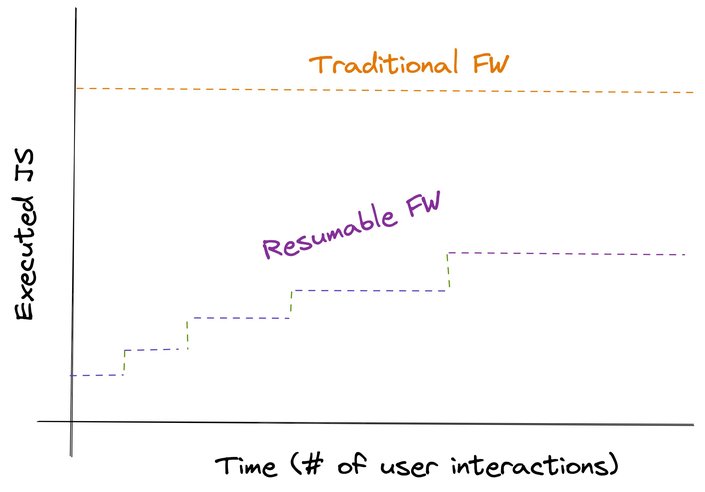

In an ideal world, no JavaScript gets downloaded and executed until the user interacts with a particular part of the page. If the user clicks the "buy" button, then the framework should bring in only the code which is necessary for processing the "buy" button; it should not have to bring the code associated with the "menu".

The problem is not that there is too much JavaScript; rather, it’s that the JavaScript must be eagerly downloaded and executed. (As I’ve mentioned, many existing systems have lazy loading, but only for components not currently in the render tree.)

In a nutshell, with hydration we run our code on the server and then on the client. Resumability means running the app once, pausing execution, and then resuming where we left off, just on the client.
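As a toy model of the difference, we can count how many times component code runs on the client. The `hydrate` and `resume` functions here are hypothetical simplifications I made up for illustration; they are not how any real framework is implemented.

```typescript
// Toy model: count how often component code runs on the client.
type ToyComponent = { id: string; render: () => string };

let clientExecutions = 0;
const components: ToyComponent[] = ['header', 'menu', 'cart', 'footer'].map((id) => ({
  id,
  render: () => {
    clientExecutions++;
    return `<section>${id}</section>`;
  },
}));

// Hydration: the client re-runs every component to rebuild what the
// server already computed.
function hydrate(tree: ToyComponent[]): void {
  tree.forEach((component) => component.render());
}

// Resumability (simplified): the client runs only the code for the part
// of the page the user actually touched.
function resume(tree: ToyComponent[], interactedWith: string): void {
  tree.filter((component) => component.id === interactedWith).forEach((component) => component.render());
}

hydrate(components);
const afterHydrate = clientExecutions; // 4
clientExecutions = 0;
resume(components, 'cart');
const afterResume = clientExecutions; // 1
```

In this sketch the hydration cost grows with the number of components on the page, while the resumable path only pays for what was interacted with.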

It’s kind of like how VMs work. A virtual machine can run an app, say a text editor, on an operating system and then be stopped, moved to another machine, and then resumed.

Resumable frameworks are not new. For almost a decade, Google has been using Wiz (an internal framework at Google), which powers Google Search and Photos. Marko, a framework from eBay, is promising resumability in their next version.

But we’re not here to talk about other frameworks. We’re here to talk about one framework in particular: Qwik, the first open source framework whose primary focus is resumability.

Qwik takes a new approach to the problem space. It’s a paradigm shift in the way we’re used to serving JS to the browser.

Let’s talk about that for a second. The JS required for most frameworks to boot up is something like 100KB-200KB (un-minified, not Gzipped). Even if you do code split and lazy load, it starts off from that starting point, and then more JS gets loaded.

Qwik loads JavaScript on demand. It starts with a constant of 1KB of JS until there’s a user interaction. To put it into more “computer sciencey” terms, most frameworks are O(n), while Qwik is O(1).
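A tiny sketch of that O(n) versus O(1) idea, using the rough figures above. The per-component size of 5 KB and the helper names are assumptions I picked for illustration.

```typescript
// Illustrative sizes in KB. The runtime and loader figures follow the
// rough numbers in the text; the per-component size is an assumption.
const FRAMEWORK_RUNTIME_KB = 100;
const LOADER_KB = 1;

// Eager startup: runtime plus every component's code, so it grows with the app.
function eagerStartupKB(componentSizesKB: number[]): number {
  return componentSizesKB.reduce((sum, kb) => sum + kb, FRAMEWORK_RUNTIME_KB);
}

// On-demand startup: a constant-size loader, regardless of app size.
function onDemandStartupKB(_componentSizesKB: number[]): number {
  return LOADER_KB;
}

const smallApp = new Array<number>(10).fill(5);
const largeApp = new Array<number>(1000).fill(5);

console.log(eagerStartupKB(smallApp), eagerStartupKB(largeApp)); // 150 5100
console.log(onDemandStartupKB(smallApp), onDemandStartupKB(largeApp)); // 1 1
```

The eager number climbs with every component you add, while the on-demand number stays flat until the user actually does something.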

If you don’t add interactions, there’s no need to load more JavaScript.

Rendering performance is important, but time to interactive matters more when we look at real user experiences.

Resumability solves the time-to-interactive (TTI) problem because there is nothing for the framework to do on startup (outside of setting up global listeners). The application is ready instantly.
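To picture what "setting up global listeners" could mean, here is a simplified model that uses plain objects instead of the real DOM. The `on:click` attribute name, the chunk names, and all helpers are hypothetical, and the chunk loading is synchronous only to keep the sketch short; it is not Qwik's actual loader.

```typescript
// Toy model of a single global listener. No per-element listeners are
// attached at startup; the handler reference is read from the element
// (as serialized text) only when an event actually fires.
type FakeElement = { attrs: Record<string, string>; parent?: FakeElement };
type ToyHandler = () => string;

// Stand-in for lazily loaded chunks; a real setup would use import().
const chunkLoads: string[] = [];
const chunks: Record<string, () => ToyHandler> = {
  'buy.js': () => () => 'added to cart',
  'menu.js': () => () => 'menu opened',
};

function loadHandler(chunk: string): ToyHandler {
  const factory = chunks[chunk];
  if (factory === undefined) throw new Error(`unknown chunk: ${chunk}`);
  chunkLoads.push(chunk); // record which chunks were actually needed
  return factory();
}

// The one listener registered at startup: walk up from the event target
// until an element declares a handler for this event type.
function globalListener(eventType: string, target: FakeElement): string | undefined {
  for (let el: FakeElement | undefined = target; el !== undefined; el = el.parent) {
    const chunk = el.attrs[`on:${eventType}`];
    if (chunk !== undefined) return loadHandler(chunk)();
  }
  return undefined;
}

const buyButton: FakeElement = { attrs: { 'on:click': 'buy.js' } };
const icon: FakeElement = { attrs: {}, parent: buyButton };
const result = globalListener('click', icon);
```

Clicking the icon inside the buy button loads only the buy chunk; the menu chunk is never touched because nobody interacted with the menu.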

In contrast to other frameworks, Qwik considers bundling, serialization, and prefetching to be core competencies and has a holistic approach to fast startup performance. This allows it to be resumable.

Let’s see it in action!

Let’s see how this looks with some code:

```tsx
import { component$, useSignal } from '@builder.io/qwik';
import type { DocumentHead } from '@builder.io/qwik-city';

export default component$(() => {
  // Reactive state holder; the current value lives in count.value.
  const count = useSignal(0);

  // onClick$ (note the $) marks the handler as a lazy-loadable boundary.
  return (
    <div>
      <h1>
        Counter is {count.value} <span class='lightning'>⚡️</span>
      </h1>
      <button onClick$={() => count.value++}>Increment</button>
    </div>
  );
});

// Page metadata (title and meta tags) for this route.
export const head: DocumentHead = {
  title: 'Welcome to Qwik Demo',
  meta: [
    {
      name: 'description',
      content: 'Qwik site description',
    },
  ],
};
```

This is the result:

For demo purposes, I’m not going to go into how to start a Qwik project. For more info on getting started with Qwik, check out the Qwik docs or our Stackblitz Qwik Starter.

Tip: For the code for this demo, check out the Qase for Qwik GitHub repo.

What we’ve done is create a simple counter component inside a QwikCity project, which is the meta-framework for Qwik. Basically, it’s like SvelteKit for Svelte, or SolidStart for Solid. It is generally in charge of routing and the build.

If you're coming from React (or Solid), you can see that a lot of the above looks familiar. As Qwik uses JSX, you should feel right at home. A signal is a reactive piece of state, similar to Solid.js signals: an object with a single property (signal.value) that, when changed, updates any component that uses it. Similarly, useStore works almost like a signal but accepts an object as a parameter, which then becomes reactive.

The second thing to notice is the $ sign after component$ and onClick$, which is a symbol in Qwik. These are hints for the optimizer to break our code into pieces that can later be loaded intelligently. It’s important to note that only serializable data can be used in a lazy-loaded boundary.
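To get a feel for what "serializable" means here, this is a deliberately strict toy check for plain, JSON-like data. `isPlainSerializable` is a hypothetical helper of my own; Qwik's rules are defined by its own serializer and may well accept more types than this sketch does.

```typescript
// Toy check: is this value made only of plain, JSON-like data?
// Stricter than a real serializer needs to be; for illustration only.
function isPlainSerializable(value: unknown, seen: Set<object> = new Set()): boolean {
  if (typeof value === 'string' || typeof value === 'boolean') return true;
  if (typeof value === 'number') return Number.isFinite(value);
  if (typeof value !== 'object') return false; // functions, symbols, undefined, bigint
  if (value === null) return true;
  if (seen.has(value)) return false; // circular reference on the current path
  const proto = Object.getPrototypeOf(value);
  const isPlain = Array.isArray(value) || proto === Object.prototype || proto === null;
  if (!isPlain) return false; // class instances are rejected by this toy check
  seen.add(value);
  const ok = Object.values(value).every((item) => isPlainSerializable(item, seen));
  seen.delete(value); // allow the same object to appear again elsewhere
  return ok;
}

console.log(isPlainSerializable({ count: 0, tags: ['a', 'b'] })); // true
console.log(isPlainSerializable({ onClick: () => 0 })); // false
```

The intuition is that state has to survive a trip through text, so plain data travels fine while something like an ordinary closure does not.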

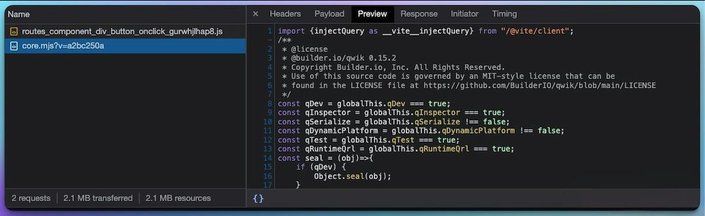

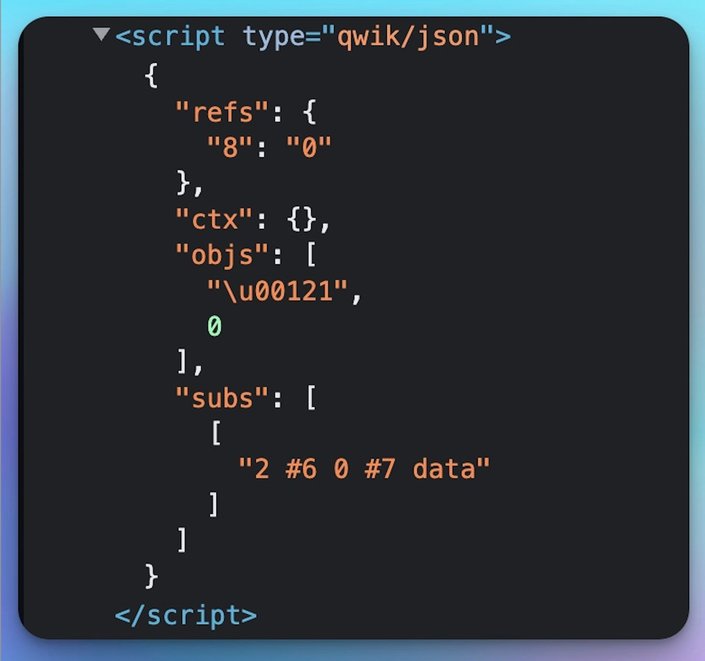

If we go back to our counter example, devtools shows this in action:

What’s happening there?

While on the Network pane of devtools, I’ve chosen to show only JS requests. At first, it shows two files: client and env.mjs. Those are files from Vite, our dev server, which also powers Qwik and QwikCity. To hide those files so we can get to the actual JS that a production build would serve, we can filter any path that has Vite in it. After I’ve hidden all Vite-related files, I hit refresh again (with cmd + R), then…no JavaScript is downloaded!! 🤯

Then, when I go on to click the Increment button, the real magic happens! Two files get downloaded, and the button actually works and increments our count. The beauty of it is that now there’s no need to load that JS again! Qwik has divided the code into separate files, which can be loaded as needed.

Let's review what these files are:

core.mjs?v=a2bc250a: this file is the main Qwik core runtime script from the dev server (unzipped). Breaking it down is beyond the scope of this post.

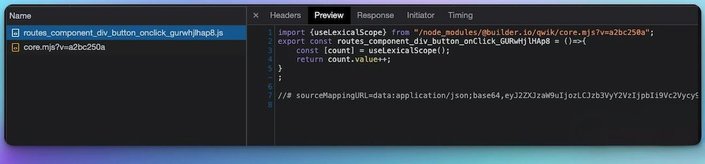

routes_component_div_button_onclick_gurwhjlhap8.js: this file is what actually handles our signal and the onClick (as the name, followed by a hash, might suggest). Let’s look at the contents of this file:

Here we have a variable that references a function that extracts the count variable from within the useLexicalScope() hook and then returns an incremented count.value. The useLexicalScope() hook is how Qwik retrieves references to the reactive variables, whether they’re stores or signals.
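The general idea of pulling a closure out into its own file can be modeled in a few lines. In this sketch, `restoreScope` and `invoke` are hypothetical stand-ins I wrote for illustration; they are not Qwik's implementation of useLexicalScope().

```typescript
// Toy model of extracting a closure into a standalone function.
type ToySignal<T> = { value: T };

// The captured variables for the handler that is currently running.
let currentScope: unknown[] = [];

// Hypothetical stand-in for a hook like useLexicalScope().
function restoreScope<T extends unknown[]>(): T {
  return currentScope as T;
}

// This handler closes over no local state: everything it needs comes
// from the restored scope, so it could live in its own chunk.
function extractedOnClick(): number {
  const [count] = restoreScope<[ToySignal<number>]>();
  return ++count.value;
}

// The "framework" sets up the captured scope, then invokes the handler.
function invoke<R>(scope: unknown[], handler: () => R): R {
  currentScope = scope;
  try {
    return handler();
  } finally {
    currentScope = [];
  }
}

const countSignal: ToySignal<number> = { value: 0 };
invoke([countSignal], extractedOnClick);
invoke([countSignal], extractedOnClick);
console.log(countSignal.value); // 2
```

Because the handler no longer depends on where it was defined, a build tool is free to move it to a separate file and load it only on the first click.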

Now for the HTML:

We can see where the reference to the script is ☝🏽.

And then we can see where more information is stored:

On top of that, Qwik attaches event listeners to inform it when it needs to load something. It can also tell what JS is more likely to be used and prefetches it. For example, it’ll load your shopping cart code before the newsletter code.

Courtesy of Tejas’s video

Other tools need to do a lot of stuff before they can be interactive. For the most part, Qwik downloads only HTML.

As we covered above, we have information stored in the DOM, but it’s not just information, it’s serialized. Qwik runs on the server and generates info, serializes it, and pauses. When the server sends the HTML to the client, it has all the info.
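Here's a simplified model of that "serialize on the server, pick up on the client" flow. The script tag type, the `app-state` id, and the helper names are assumptions for illustration, not the format Qwik actually emits.

```typescript
// Toy model of "pause on the server, resume on the client".
interface AppState {
  count: number;
}

const STATE_OPEN = '<script type="application/json" id="app-state">';
const STATE_CLOSE = '</script>';

// Server side: render the markup and embed the state next to it.
function pauseToHtml(state: AppState): string {
  // Escape "<" so the JSON can never terminate the script tag early.
  const json = JSON.stringify(state).replace(/</g, '\\u003c');
  return `<h1>Counter is ${state.count}</h1>${STATE_OPEN}${json}${STATE_CLOSE}`;
}

// Client side: read the state back out instead of recomputing it.
function resumeFromHtml(html: string): AppState {
  const start = html.indexOf(STATE_OPEN);
  const end = html.indexOf(STATE_CLOSE, start);
  if (start === -1 || end === -1) throw new Error('no serialized state found');
  return JSON.parse(html.slice(start + STATE_OPEN.length, end)) as AppState;
}

const pausedHtml = pauseToHtml({ count: 3 });
const resumed = resumeFromHtml(pausedHtml);
resumed.count++; // carry on from where the "server" left off
console.log(resumed.count); // 4
```

The HTML itself carries everything needed to continue, so whoever receives it can carry on without re-running the code that produced it.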

It’s kind of like a video you’re streaming:

You can resume your app once it’s in your browser.

And then, you can even pause it, with all its state, and resume it on another browser 🤯

Behold:

What just happened in that video? 🥴

- I interacted with the app on browser A.

- Paused the app with $0.qwik.pause() ($0 in devtools is a way to select the element you’re inspecting); it ran on the <html> element, which is a Qwik container.

- Copied the HTML.

- Opened a new browser (Safari, not that it matters).

- Went to our app’s URL, where I got the counter once again with a 0 state.

- Deleted the HTML.

- Pasted the “paused” app HTML.

- The state was restored in a new browser 🕺🏽.

- Everything is still interactive.

If that doesn’t blow your mind, I’m not sure what will. The use cases this opens up are endless.

As long as the Qwik loader has run, you can transfer state when you move from one Qwik app to another, or from one Qwik container to another.

Mull over that.

So we’ve talked about the why, what, and how: why we’re at a point where we need a new paradigm, why you should consider using Qwik, and how it works. We’ve also covered some cool features.

But we still haven’t shown how fast and performant it is.

Let’s dig in.

Most people measure web apps and website performance nowadays with Google Lighthouse, Page Speed Insights, or the good ol’ WebPageTest.

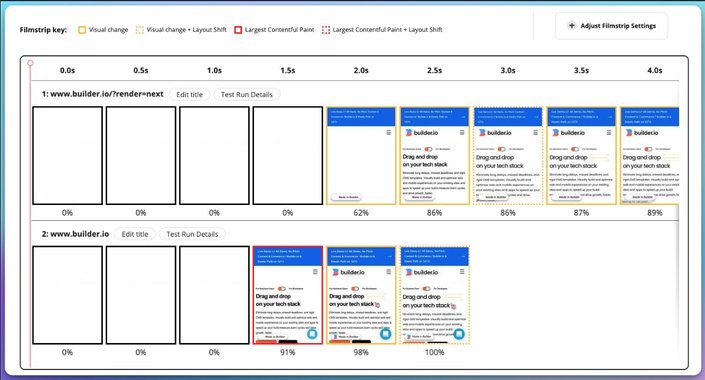

To compare web apps fairly, it’s always better to compare apples to apples. So we’ll look at the Builder.io website, specifically the home page.

"Why?" you may ask. Because the Builder home page has 2 versions:

- Qwik

- Next.js (v10)

Considering that there have been major improvements in Next in the last 3 versions, this is the closest thing we have that has similar content and functionality.

In order to render the old Next.js version we have a query param to use, so when we hit http://www.builder.io/?render=next we’ll get it.

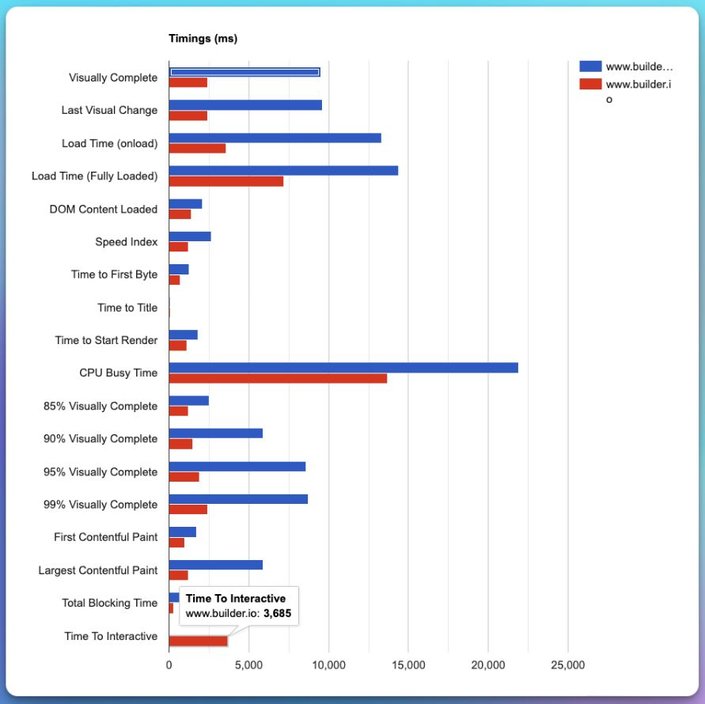

To compare the two, I ran a WebPageTest on both versions of our home page (Mobile 4G USA test configuration) and used the comparison feature.

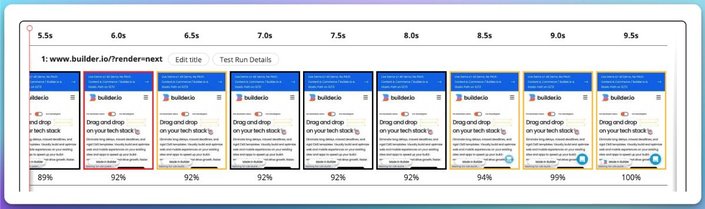

Right away, the Next.js site starts loading something visual, while the Qwik version shows almost everything after 2.5 seconds.

The Next.js version fully loads after 9.5 seconds.

Now let’s look at all the timings (notice that Qwik version is red and Next.js is blue):

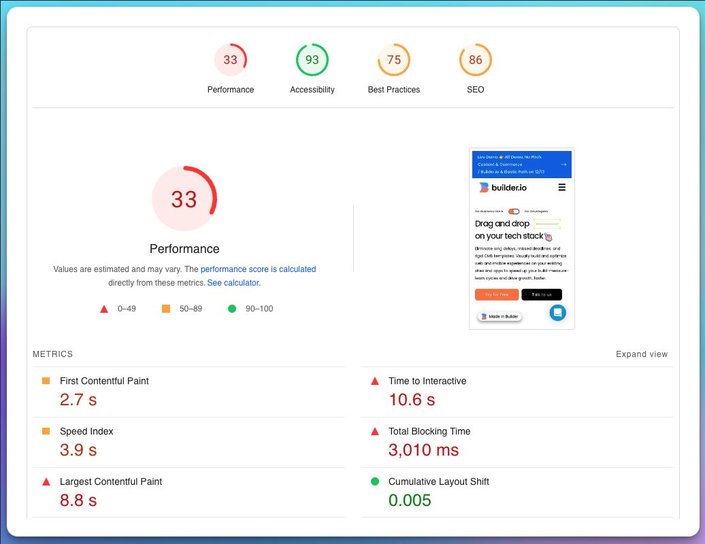

Note that the TTI metric on the bottom doesn’t show for the Next.js version, not because it’s at 0 but because, for some reason, WebPageTest has issues getting that metric. So I ran Page Speed Insights on it:

This run worked, though many earlier attempts failed. As we can see, its TTI is benchmarked at 10.6 seconds.

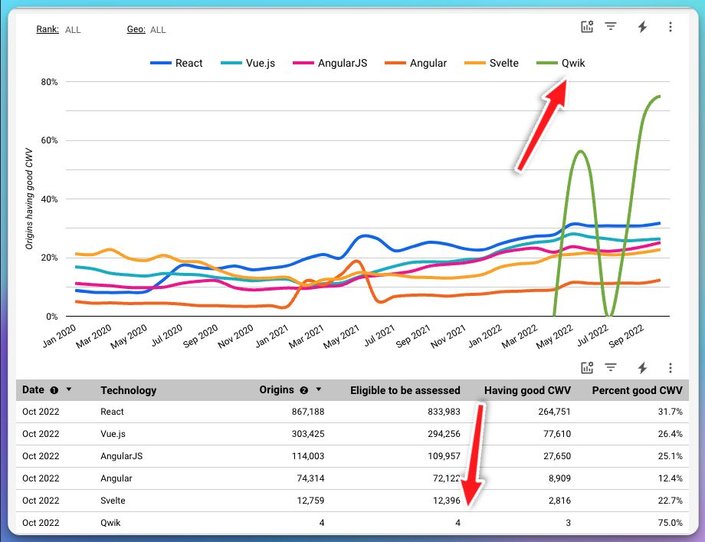

It’s still early days to get a lot of real user performance data from Google Chrome User Experience Report (CrUX for short) to show how Qwik performs. But there is some:

Yes, a whopping 4 is the number of sites that show up on the report when choosing popular frontend tech, but it’s somewhat of an indication.

Qwik is made by 3 performance nerds (they said it, not me 😉) that have 4 frontend frameworks under their belt.

They are inspired by the latest frameworks and the hard work done by incredible engineers, and in doing so, they have brought over a lot of the loved features of those frameworks.

The general realization they came to, that birthed the innovation for Qwik, was that server-side rendering for all SPA frameworks was an afterthought. At some point, you hit the hydration bottleneck, and you simply cannot optimize anymore on your end as a developer.

It’s easy to put things together, but it’s hard to split things up.

Qwik does not just support SSR/SSG/SPA, it is a hybrid. You can choose whichever fits your needs.

The demo in this post doesn’t do justice in demonstrating Qwik’s full capabilities — it’s not real-world enough. It shines in highly interactive big apps, as it would perform the same as our simple example. It doesn’t matter how many features or lines of code an app has.

One of Qwik’s best features is that it makes doing the right thing achievable. You don’t have to work hard with it to get good performance. It’s a given, just by its nature.

Other than that, there are more features to Qwik:

- Easy Micro Frontends

- You can leverage the entire React ecosystem within Qwik

- DX: Click to go to component source code, and more on the way!

Qwik and QwikCity are still in beta, but the community is starting to blossom. It’s never been more straightforward to build fast web apps than it is with Qwik. So what are you waiting for? Give it a try, the team is looking for more feedback 🙂.
