Is AI Actually Writing Production-Ready Code?

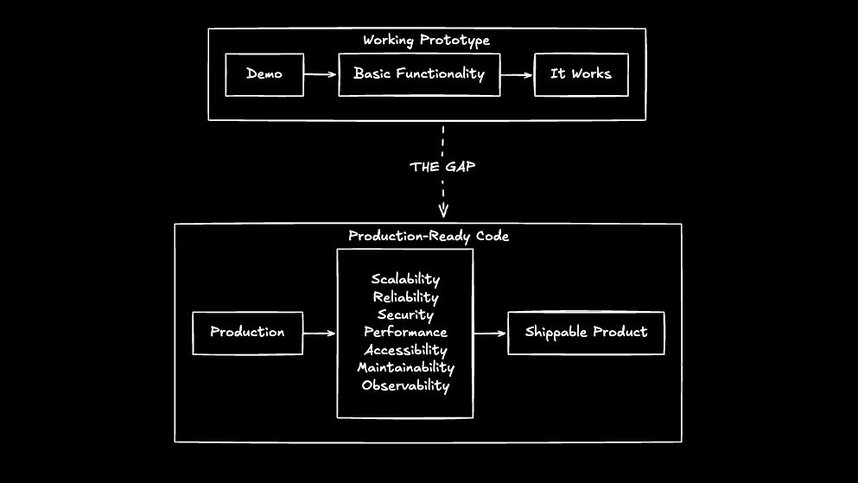

AI tools, now used daily by 50% of developers, can spin up a working website in minutes, but most teams spend weeks turning that demo into something they'd actually deploy. The gap between "it runs" and "it's production-ready" comes down to security, scalability, testing, and integration work that AI typically ignores. Closing that gap without burning your team on rewrites is the difference between a demo and a shippable product.

What makes a website production-ready

Production-ready means your website can handle real users, real traffic, and real problems without falling apart. A working prototype and production-ready code are not the same thing.

The difference comes down to non-functional requirements. These are the qualities that don't show up in a demo but determine whether your site survives contact with actual users.

- Scalability: Your site handles traffic spikes without crashing or slowing to a crawl

- Reliability: When something breaks, the system recovers gracefully instead of taking everything down

- Security: Authentication works correctly, data stays protected, and vulnerabilities get caught before attackers find them

- Performance: Pages load fast enough that users don't leave, measured by Core Web Vitals

- Accessibility: People using screen readers, keyboards, or other assistive tools can actually use your site

- Maintainability: Your team can update the code six months from now without a complete rewrite

- Observability: When production issues happen, you can see what went wrong and fix it

Most AI-generated websites check the "it works" box. Few check all seven boxes above. That gap is where teams burn weeks turning demos into shippable software.

What AI can build for production today

AI tools excel at certain types of web development and struggle with others. Knowing where AI-generated code can reach production with minimal modification helps you set realistic expectations before you start.

Websites and apps AI handles well

AI performs best on projects with clear patterns and limited complexity. Static sites, landing pages, and templated layouts fall squarely in AI's wheelhouse because they follow predictable structures that AI models have seen thousands of times.

Projects where AI-generated code often reaches production:

- Static marketing sites with standard layouts

- Landing pages with forms and basic user interactions

- Simple CRUD apps with straightforward database operations

- Documentation sites generated from markdown files

- Portfolio sites with image galleries and contact forms

These projects share common traits: limited state management, predictable user flows, and well-established patterns. When your project fits these constraints, AI can get you surprisingly close to production-ready.

Where AI-generated code falls short

Complex scenarios expose AI's limitations quickly. Multi-tenant architectures, sophisticated state management, and legacy system integration require contextual understanding that current AI tools lack.

- Complex integrations: Multiple APIs with different authentication patterns trip up AI because it can't reason about how systems interact

- State management: Real-time synchronization across distributed systems requires architectural decisions AI isn't equipped to make

- Edge cases: Handling failures, timeouts, and race conditions gracefully demands the kind of defensive thinking AI skips

- Legacy systems: Working with undocumented, brittle codebases requires human judgment about what's safe to change

- Concurrency: Managing parallel processes without deadlocks needs careful design that AI rarely provides

AI generates code that handles the happy path. Production systems need code that handles everything else.
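Race conditions are a good example of what the happy path hides. A common one in web UIs: a user types quickly, two search requests are in flight, and the older response arrives last and overwrites the newer one. The sketch below shows one standard guard, which tags each call with an increasing ID and discards stale results. `latestOnly` is a hypothetical helper name, not a library API.

```typescript
// Hypothetical helper: wraps an async loader so that only the most recent
// call's result is delivered. Older responses that resolve late are dropped.
type Loader<A, R> = (arg: A) => Promise<R>;

function latestOnly<A, R>(load: Loader<A, R>, onResult: (result: R) => void) {
  let latestId = 0; // incremented on every call

  return async (arg: A): Promise<void> => {
    const requestId = ++latestId;
    // Errors from `load` propagate to the caller unchanged.
    const result = await load(arg);
    // A newer call started while this one was in flight: discard stale data.
    if (requestId !== latestId) return;
    onResult(result);
  };
}
```

Aborting superseded requests with an `AbortController` also saves bandwidth, but the ID check is what guarantees the UI only ever shows the latest result.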

The gap between AI code generation and production deployment

Code that runs locally often fails in production. The gap between "it works on my machine" and "it's deployed and monitored" involves environment configuration, secrets management, and CI/CD pipeline integration that AI tools typically ignore.

Production deployment requires environment parity. This means your local development setup, staging server, and production environment all behave the same way. AI-generated code rarely accounts for these differences.

Configuration must adapt to different deployment targets without hardcoding values. Secrets like API keys and database credentials need secure management, not hardcoded strings that end up in version control where anyone can find them.
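One way to keep configuration and secrets out of the code is to read them from the environment at startup and validate them once, so a missing credential fails the deploy instead of the first real request. A minimal sketch, assuming a Node-style environment object; the variable names `DATABASE_URL`, `API_KEY`, and `PORT` and the `loadConfig` function are illustrative.

```typescript
// Minimal sketch of environment-driven configuration. Values come from the
// environment (a local .env file, staging, or a production secrets store
// injected at deploy time), never from string literals committed to the repo.
interface AppConfig {
  databaseUrl: string;
  apiKey: string;
  port: number;
}

type Env = Record<string, string | undefined>;

function loadConfig(env: Env): AppConfig {
  const missing = ["DATABASE_URL", "API_KEY"].filter((name) => !env[name]);
  if (missing.length > 0) {
    // Fail fast at startup instead of failing later on the first request.
    throw new Error(`Missing required environment variables: ${missing.join(", ")}`);
  }

  const port = Number(env.PORT ?? "3000"); // illustrative default
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error(`PORT must be a positive integer, got "${env.PORT}"`);
  }

  return { databaseUrl: env.DATABASE_URL!, apiKey: env.API_KEY!, port };
}
```

In a Node service you would call `loadConfig(process.env)` once at boot. Staging and production then differ only in the values your deployment platform injects, not in the code.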

Dependency management creates another gap. AI-generated code often pulls in packages without considering version conflicts, security vulnerabilities, or license compliance. Your CI/CD pipeline needs automated checks that catch these issues before code reaches production.
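Part of that pipeline check can be as simple as refusing dependency ranges that let an install silently pull in an unreviewed version. The sketch below is hypothetical and covers only version ranges. Vulnerability scanning (for example `npm audit`) and license checks would be separate steps.

```typescript
// Hypothetical CI check: flag dependency ranges loose enough that a fresh
// install could pull in an unreviewed version. Which ranges count as "too
// loose" is a team policy choice; this sketch flags "*", "x", "latest", an
// empty string, and open-ended ">" / ">=" ranges.
interface PackageJson {
  dependencies?: Record<string, string>;
  devDependencies?: Record<string, string>;
}

const LOOSE_RANGE = /^(\*|x|latest|)$|^>=?\s*\d/i;

function findLooseRanges(pkg: PackageJson): string[] {
  // Later keys win if a package appears in both sections.
  const all = { ...pkg.dependencies, ...pkg.devDependencies };
  return Object.entries(all)
    .filter(([, range]) => LOOSE_RANGE.test(range.trim()))
    .map(([name, range]) => `${name}@"${range}"`);
}

// Usage in a pipeline step: fail the build if anything is flagged.
// const flagged = findLooseRanges(JSON.parse(readFileSync("package.json", "utf8")));
// if (flagged.length > 0) { console.error(flagged.join("\n")); process.exit(1); }
```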

Why most AI-generated code isn't production-ready

The gap between demo and deployment is where the real issues hide: code quality, security vulnerabilities, and broken integrations. It shows in developer sentiment, too: 66% of developers are frustrated by AI solutions that are "almost right, but not quite."

Code quality and maintainability issues

AI-generated code often passes basic functionality tests while failing code review. Missing error handling, inconsistent naming conventions, and lack of proper abstraction create technical debt from day one.

Common quality issues include:

- Missing try-catch blocks and error boundaries that leave users staring at blank screens when something fails

- Inconsistent naming that violates your team's conventions and makes code harder to navigate

- No unit test coverage for generated functions, so you can't refactor safely

- Documentation gaps that make future maintenance a guessing game

- High cyclomatic complexity that makes code hard to reason about and debug

These issues compound over time. Code that ships without proper structure becomes increasingly expensive to maintain.
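Missing error handling is the easiest of these to show concretely. Here is a minimal sketch of a fetch wrapper that converts timeouts, network errors, and non-2xx responses into a typed result the UI can render as an error state. It assumes a runtime with the standard `fetch` and `AbortController` globals (modern browsers, Node 18+). `fetchJson` and the `Result` type are illustrative names, and the `fetchImpl` parameter exists only so the function can be tested with a fake.

```typescript
// A result the UI can render either way, instead of an unhandled exception
// that leaves a blank screen.
type Result<T> = { ok: true; data: T } | { ok: false; error: string };

async function fetchJson<T>(
  url: string,
  timeoutMs = 5000,
  fetchImpl: typeof fetch = fetch,
): Promise<Result<T>> {
  const controller = new AbortController();
  // The timeout covers both the request and reading the response body.
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    const res = await fetchImpl(url, { signal: controller.signal });
    if (!res.ok) return { ok: false, error: `HTTP ${res.status}` };
    return { ok: true, data: (await res.json()) as T };
  } catch (err) {
    // AbortError means our timeout fired; anything else is a network or parse failure.
    const name = (err as { name?: unknown } | null)?.name;
    const error = name === "AbortError" ? `Timed out after ${timeoutMs} ms` : String(err);
    return { ok: false, error };
  } finally {
    clearTimeout(timer);
  }
}
```

Returning a result instead of throwing forces each caller to handle the failure branch, which is exactly the branch generated code tends to skip.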

Security vulnerabilities and testing gaps

AI models learn from public code, including code with security vulnerabilities. OWASP Top 10 issues like SQL injection and cross-site scripting appear frequently in AI-generated output, with over 40% of it containing security flaws, because the training data included plenty of insecure examples.

Security concerns in AI-generated code:

- SQL injection vulnerabilities from unsanitized inputs

- Cross-site scripting risks from improper output encoding

- Missing authentication checks on protected routes

- Hardcoded credentials in configuration files

- Unvalidated user inputs passed directly to databases
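The first two items on that list have well-known fixes: pass user input as query parameters instead of concatenating it into SQL, and encode untrusted text before it reaches HTML. A minimal sketch of both follows. The `{ text, values }` object matches the query config shape accepted by node-postgres (`pg`), while `findUserByEmail` and `escapeHtml` are hypothetical helpers.

```typescript
// 1) SQL injection: keep user input out of the SQL text. The database driver
//    sends `values` separately, so input can never change the query structure.
interface ParameterizedQuery {
  text: string;
  values: unknown[];
}

function findUserByEmail(email: string): ParameterizedQuery {
  // Unsafe pattern often seen in generated code:
  //   `SELECT id, email FROM users WHERE email = '${email}'`
  return { text: "SELECT id, email FROM users WHERE email = $1", values: [email] };
}

// 2) XSS: encode untrusted text before inserting it into an HTML string.
const HTML_ESCAPES: Record<string, string> = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

function escapeHtml(untrusted: string): string {
  return untrusted.replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch] ?? ch);
}
```

In practice, prefer your framework's built-in encoding (React, for instance, escapes interpolated text in JSX by default) and reach for manual escaping only where you build HTML strings yourself.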

Production code requires security scanning and often penetration testing before deployment. AI-generated code rarely passes these checks without significant modification.

Integration with existing systems

Connecting AI-generated code to real infrastructure exposes mismatches between generated assumptions and actual system requirements. AI assumes ideal conditions. Production operates under real constraints.

| Integration Challenge | What AI Assumes | What Production Requires |
|---|---|---|
| Authentication | Simple API keys | Enterprise SSO/SAML |
| Database | Fresh schema | Migrations without data loss |
| APIs | Stable contracts | Version compatibility |
| Services | Single instance | Load balancing and failover |
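The "single instance" assumption is a common one to break. In many deployments, failover lives in a load balancer or service mesh rather than in application code, but the idea fits in a few lines: try each replica in turn and surface a combined error only when all of them fail. This is a hypothetical sketch. `withFailover` and the endpoint URLs are illustrative.

```typescript
// Hypothetical sketch: try each replica in order and return the first success,
// instead of assuming a single always-available instance.
async function withFailover<T>(
  endpoints: string[],
  call: (endpoint: string) => Promise<T>,
): Promise<T> {
  const errors: string[] = [];
  for (const endpoint of endpoints) {
    try {
      return await call(endpoint);
    } catch (err) {
      // Record the failure and move on to the next replica.
      errors.push(`${endpoint}: ${String(err)}`);
    }
  }
  throw new Error(`All endpoints failed:\n${errors.join("\n")}`);
}
```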

What it takes to make AI code production-ready

Transforming AI-generated code into shippable products requires specific processes and human oversight. These steps add time but prevent costly production incidents.

Code review and validation requirements

Human review remains essential for AI-generated code, and AI does not automatically make experienced developers faster: they actually take 19% longer with AI than without it. Peer review catches issues that automated tools miss, while architecture decision records document why certain approaches were chosen.

Effective review processes include mandatory peer review before any merge to main branches, automated PR checks for linting and security scanning, and checklist validation against production requirements.
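A security-scanning step can start small. The sketch below is a hypothetical PR check that scans the added lines of a unified diff for likely hardcoded credentials. The three patterns are illustrative only; dedicated secret scanners ship much larger, tuned rule sets.

```typescript
// Hypothetical PR check: flag likely hardcoded credentials in added lines.
const SECRET_PATTERNS: Array<[string, RegExp]> = [
  ["AWS access key ID", /AKIA[0-9A-Z]{16}/],
  ["Private key block", /-----BEGIN [A-Z ]*PRIVATE KEY-----/],
  ["Credential assignment", /(api[_-]?key|secret|password|token)\s*[:=]\s*["'][^"']{8,}["']/i],
];

function scanDiff(diff: string): string[] {
  const findings: string[] = [];
  diff.split("\n").forEach((line, index) => {
    // Only inspect lines the PR adds ("+"), skipping the "+++" file header.
    if (!line.startsWith("+") || line.startsWith("+++")) return;
    for (const [label, pattern] of SECRET_PATTERNS) {
      if (pattern.test(line)) findings.push(`diff line ${index + 1}: ${label}`);
    }
  });
  return findings;
}
```

A CI job would feed it the PR's diff (for example, the output of `git diff` against the target branch) and fail the build when the returned list is non-empty.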

Code ownership matters. Someone on your team needs to understand and take responsibility for every line that ships.

Testing and quality assurance processes

The testing pyramid applies to AI-generated code just like human-written code. Unit tests provide fast feedback on individual functions. Integration tests verify that components work together correctly. End-to-end tests validate complete user journeys.

| Test Type | Purpose | When to Run |
|---|---|---|
| Unit Tests | Verify individual functions | Every commit |
| Integration Tests | Check API and database operations | Every PR |
| E2E Tests | Validate critical user flows | Before deployment |
| Performance Tests | Ensure scalability | Before major releases |
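At the base of the pyramid, a unit test for a generated utility might look like the following. It is a sketch using Node's built-in `node:test` runner and `node:assert/strict` (Node 18+); Vitest or Jest tests would look nearly identical. `slugify` is a hypothetical function standing in for AI-generated code, and the second test targets the edge cases generated code often misses.

```typescript
import { test } from "node:test";
import assert from "node:assert/strict";

// Function under test: a hypothetical AI-generated utility.
export function slugify(title: string): string {
  return title
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9]+/g, "-") // collapse runs of non-alphanumerics into one dash
    .replace(/^-+|-+$/g, ""); // drop leading and trailing dashes
}

// Unit tests: fast and isolated, cheap enough to run on every commit.
test("converts a title to a URL slug", () => {
  assert.equal(slugify("Hello, World!"), "hello-world");
});

test("handles edge cases the happy path skips", () => {
  assert.equal(slugify(""), "");
  assert.equal(slugify("!!!"), "");
  assert.equal(slugify("  --Already--Slugged--  "), "already-slugged");
});
```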

CI pipelines should block deployments when tests fail. Flaky tests need immediate attention because they erode confidence in the entire test suite.

Design system integration

AI-generated code often ignores existing design systems, creating inconsistent UI that requires rework. Production-ready output uses your team's actual components, design tokens, and established patterns.

Design tokens are the named values for colors, spacing, typography, and other visual properties that keep your UI consistent. When AI tools understand your design system, generated code matches your existing patterns instead of introducing visual inconsistency that designers have to fix.
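As a concrete illustration, tokens are often kept in one source of truth and compiled to CSS custom properties that components reference. This is a minimal sketch: the token names and values are hypothetical, and real design systems usually rely on dedicated token tooling rather than a hand-written function.

```typescript
// Hypothetical token tree: nested names map to raw values.
type TokenTree = { [key: string]: string | TokenTree };

const tokens: TokenTree = {
  color: { primary: "#1a56db", surface: "#ffffff", text: "#111827" },
  space: { sm: "8px", md: "16px", lg: "24px" },
  font: { body: "Inter, sans-serif" },
};

// Flatten nested tokens into CSS custom property declarations,
// e.g. { color: { primary } } -> "--color-primary: #1a56db;".
// The starting prefix is a single "-" so the first level becomes "--color".
function toCssVariables(tree: TokenTree, prefix = "-"): string[] {
  return Object.entries(tree).flatMap(([key, value]) =>
    typeof value === "string"
      ? [`${prefix}-${key}: ${value};`]
      : toCssVariables(value, `${prefix}-${key}`),
  );
}

// Emit a :root block that a global stylesheet can include.
const rootCss = `:root {\n  ${toCssVariables(tokens).join("\n  ")}\n}`;
console.log(rootCss);
```

Components then use `var(--color-primary)` instead of a raw hex value, so a tool that reads the same token file produces colors that match the rest of the UI.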

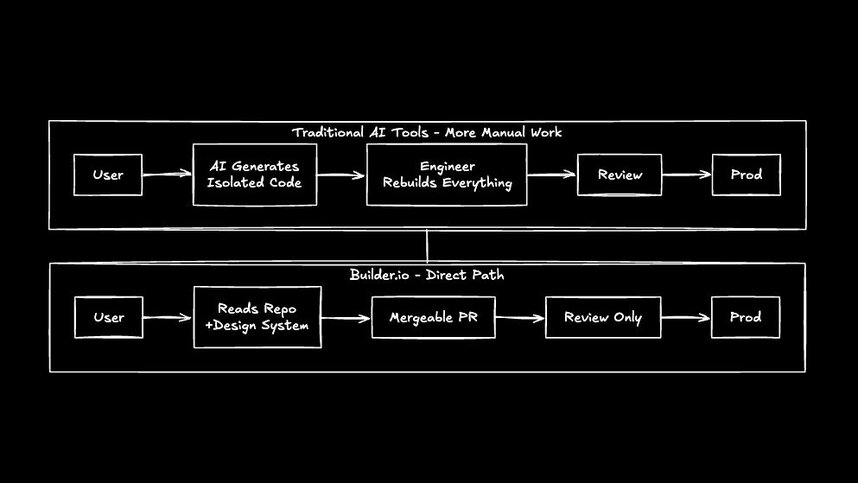

How design-to-code AI tools compare for production use

Different approaches to design-to-code generation offer different tradeoffs between speed and production readiness.

Figma plugins export designs directly to code, but the output often lacks semantic HTML structure and accessibility features. You get something that looks right but doesn't work correctly for all users.

AI app builders like v0 and Bolt.new turn prompts into working apps quickly. They excel at demos and simple CRUD applications but struggle with complex state management and real-world data handling.

The key question for any tool: does the output fit into your existing codebase, or does it create a parallel world that engineers have to rebuild anyway?

How Builder.io bridges the AI-to-production gap

Most AI coding tools work great for weekend projects. Almost none survive enterprise security reviews. That gap, between hobbyist speed and enterprise rigor, is exactly where Builder.io lives.

Real codebase integration

Builder.io connects to your existing repository and understands your project structure. Instead of generating isolated code snippets, it produces mergeable PRs that fit your existing architecture.

This means Builder.io reads your component registry, respects your path aliases, and follows your TypeScript configuration. The output works with your build tools, whether you use Vite, Webpack, or another bundler.

Design system enforcement

Builder.io indexes your design system so that components, tokens, and documentation become the default way UI gets built. Generated code uses your actual button component instead of creating a new one that looks similar but behaves differently.

This enforcement happens automatically. Designers and PMs can make changes knowing the output will match engineering standards without manual policing.

Production-ready output from day one

Builder.io generates code with server-side rendering support, accessibility compliance, and performance optimization built in. Tree-shaking and bundle splitting happen by default, not as afterthoughts.

The output passes the same code review standards as human-written code because it follows your team's established patterns. Engineers review and merge, maintaining full ownership of what ships.

Making AI-generated websites production-ready in practice

Start small. Choose one page or flow and generate it inside your real repo using your components, your data, and your normal review process.

You'll see the difference immediately: cleaner diffs, faster reviews, and a shorter path from concept to commit. The rebuild tax that plagues most AI-generated code disappears when the tool understands your system from the start.

If your team is tired of paying the rebuild tax on AI-generated code, sign up for Builder.io and see how it plugs directly into your repo.

The handoff era is over. The future is everyone building in code.