Generative AI has been exploding recently, and we encounter the terms “ChatGPT”, “LLMs” and “Agents” several times a day. With so many new developments and powerful tools, it’s hard to keep up. In this article, you’re going to learn all the basics so you can officially add AI to your toolbox.

I’m a developer at heart, and this article is written for us developers. The recent (and upcoming) advancements in AI can safely be called a paradigm shift for our craft. Here’s why.

Traditionally, for a business to use AI, they would have to:

- Hire top talent across various fields (data science, AI/ML) for model development.

- Gather, scrape, or buy a lot of data to train the model.

- Buy/rent expensive hardware for each training run.

- Test, reinforce/fine-tune, and deploy the model to production in a scalable way.

Today, anyone can benefit from AI — it’s one API call away. These APIs tend to be affordable, easy to consume and reliable for most tasks.

This makes AI very attractive for projects at all stages. Now that AI is no longer exclusive to well-funded enterprises, we developers are going to spearhead its adoption at a global scale.

LLMs (Large Language Models) are models with billions of parameters, trained on vast amounts of text. They differ from traditional AI models, which are trained to accomplish one very specific task.

LLMs are trained to understand natural language. This is very powerful because such models can connect more dots. You can use LLMs to produce content, analyze sentiment, write code, validate outputs, provide customer support, and much more.

Some LLMs are open-source, such as Falcon, Mistral, and Llama 2, while others are closed-source and served through an API, such as OpenAI GPT and Anthropic Claude. In this article, we’ll focus on OpenAI.

First you’ll need to sign up at OpenAI and obtain an API key. Once obtained, make sure you set it as an environment variable (OPENAI_API_KEY).
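A quick way to catch a missing key early is to check for it before creating the client. This is a minimal sketch: requireApiKey is a hypothetical helper (not part of the openai package), and it takes the environment as a parameter so it is easy to test. In Node.js you would call it with process.env.

```typescript
// Hypothetical helper: read the API key from an environment-like object
// and fail fast with a clear message if it is missing or blank.
function requireApiKey(env: Record<string, string | undefined>): string {
  const key = env["OPENAI_API_KEY"]?.trim();
  if (!key) {
    throw new Error(
      "OPENAI_API_KEY is not set. Export it in your shell before running the app.",
    );
  }
  return key;
}

// In Node.js: const apiKey = requireApiKey(process.env);
```

If you prefer, you can also pass the key explicitly with new OpenAI({ apiKey }) instead of relying on the environment variable.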

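Create an app.ts file somewhere in your file system. Initialize a new NPM project (npm init -y), install the OpenAI client (npm i openai), and you should be good to go! One caveat: the example below uses top-level await, which only works when the file runs as an ES module.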

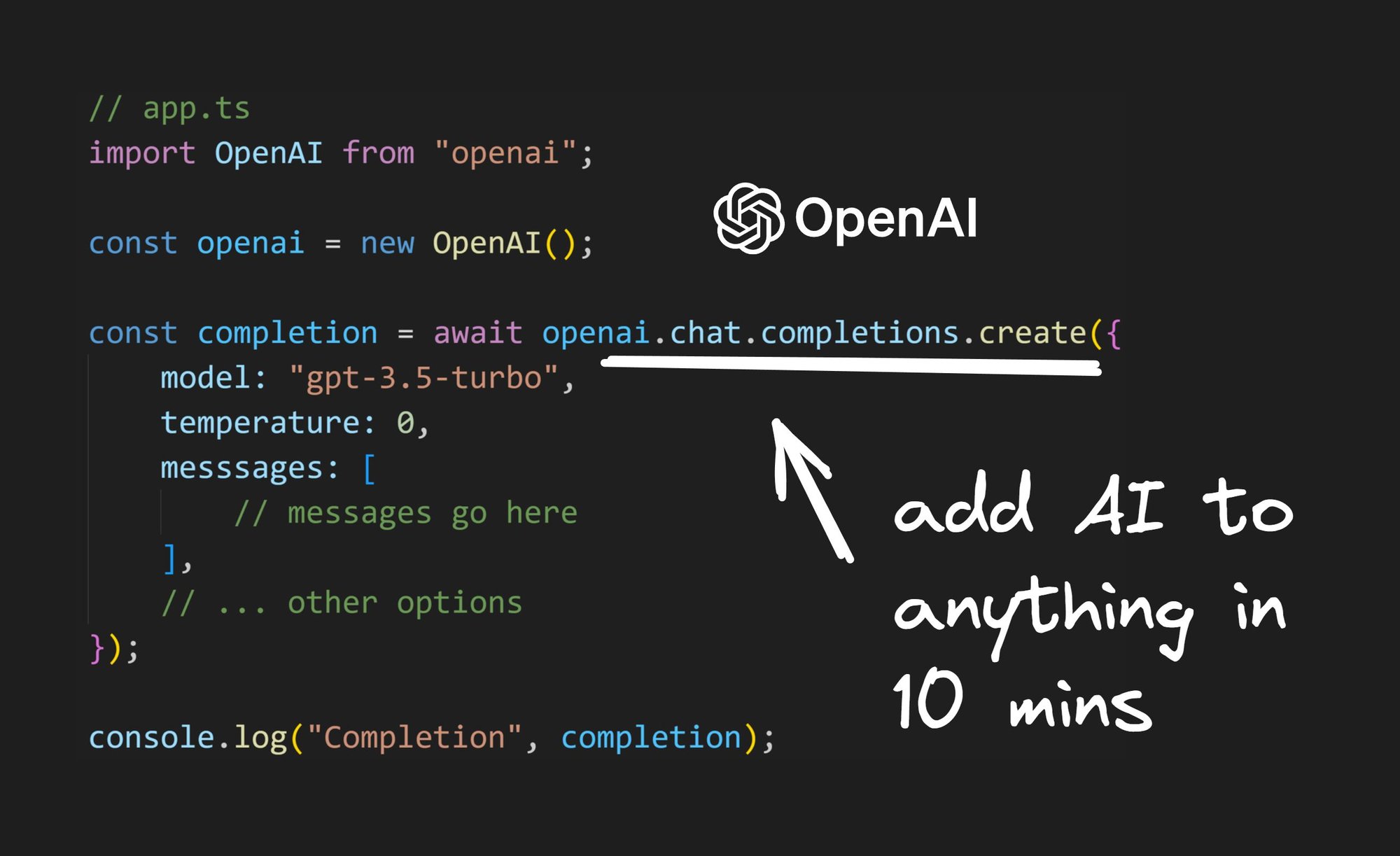

Here’s an example of how we’d call the OpenAI API using the OpenAI Client:

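```typescript
// app.ts
import OpenAI from "openai";

// No API key passed: the client reads it from the OPENAI_API_KEY environment variable.
const openai = new OpenAI();

const completion = await openai.chat.completions.create({
  model: "gpt-3.5-turbo",
  temperature: 0,
  messages: [
    // messages go here, for example:
    { role: "user", content: "Hello!" },
  ],
  // ... other options
});

console.log("Completion", completion);
```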

Let’s quickly go through what’s going on here.

First, we import OpenAI from the openai NPM package.

Then, we initialize a new OpenAI client. We don’t provide an API key explicitly, as it is automatically fetched from the OPENAI_API_KEY environment variable set earlier.

Finally, we create a Chat Completion. “A Chat Completion? What is that?” you might be thinking. Let me explain.

OpenAI provides various APIs: DALL-E for image generation, Whisper for audio transcription, the Embeddings API, and so on. Probably the most well-known and widely used is the Chat Completions API. Basically, creating a chat completion means having a chat with the AI model.

Despite the term “chat”, it isn’t limited to chat applications. Chat Completions can be used for single operations/tasks as well. It’s simply the API that supports the most capable models (gpt-3.5-turbo and gpt-4).

When creating a new Chat Completion, you’ll provide some options. Let’s go over some of them:

- `model`: The model you want to use for this particular call. In this example, we use gpt-3.5-turbo.

- `temperature`: How creative (random) we want the AI model to be. The API accepts values from 0 to 2: lower values make the output more focused and deterministic, higher values make it more varied. If your task requires precision, attention to detail, and factuality, definitely set this to 0.

- `max_tokens`: The maximum number of tokens to generate in the response. We’ll talk about tokens later in this article. In short, this is your opportunity to limit the response length, save on costs, and help reduce latency.

- `messages`: This is where the magic happens. Here, you’ll provide a set of messages. This can be anything from a single message for a basic task/operation to a set of messages representing a full chat history. You’ll spend most of your time here.
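To make these options concrete, here is a sketch that builds a request body and sanity-checks it before sending. The ChatRequest type is a simplified local stand-in for the SDK’s own parameter types (covering only the four options above), and validateRequest is a hypothetical helper; the 0 to 2 temperature range reflects the OpenAI API reference at the time of writing, so double-check it there.

```typescript
// Simplified local types; the openai package ships its own, more complete ones.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface ChatRequest {
  model: string;
  temperature?: number; // 0 to 2 per the API reference
  max_tokens?: number; // upper bound on tokens generated in the response
  messages: ChatMessage[];
}

// Hypothetical helper: returns a list of problems (empty if none were found).
function validateRequest(req: ChatRequest): string[] {
  const problems: string[] = [];
  if (req.messages.length === 0) {
    problems.push("messages must contain at least one message");
  }
  if (req.temperature !== undefined && (req.temperature < 0 || req.temperature > 2)) {
    problems.push("temperature must be between 0 and 2");
  }
  if (
    req.max_tokens !== undefined &&
    (!Number.isInteger(req.max_tokens) || req.max_tokens < 1)
  ) {
    problems.push("max_tokens must be a positive integer");
  }
  return problems;
}

const request: ChatRequest = {
  model: "gpt-3.5-turbo",
  temperature: 0,
  max_tokens: 256,
  messages: [{ role: "user", content: "Summarize this article in one sentence." }],
};

console.log(validateRequest(request)); // []
```

Checking locally like this is optional (the API rejects invalid values on its own), but it surfaces mistakes before you spend a network round trip.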

For the full list of options, check out the OpenAI API documentation.

As I mentioned earlier, the messages property is where the magic happens. Each item in this array represents a message with two properties: content, which holds the text of the message, and role, which can be one of the following:

- `system`: Use system messages to provide guidelines, set boundaries, provide additional knowledge, or set the tone. Imagine this as some “inner voice” that the AI model will take into account when generating responses.

- `user`: This represents messages sent by the user. For example, if you are building a chat app, you want to send the user’s messages as user messages.

- `assistant`: These messages represent the AI model’s responses.

```typescript
[
  {
    role: "user",
    content: "Hi, my name is Ariel. Greet me!",
  },
]
```

The response to that would be:

```typescript
{
  role: "assistant",
  content: "Hi Ariel, how are you doing?",
}
```

Now, let’s see how we can add a system message to control the behavior of the AI model:

```typescript
[
  {
    role: "system",
    content: "You are a rude support agent. Only respond with uppercase.",
  },
  {
    role: "user",
    content: "Hi, my name is Ariel. Greet me!",
  },
]
```

And the response:

```typescript
{
  role: "assistant",
  content: "HELLO ARIEL, WHAT DO YOU WANT?",
}
```

Pretty cool! We can use the system message to dictate how the AI model should behave, depending on our needs.
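The Chat Completions API doesn’t remember earlier calls, so a chat app has to keep the conversation itself and resend the full history, system message included, with every request. Here’s a minimal sketch of that bookkeeping. The ChatMessage type is a simplified local stand-in for the SDK’s types, Conversation is a hypothetical helper, and the API call itself is left out:

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical helper that accumulates a chat history.
class Conversation {
  private readonly history: ChatMessage[];

  constructor(systemPrompt: string) {
    // The system message stays first, so it applies to every turn.
    this.history = [{ role: "system", content: systemPrompt }];
  }

  addUser(content: string): void {
    this.history.push({ role: "user", content });
  }

  addAssistant(content: string): void {
    this.history.push({ role: "assistant", content });
  }

  // Returns a copy to pass as `messages` in the next request.
  messages(): ChatMessage[] {
    return this.history.map((m) => ({ ...m }));
  }
}

const chat = new Conversation("You are a rude support agent. Only respond with uppercase.");
chat.addUser("Hi, my name is Ariel. Greet me!");
chat.addAssistant("HELLO ARIEL, WHAT DO YOU WANT?");
chat.addUser("What is my name?");

console.log(chat.messages().map((m) => m.role)); // [ 'system', 'user', 'assistant', 'user' ]
```

On each turn you’d pass chat.messages() as the messages option and append the model’s reply with addAssistant. Long conversations eventually run into the model’s token limit, which we’ll get to later.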

Let me share a few more examples in which utilizing the System Prompt is useful:

Consider this example — a user asks an AI customer support bot for stock information on shoes:

```typescript
[
  {
    role: "system",
    content: `
      You are an AI assistant for a shoe store, "Best Shoes".
      You introduce yourself as "Shoezzer".
      Here is stock information in CSV format:
      model,size,stock
      nike,12,4
      nike,11,0
      nike,10,4
      nike,9,1
    `,
  },
  {
    role: "user",
    content: "Hi, do you have Nike shoes, size 11?",
  },
]
```

The response:

```typescript
{
  role: "assistant",
  content: "Hi, my name is Shoezzer and I'm here to help you. Unfortunately, we do not currently have Nike shoes size 11 in stock.",
}
```

In this example, we have provided the AI model with information about stock availability using a simple CSV format. It could also be JSON, XML, or anything else. LLMs can handle it!
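In a real app, the stock data would most likely come from a database rather than being hardcoded in the prompt. Here’s a sketch of turning records into that CSV block and embedding it in the system message. StockItem, toCsv, and buildStorePrompt are hypothetical names, and the prompt wording is copied from the example above:

```typescript
interface StockItem {
  model: string;
  size: number;
  stock: number;
}

// Hypothetical helper: serialize records as CSV with a header row.
// No quoting or escaping: assumes values contain no commas or newlines.
function toCsv(items: StockItem[]): string {
  const header = "model,size,stock";
  const rows = items.map((i) => `${i.model},${i.size},${i.stock}`);
  return [header, ...rows].join("\n");
}

// Hypothetical helper: build the system message from live stock data.
function buildStorePrompt(items: StockItem[]): string {
  return [
    'You are an AI assistant for a shoe store, "Best Shoes".',
    'You introduce yourself as "Shoezzer".',
    "Here is stock information in CSV format:",
    toCsv(items),
  ].join("\n");
}

const stock: StockItem[] = [
  { model: "nike", size: 12, stock: 4 },
  { model: "nike", size: 11, stock: 0 },
];

const systemMessage = { role: "system" as const, content: buildStorePrompt(stock) };
console.log(systemMessage.content);
```

Swapping toCsv for JSON.stringify(items) would work just as well, since the model can read either format; CSV simply tends to be more compact.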

LLMs will try to satisfy the user no matter what. They are trained to do that. What if our business use case requires stricter boundaries? Take a look at the following example:

```typescript
[
  {
    role: "system",
    content: `
      You are a Doctor's Assistant. You only do the intake. The user will share some of the
      symptoms with you and you will help identify potential diagnosis to be shared with the doctor.
      You are not allowed to provide any assistance to patients under the age of 16.
      In such a case, politely decline and ask them to call the practice at 1-800-PRACTICE. No additional text.
    `,
  },
  {
    role: "assistant",
    content: "Good afternoon. In order to help you, please tell me your age and any symptoms you're suffering from.",
  },
  {
    role: "user",
    content: "I'm 15 years old. I have pretty bad stomach pain and have been coughing since yesterday.",
  },
]
```

The response:

```typescript
{
  role: "assistant",
  content: "I'm sorry, but as a Doctor Assistant, I am not able to provide assistance to patients under the age of 16. I recommend that you call the practice at 1-800-PRACTICE for further assistance.",
}
```

Imagine that we want to use AI for a single task rather than a chat app, and we want to render the output of the AI response in some UI. That’s hard to do reliably with free-form text responses. Here’s how we can approach it:

```typescript
[
  {
    role: "system",
    content: `
      You are a talented task planner. The user will tell you their goal and
      you will generate exactly 3 tasks to help them accomplish it.
      You must strictly follow this schema:
      {
        tasks: {
          title: string; // max 50 characters
          description: string; // max 100 characters
          difficulty: number; // on a scale of 1 to 5
        }[]
      }
    `,
  },
  {
    role: "user",
    content: "I want to visit to Amsterdam!",
  },
]
```

The response:

```typescript
{
  role: 'assistant',
  content: '{\n' +
    ' "tasks": [\n' +
    ' {\n' +
    ' "title": "Research Amsterdam attractions",\n' +
    ' "description": "Find out about the top attractions in Amsterdam and create a list of places you want to visit.",\n' +
    ' "difficulty": 2\n' +
    ' },\n' +
    ' {\n' +
    ' "title": "Book accommodation in Amsterdam",\n' +
    ' "description": "Search for and book a suitable accommodation in Amsterdam that fits your budget and preferences.",\n' +
    ' "difficulty": 3\n' +
    ' },\n' +
    ' {\n' +
    ' "title": "Plan transportation in Amsterdam",\n' +
    ` "description": "Figure out the best way to get around Amsterdam, whether it's by public transportation, bike, or walking, and plan your routes.",\n` +
    ' "difficulty": 2\n' +
    ' }\n' +
    ' ]\n' +
    '}'
}
```

Check that out! It’s a perfectly valid JSON response that you can `JSON.parse`, return to a front end, and render!
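Valid JSON doesn’t guarantee the model honored every constraint, though: the third description in the response above is well over the 100-character limit the schema asks for. A minimal hand-rolled sketch of validating the parsed result before rendering it, assuming the schema from the system message; Task and parseTasks are hypothetical names, and in practice you might reach for a schema validation library instead:

```typescript
interface Task {
  title: string;
  description: string;
  difficulty: number;
}

// Hypothetical validator for the task-planner schema from the system message.
// Returns the well-typed tasks plus a list of schema violations (empty if none).
function parseTasks(content: string): { tasks: Task[]; problems: string[] } {
  const data: unknown = JSON.parse(content); // throws on malformed JSON
  const rawTasks =
    typeof data === "object" && data !== null
      ? (data as { tasks?: unknown }).tasks
      : undefined;
  if (!Array.isArray(rawTasks)) {
    return { tasks: [], problems: ["missing tasks array"] };
  }

  const problems: string[] = [];
  if (rawTasks.length !== 3) {
    problems.push(`expected 3 tasks, got ${rawTasks.length}`);
  }

  const tasks: Task[] = [];
  rawTasks.forEach((t: any, i: number) => {
    const label = `task ${i + 1}`;
    if (
      typeof t?.title !== "string" ||
      typeof t?.description !== "string" ||
      typeof t?.difficulty !== "number"
    ) {
      problems.push(`${label}: missing or mistyped fields`);
      return;
    }
    if (t.title.length > 50) problems.push(`${label}: title over 50 characters`);
    if (t.description.length > 100) problems.push(`${label}: description over 100 characters`);
    if (t.difficulty < 1 || t.difficulty > 5) problems.push(`${label}: difficulty outside 1 to 5`);
    tasks.push({ title: t.title, description: t.description, difficulty: t.difficulty });
  });

  return { tasks, problems };
}
```

Whether a non-empty problems list should trigger a retry, a truncation, or just a log entry depends on how strict your UI needs to be.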

If you require structured responses, keep temperature at 0, and check out the OpenAI Function Calling feature. It’s very powerful. Let me know if you want me to write an article about OpenAI Function Calling!

A response from OpenAI is something like this:

```typescript
{
  id: '...',
  object: 'chat.completion',
  created: 1696431344,
  model: 'gpt-3.5-turbo-0613',
  choices: [ { index: 0, message: [Object], finish_reason: 'stop' } ],
  usage: { prompt_tokens: 144, completion_tokens: 45, total_tokens: 189 }
}
```

Notice the `usage` property. It reports the number of tokens used in the request, the response, and in total. But what are those tokens?
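Most of the time you only need the text of the first choice and the token counts. Here’s a sketch using a simplified local type that mirrors the fields in the logged response above; readCompletion is a hypothetical helper, and the example object is illustrative rather than a real API response. A finish_reason of "length" means the reply was cut off (for example, by max_tokens), so the text may be incomplete:

```typescript
// Simplified local type mirroring the fields shown in the logged response.
interface ChatCompletion {
  model: string;
  choices: {
    index: number;
    message: { role: string; content: string | null };
    finish_reason: string;
  }[];
  usage: { prompt_tokens: number; completion_tokens: number; total_tokens: number };
}

// Hypothetical helper: pull out the reply text and flag truncated output.
function readCompletion(completion: ChatCompletion) {
  const choice = completion.choices[0];
  if (!choice) throw new Error("no choices returned");
  return {
    text: choice.message.content ?? "",
    truncated: choice.finish_reason === "length",
    totalTokens: completion.usage.total_tokens,
  };
}

// Illustrative object shaped like the response above.
const example: ChatCompletion = {
  model: "gpt-3.5-turbo-0613",
  choices: [
    {
      index: 0,
      message: { role: "assistant", content: "Hi Ariel, how are you doing?" },
      finish_reason: "stop",
    },
  ],
  usage: { prompt_tokens: 144, completion_tokens: 45, total_tokens: 189 },
};

console.log(readCompletion(example));
```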

It’s easy to think that LLMs truly understand words, but that’s not exactly how they work. Under the hood, LLMs operate on tokens.

Tokens are numeric representations of strings, parts of strings, or even individual characters. Essentially, the text we provide to the LLM is converted into a sequence of integer token IDs, which the model internally maps to vectors of floating-point numbers that it can process efficiently.

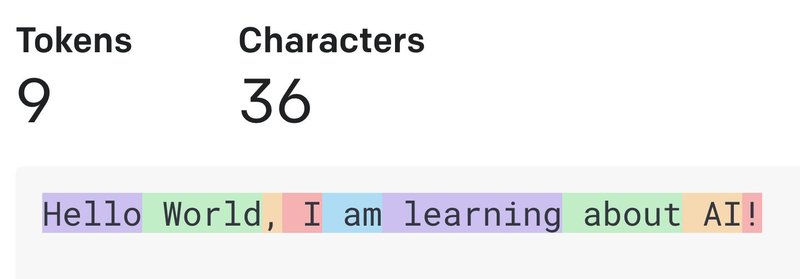

For example, the text `Hello World, I am learning about AI!` equals 9 tokens. How exactly?

The tokenizer (here, the one used by the original GPT-3 models; gpt-3.5-turbo uses a different encoding and would produce different IDs) splits this sentence into the following tokens:

```typescript
[15496, 2159, 11, 314, 716, 4673, 546, 9552, 0]
```

This is a fascinating topic, but for the sake of this tutorial, just know that your text is processed as tokens and you are billed per token, for both the input (request/prompt) and the output (response). Pricing is usually quoted per 1,000 tokens, and output tokens tend to be more expensive than input tokens. Check out the OpenAI Pricing page to view the exact cost.
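Since billing is per 1,000 tokens, estimating the cost of a call is simple arithmetic on the usage numbers. A sketch with placeholder prices: the PRICES values below are made up for illustration and are not OpenAI’s actual rates, so substitute the current numbers for your model from the pricing page. estimateCost is a hypothetical helper:

```typescript
interface Usage {
  prompt_tokens: number;
  completion_tokens: number;
}

// Price per 1,000 tokens in USD.
interface PricePer1K {
  input: number;
  output: number;
}

// PLACEHOLDER values for illustration only; look up the real rates
// for your model on the OpenAI Pricing page.
const PRICES: PricePer1K = { input: 0.001, output: 0.002 };

// Hypothetical helper: input and output tokens are billed at separate rates.
function estimateCost(usage: Usage, price: PricePer1K): number {
  return (
    (usage.prompt_tokens / 1000) * price.input +
    (usage.completion_tokens / 1000) * price.output
  );
}

// Usage numbers taken from the response shown earlier.
const cost = estimateCost({ prompt_tokens: 144, completion_tokens: 45 }, PRICES);
console.log(cost.toFixed(6)); // 0.000234
```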

It’s important to mention that different models also have different token limits. For example, at the time of writing this, gpt-3.5-turbo has a token limit of 4097 tokens in total (request and response combined). You can find more information about token limits per model in the OpenAI documentation.
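Because the limit covers the request and response combined, the room left for the reply shrinks as your prompt grows. A minimal sketch of that budget check, assuming the 4,097-token limit quoted above for gpt-3.5-turbo; maxResponseTokens is a hypothetical helper, and you would pass a different limit for other models:

```typescript
// Context limit quoted in the article for gpt-3.5-turbo at the time of writing.
const GPT35_TURBO_LIMIT = 4097;

// Hypothetical helper: how many tokens are left for the response
// once the prompt has been counted.
function maxResponseTokens(promptTokens: number, limit: number = GPT35_TURBO_LIMIT): number {
  const remaining = limit - promptTokens;
  if (remaining <= 0) {
    throw new Error(`prompt uses ${promptTokens} tokens, limit is ${limit}`);
  }
  return remaining;
}

// The earlier response used 144 prompt tokens.
console.log(maxResponseTokens(144)); // 3953
```

The result is a natural upper bound for the max_tokens option on that request.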

There are various tools available online to help you calculate the tokens. I really like the OpenAI Tokenizer. However, depending on the model you’re using, it might not always be 100% accurate.

In Python, OpenAI’s tiktoken package really helps with that. Fortunately, talented folks in our ecosystem have ported it to JavaScript/TypeScript! My favorite port is @dqbd/tiktoken. It works very well and is very reliable.

If you are counting tokens in a production environment, I suggest you take a 5%-10% margin for error. These tokenizers are not always accurate. Better safe than sorry!
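Applying that margin is a one-liner. Here’s a sketch that pads a tokenizer’s estimate by a whole-number percentage (10% by default, the upper end of the suggested range) and rounds up; withSafetyMargin is a hypothetical name, and the estimate itself would come from a tokenizer such as the port mentioned above. Working in integer percent avoids floating-point artifacts like 100 * 1.1 evaluating to slightly more than 110:

```typescript
// Hypothetical helper: pad an estimated token count by a safety margin
// (given in whole percent) and round up, since tokenizers may undercount.
function withSafetyMargin(estimatedTokens: number, marginPercent: number = 10): number {
  if (marginPercent < 0) throw new Error("margin must be non-negative");
  return Math.ceil((estimatedTokens * (100 + marginPercent)) / 100);
}

console.log(withSafetyMargin(144)); // 159
console.log(withSafetyMargin(144, 5)); // 152
```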

- Use the OpenAI Tokenizer to learn how tokens work and get an (almost accurate) idea of token usage.

- Use the OpenAI Playground to practice prompt engineering without writing a single line of code.

- Use Pezzo as a centralized prompt management platform to collaborate with your team and iterate quickly, as well as observe and monitor your AI operations and costs. It’s open-source! (disclaimer: I am the founder and CEO).

- Consider taking my AI For JavaScript Developers course on Udemy. I’ve so far educated over 200,000 students on Udemy, and this 2-hour crash course is meant for developers like you and me, who want to add AI to their toolbox. We build real-world apps powered by AI and cover Function Calling, Real-time Data, Hallucinations, Vector Stores, Vercel AI SDK, LlamaIndex, and more!

Ariel

Ariel is the Founder and CEO of Pezzo, an open-source LLMOps platform for developers. With over a decade of experience, including roles as VP R&D at Amplication and Engineering Manager at Serverless, Ariel has dedicated most of his career to understanding and building for developers.

Additionally, Ariel is a best-selling instructor with over 200,000 students.

Builder.io visually edits code, uses your design system, and sends pull requests.
