AI Agent Workflows for Building UI

Most teams have tried AI coding tools by now, with 84% of developers using or planning to use them. The demos look great until you try merging the output into your actual codebase, where it breaks against your component library, fails your linters, and requires a full rewrite before anyone will approve the PR.

This guide explains how AI agent workflows actually work for UI development, what separates production-ready systems from throwaway prototypes, and how to evaluate whether these tools can survive your team's real constraints around design systems, code quality, and deployment pipelines.

What is an AI agent workflow for UI development?

An AI agent workflow for building UI is an automated system where AI handles frontend development tasks from requirements to production-ready code. The agent reads your tickets, designs, and documentation to produce working UI that fits your existing codebase, connecting everything without manual handoffs between tools.

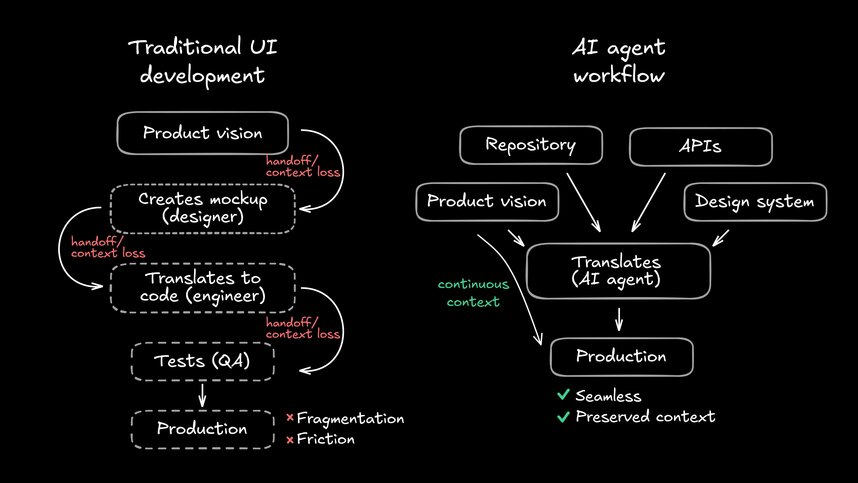

Traditional development follows a relay race model. Designers finish mockups, product managers write specs, engineers translate everything manually. Each handoff loses context. Each translation introduces drift.

How AI agent workflows differ from traditional UI development

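The core difference is where translation happens. In traditional workflows, humans translate at every step: designers translate product vision into mockups, engineers translate mockups into code, and QA translates bugs back into requirements. In an agent workflow, the agent works from the tickets, designs, and code directly, so far fewer of those translations happen by hand.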

| Stage | Traditional Workflow | AI Agent Workflow |
|---|---|---|
| Requirements | PRDs in separate docs | Connected tickets with shared context |
| Design | Static Figma mockups | Live components and tokens |
| Development | Manual code translation | Automated generation with constraints |
| Review | Multiple QA rounds | Real-time preview and iteration |

Compressing these stages matters because every handoff adds days, and well-executed design-to-code processes are reported to cut engineering time by 50%. When you eliminate handoffs, weeks can become hours.

AI agents vs. AI copilots vs. design-to-code tools

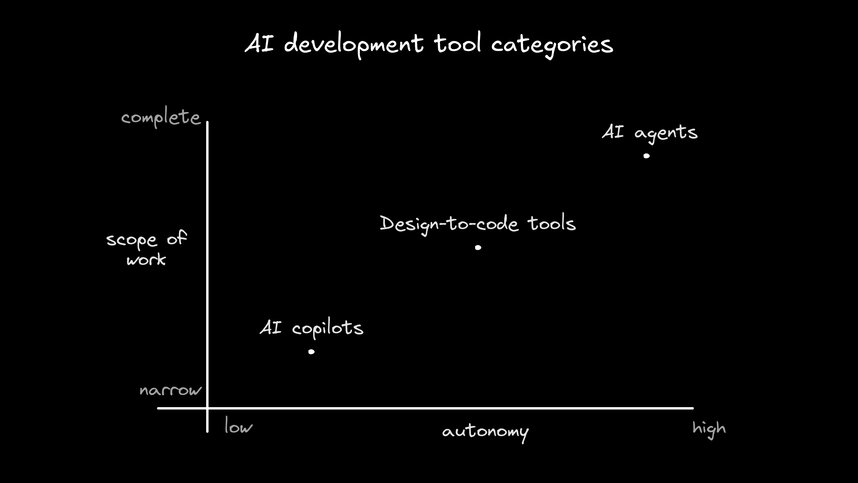

These three categories solve different problems, and the distinction matters when you're choosing tools.

- AI copilots: Tools like GitHub Copilot or Cursor suggest code as you type. You drive, they assist. Great for speeding up known tasks, but you still do the structural work yourself.

- Design-to-code tools: Figma plugins that export designs as code. One-way conversion that often ignores your component library and requires significant cleanup before merging.

- AI agents: Autonomous systems that plan, execute, and iterate on complete UI tasks. They understand your constraints and produce outputs meant to ship, not rebuild.

Copilots help you code faster. Design-to-code tools give you a starting point. Agents handle the whole job.

Key components of AI agent workflows for UI

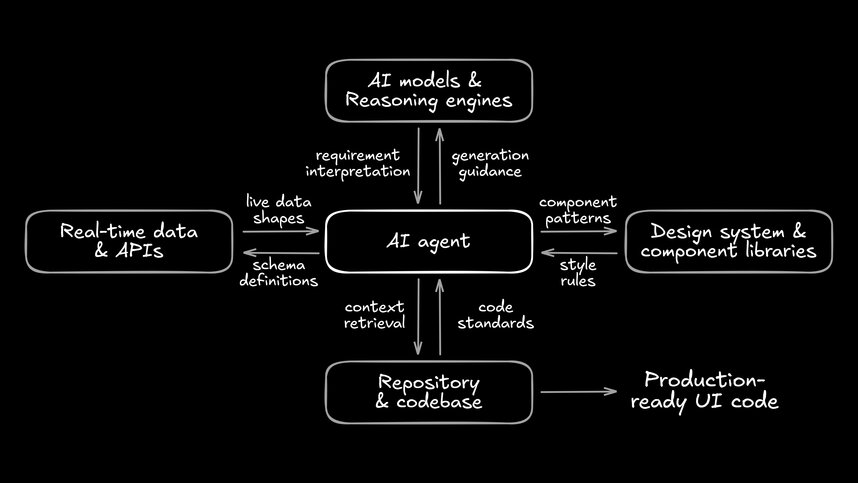

Every agent workflow relies on four pieces working together. Miss one, and the output won't survive code review.

AI models and reasoning engines

Large language models provide the reasoning that lets agents understand requirements and generate appropriate code. The model interprets what you want, plans how to build it, and generates code that implements the design.

Better models produce more accurate first attempts. In a controlled study, developers using an AI assistant completed a task 55.8% faster. Fewer correction cycles mean faster shipping.

Design system integration and component libraries

Agents need access to your actual components, not just generic elements. Design system integration means indexing your component library, design tokens (your colors, spacing, and typography values), and documentation so the agent defaults to approved patterns.

When your design system is properly connected, the agent uses your Button component, not a button it invented. This is what separates production-ready output from throwaway demos.
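As a rough illustration of what "defaulting to approved patterns" could mean in practice, the sketch below models an indexed design system as a small lookup table. The `ComponentEntry` shape, the `@acme/ui` import paths, and the `resolveComponent` helper are all hypothetical, made up for this example and not taken from any particular tool.

```typescript
// Hypothetical shape for one indexed design-system component.
interface ComponentEntry {
  name: string;       // exported component name, e.g. "Button"
  importPath: string; // assumed import location, for illustration only
  intents: string[];  // generic UI intents this component covers
}

// Hypothetical index; a real one would be built from your component library.
const registry: ComponentEntry[] = [
  { name: "Button", importPath: "@acme/ui/Button", intents: ["button", "cta", "submit"] },
  { name: "TextField", importPath: "@acme/ui/TextField", intents: ["input", "text-input"] },
];

type Resolution =
  | { kind: "approved"; component: ComponentEntry }
  | { kind: "unmatched"; intent: string };

// Map a generic intent to an approved component, or report that none exists
// so a human can decide, instead of the agent inventing a one-off element.
function resolveComponent(intent: string, index: ComponentEntry[]): Resolution {
  const wanted = intent.toLowerCase();
  for (const entry of index) {
    if (entry.intents.indexOf(wanted) !== -1) {
      return { kind: "approved", component: entry };
    }
  }
  return { kind: "unmatched", intent: wanted };
}

const cta = resolveComponent("CTA", registry);
const carousel = resolveComponent("carousel", registry);
```

Here `cta` resolves to the indexed `Button`, while `carousel` comes back as `unmatched`. Surfacing the gap explicitly is one way to keep improvised components out of a pull request.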

Repository and codebase connections

Agents must understand your existing code structure. Repository connection means reading your project architecture, following your coding standards, and creating files that fit naturally alongside what's already there.

Without this context, generated code requires rework to merge. With it, the output looks like your team wrote it.

Real-time data and API integrations

Production UIs connect to real data. Agents that only work with static mocks produce interfaces that break the moment they encounter actual API responses, loading states, or error conditions.

Connecting agents to your APIs means generated UIs handle real-world scenarios from the start. Loading indicators, error boundaries, and data formatting all work because the agent saw real response shapes.
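One common way to make loading, error, and success states explicit is a discriminated union. The sketch below is a minimal example under stated assumptions: the `Order` shape and the `describeOrders` helper are hypothetical, and a real component would render elements where this returns text.

```typescript
// Hypothetical response shape, used only for illustration.
interface Order {
  id: string;
  totalCents: number;
}

// Every state the UI can be in while talking to a real API.
type RemoteData<T> =
  | { status: "loading" }
  | { status: "error"; message: string }
  | { status: "success"; data: T };

// Format integer cents as a dollar string with two decimals.
function formatCents(cents: number): string {
  return "$" + (cents / 100).toFixed(2);
}

// Return the text a component would show for each state.
function describeOrders(state: RemoteData<Order[]>): string {
  switch (state.status) {
    case "loading":
      return "Loading orders...";
    case "error":
      return "Could not load orders: " + state.message;
    case "success":
      if (state.data.length === 0) {
        return "No orders yet.";
      }
      return state.data.map((o) => o.id + " " + formatCents(o.totalCents)).join(", ");
    default: {
      // If a new status is added to RemoteData, this assignment stops
      // compiling until the new case is handled above.
      const unreachable: never = state;
      return unreachable;
    }
  }
}
```

Because the union lists every state, a UI generated against it cannot quietly skip the loading or error path. The empty-list branch covers a case that static mocks tend to hide.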

How AI agent workflows operate in UI development

Understanding the execution flow helps you know what agents can handle and where human judgment still matters.

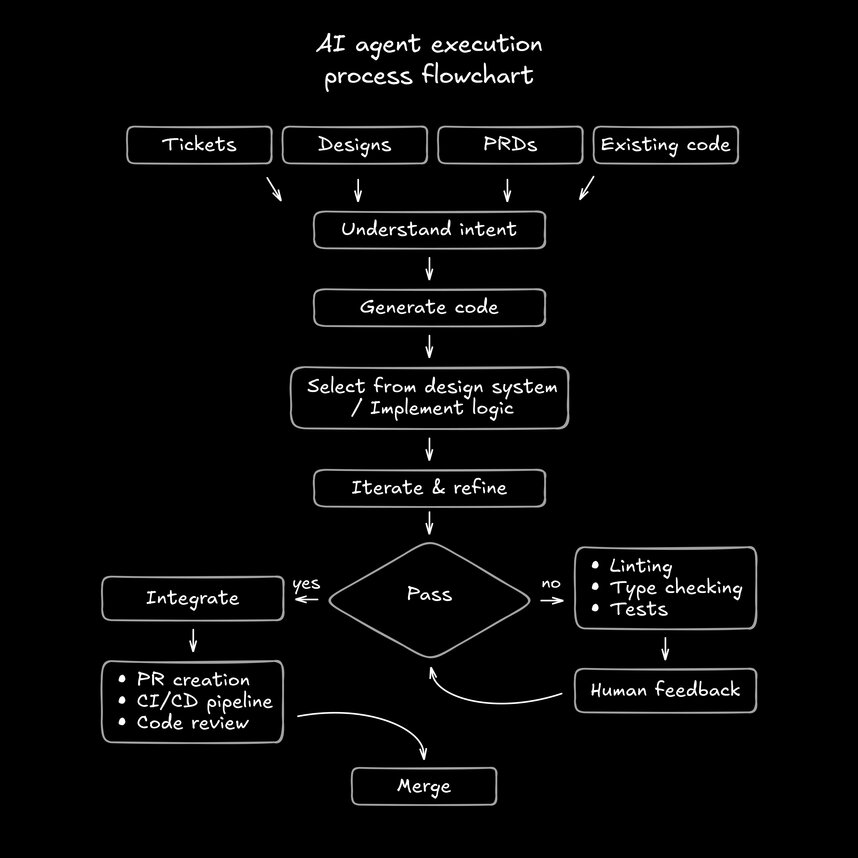

Understanding design intent and requirements

The agent starts by pulling inputs from multiple sources: tickets, designs, PRDs, existing code. It synthesizes these into an understanding of what needs building. Constraints like responsive breakpoints, accessibility requirements, and performance targets get identified early.

Context quality determines output quality. Rich inputs produce better first attempts.

Generating UI code from context

With requirements understood, the agent generates an initial implementation. It selects components from your design system, handles layout and responsiveness, and implements any business logic from the requirements.

The output should be complete and working, not a sketch that needs rebuilding.

Iterating based on feedback and constraints

Generated code runs through your automated checks: linting, type checking, tests. The agent refines based on failures and human feedback without starting from scratch.

First attempts are rarely perfect. Good agents improve quickly with guidance.
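The check-and-refine cycle described above can be sketched as a bounded loop. This is an illustrative outline only: `runChecks` and `revise` are hypothetical hooks standing in for your linter, type checker, and test runner, and for the agent's next attempt. The toy implementations at the bottom exist only so the example runs on its own.

```typescript
// Result of one automated check (lint, type check, or tests).
interface CheckResult {
  check: string;
  passed: boolean;
  detail: string;
}

// Hypothetical hooks: runChecks wraps your tooling, revise asks the agent
// for a new attempt given the failures from the previous one.
type RunChecks = (code: string) => CheckResult[];
type Revise = (code: string, failures: CheckResult[]) => string;

interface LoopOutcome {
  code: string;
  attempts: number;
  passed: boolean;
}

// Re-run checks after each revision, stopping at the first clean run or
// after maxAttempts so a stuck agent escalates to a human instead of looping.
function refineUntilGreen(
  initial: string,
  runChecks: RunChecks,
  revise: Revise,
  maxAttempts: number
): LoopOutcome {
  let code = initial;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const failures = runChecks(code).filter((r) => !r.passed);
    if (failures.length === 0) {
      return { code: code, attempts: attempt, passed: true };
    }
    if (attempt < maxAttempts) {
      // Revise the existing code; do not start from scratch.
      code = revise(code, failures);
    }
  }
  return { code: code, attempts: maxAttempts, passed: false };
}

// Toy stand-ins so the sketch is runnable.
const toyChecks: RunChecks = (code) => [
  { check: "no-inline-style", passed: code.indexOf("style=") === -1, detail: "inline style found" },
];
const toyRevise: Revise = (code) => code.replace(' style="color:red"', "");

const outcome = refineUntilGreen('<Button style="color:red">Save</Button>', toyChecks, toyRevise, 3);
```

The attempt cap is the key design choice. When the loop ends with `passed: false`, the failures go to a person for review.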

Integrating outputs into existing repositories

The final step produces a reviewable pull request with clean diffs. The code integrates into your CI/CD pipeline, passes automated checks, and is ready for human review.

This is where most AI tools fall down: they produce demos that can't survive your quality gates. Agent workflows succeed when the output merges like any other commit.

Why AI agent workflows matter for UI development

Faster iteration from concept to production

Traditional cycles from design to deployed UI take days or weeks. Agent workflows can compress this to hours, and teams report delivering features three times faster with AI design-to-code tools. Product managers can validate ideas with working prototypes the same day, and designers see their intent implemented immediately instead of waiting in the engineering queue.

Reduced handoff friction between design and engineering

Every handoff introduces drift. Designers specify one thing, engineers interpret another, QA catches the gap. Agent workflows eliminate this by working directly from design files using your actual components.

Improved design system consistency

When agents default to your design system, every generated UI reinforces consistency. No more one-off implementations that drift from standards. No more policing developers to use approved components. The design system becomes the path of least resistance.

How to implement AI agent workflows for UI development

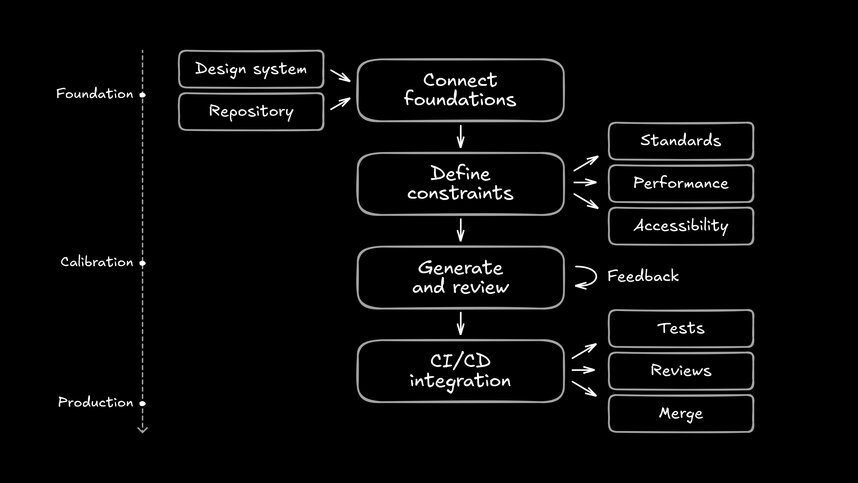

Start with foundations and expand based on success. Trying to do everything at once usually means nothing works.

Step 1: Connect your design system and repository

Index your component library and connect to your Git repository. The agent needs to understand what components exist, how they're used, and what patterns your codebase follows.

This foundation determines output quality. Skip it, and you get generic code.

Step 2: Define UI requirements and constraints

Specify your coding standards, performance requirements, and design principles as constraints the agent must follow. Include linting rules, accessibility standards, and framework conventions.

Clear constraints produce predictable outputs.
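Constraints are easier to enforce when they are written down as data and not left as tribal knowledge. The following is a minimal sketch of what such a definition might look like. The `AgentConstraints` schema, its field names, and the `@acme/ui/` prefix are assumptions made for this example and do not reflect any specific tool's configuration format.

```typescript
// Hypothetical constraint schema; every field name here is illustrative.
interface AgentConstraints {
  framework: "react" | "vue" | "svelte";
  lintConfigPath: string;          // path to your lint configuration
  accessibilityStandard: string;   // e.g. "WCAG 2.1 AA"
  maxBundleKb: number;             // performance budget for a generated page
  allowedImportPrefixes: string[]; // where generated code may import from
}

const constraints: AgentConstraints = {
  framework: "react",
  lintConfigPath: ".eslintrc.json",
  accessibilityStandard: "WCAG 2.1 AA",
  maxBundleKb: 250,
  allowedImportPrefixes: ["@acme/ui/", "react"],
};

// Return human-readable problems; an empty list means the definition is usable.
function validateConstraints(c: AgentConstraints): string[] {
  const problems: string[] = [];
  if (c.lintConfigPath.trim() === "") {
    problems.push("lintConfigPath must not be empty");
  }
  if (c.accessibilityStandard.trim() === "") {
    problems.push("accessibilityStandard must not be empty");
  }
  if (!(c.maxBundleKb > 0)) {
    problems.push("maxBundleKb must be a positive number");
  }
  if (c.allowedImportPrefixes.length === 0) {
    problems.push("allowedImportPrefixes must list at least one prefix");
  }
  return problems;
}

// Simple prefix match: an import is allowed if it starts with any listed prefix.
function isImportAllowed(importPath: string, c: AgentConstraints): boolean {
  return c.allowedImportPrefixes.some((prefix) => importPath.indexOf(prefix) === 0);
}
```

With this definition, `isImportAllowed("@acme/ui/Button", constraints)` is `true` and `isImportAllowed("lodash", constraints)` is `false`. Prefix matching is deliberately loose (`"react"` also admits `"react-dom"`), so a stricter list may suit some teams better.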

Step 3: Generate and review UI outputs

Start with a contained task: a single component or page update. Generate the output, review the code, and provide feedback. This cycle calibrates the agent to your standards.

Human review remains essential. Agents handle execution; engineers handle judgment.

Step 4: Integrate into your CI/CD pipeline

Once outputs consistently meet your standards, integrate agent-generated code into your normal deployment process. Run tests, perform reviews, merge through existing workflows.

The goal is agent outputs that look like your team wrote them.

Where most AI agent tools fall short

Even well-designed agent workflows hit common failure modes. Knowing these helps you evaluate tools honestly.

Generic outputs that ignore design systems

Many tools promise instant code but improvise when they don't understand your components. The result looks right until you examine it: mismatched buttons, off-scale spacing, patterns no one recognizes.
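Drift of this kind can be caught mechanically. As a small, hedged example, the check below scans generated source for hard-coded six-digit hex colors and compares them against a token set. The token names and values are hypothetical, and a production check would more likely live in a lint rule than in a standalone function.

```typescript
// Hypothetical token set; real values would come from your design system.
const colorTokens: { [name: string]: string } = {
  "color.primary": "#0a66c2",
  "color.danger": "#d93025",
};

interface Finding {
  value: string;             // the hard-coded color, lowercased
  suggestion: string | null; // matching token name, or null if off-palette
}

// Find six-digit hex colors in generated source. Each one is a finding:
// either it duplicates a token (use the token instead) or it is off-palette.
function findHardCodedColors(source: string, tokens: { [name: string]: string }): Finding[] {
  const hexPattern = /#[0-9a-fA-F]{6}\b/g;
  const matches: string[] = source.match(hexPattern) || [];
  const names = Object.keys(tokens);
  return matches.map((raw) => {
    const value = raw.toLowerCase();
    const owners = names.filter((n) => tokens[n].toLowerCase() === value);
    return { value: value, suggestion: owners.length > 0 ? owners[0] : null };
  });
}

const generatedCss = "a { color: #0A66C2; } b { color: #123456; }";
const findings = findHardCodedColors(generatedCss, colorTokens);
```

In this example the first color maps back to `color.primary`, while `#123456` has no matching token and gets a `null` suggestion. A reviewer would otherwise have to spot that invented value by eye.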

Code that can't survive code review

A demo has no value if it can't merge. When agent exports ignore your file structure, testing conventions, or readability standards, the experiment becomes a dead end.

No connection to production constraints

Without real data, everything looks better than it is. Placeholders hide the messy realities of latency, authentication, and error states. These details decide whether a product feels fast or broken.

How Builder.io enables AI agent workflows for UI

Builder.io operates as an AI frontend engineer that connects directly to your repository, design system, and tools your team already uses. The platform indexes your design system so components, tokens, and documentation become the default way UI gets built.

When you connect a Figma design or Jira ticket, Builder.io reasons about intent and constraints before generating code that fits your existing codebase. The output is a reviewable pull request, not a throwaway prototype.

Repository-native workflow with visual editing

Builder.io works directly in your codebase while providing visual tools. PMs and designers preview, tweak, and align layouts in the same system engineers use.

Design system integration by default

Your components and tokens are indexed, so generated code uses your approved patterns. No improvisation and no drift.

Production-ready code from day one

Each output lives as a small, reviewable change. Linters, type checks, and CI run as usual. The path from prototype to production feels like normal iteration, not a rebuild.

Common questions about AI agent workflows for UI

Can AI agents build production UI without frontend developers?

No. Agents handle routine assembly work, freeing developers to focus on architecture, complex interactions, and system design. Engineering judgment remains essential: agents extend your team's capacity, but they don't replace the people who know when something is actually ready to ship.

How do AI agent workflows connect to design tools like Figma?

Agents read Figma files through plugins and APIs, translating designs using your component library. The workflow preserves design intent while generating code that matches your standards. Designers work in familiar tools while agents handle the translation.

What makes AI agent workflows different from automation scripts?

Traditional automation follows rigid scripts for repetitive tasks, whereas AI agents reason about goals, adapt to constraints, and handle complex generation that requires understanding context. Scripts simply repeat steps; agents make decisions.

Getting started with AI agent workflows

Start small. Pick one page or flow and generate it inside your real repo using your components, your data, and your normal review process.

You'll see the difference immediately: cleaner diffs, faster reviews, and a shorter path from concept to commit.

If your team is tired of rebuilding prototypes that looked great in demos but couldn't survive review, sign up for Builder.io and see how agent workflows fit your stack.