Today’s AI agents can solve narrow tasks, but they can’t hand work to each other without custom glue code. Every hand-off is a one-off patch.

To solve this problem, Google recently released the Agent2Agent (A2A) Protocol, a small, open standard that lets one agent discover another, authenticate with it, and stream results back. No shared prompt context, no bespoke REST endpoints, and no re-implementing auth for the tenth time.

The spec is barely out of the oven, and plenty may change, but it’s a concrete step toward less brittle, more composable agent workflows.

If you’re interested in why agents need a network-level standard, how A2A’s solution works, and the guardrails to run A2A safely, keep scrolling.

Modern apps already juggle a cast of “copilots.” One drafts Jira tickets, another triages Zendesk, a third tunes marketing copy.

But each AI agent lives in its own framework, and the moment you ask them to cooperate, you’re back to copy-pasting JSON or wiring short-lived REST bridges. (And let’s be real: copy-pasting prompts between agents is the modern equivalent of emailing yourself a draft-final-final_v2 zip file.)

The Model Context Protocol (MCP) solved only part of that headache. MCP lets a single agent expose its tool schema so an LLM can call functions safely. Trouble starts when that agent needs to pass the whole task to a peer outside its prompt context. MCP stays silent on discovery, authentication, streaming progress, and rich file hand-offs, so teams have been forced to spin up custom micro-services.

Here’s where the pain shows up in practice:

- Unstable hand-offs: A single extra field in a DIY “handover” JSON can break the chain.

- Security gridlock: Every in-house agent ships its own auth scheme; security teams refuse to bless unknown endpoints.

- Vendor lock-in: Some SaaS providers expose agents only through proprietary SDKs, pinning you to one cloud or framework.

That brings us to Agent2Agent (A2A). Think of it as a slim, open layer built on JSON-RPC. It defines just enough—an Agent Card for discovery, a Task state machine, and streamed Messages or Artifacts—so any client agent can negotiate with any remote agent without poking around in prompts or private code.

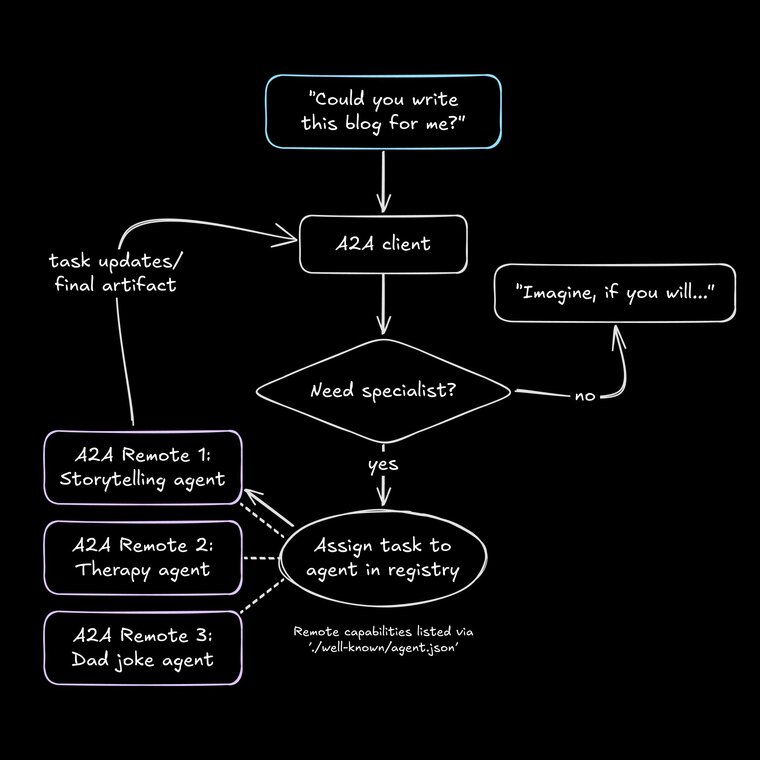

(^A2A use case example from Google’s announcement post.)

A2A doesn’t replace MCP; it sits above it, filling the “between-agent” gap that has stalled real-world adoption. Think of agents like workers at an office: MCP gives them employee handbooks, fax machines, and filing cabinets; A2A lets them chit-chat in the break room.

The goal of A2A is simple: make multi-agent orchestration feel routine rather than risky, while still giving frameworks and vendors room to innovate under the hood.

Before we walk through a full A2A exchange, it helps to tag the two players clearly.

The client agent is the side that lives inside your stack: maybe a function in Genkit, a LangGraph node, or even an n8n workflow. It discovers a remote agent’s card, decides whether it can satisfy the announced auth method, and then creates a task by sending a JSON-RPC message such as createTask.

From that moment on the client acts as the task’s shepherd: it listens for status events, forwards any follow-up input the remote requests, and finally collects artifacts for downstream use.
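The client's first two moves, checking the card and opening a task, can be sketched in a few lines of Python. This is a minimal sketch rather than a reference client: the card fields (`name`, `url`, `capabilities`, `auth`), the `SUPPORTED_AUTH` set, the example URL, and both helper names are hypothetical, and `createTask` is simply the method name used in this article, so check the current spec for the exact method and field names.

```python
import json
import uuid

# Auth schemes this (hypothetical) client knows how to satisfy.
SUPPORTED_AUTH = {"bearer", "mtls"}


def can_authenticate(card: dict) -> bool:
    """Return True if the card announces at least one auth scheme we support."""
    announced = {scheme.lower() for scheme in card.get("auth", [])}
    return bool(announced & SUPPORTED_AUTH)


def build_create_task(payload: dict) -> dict:
    """Wrap a task payload in a JSON-RPC 2.0 request envelope."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),  # correlates the response with this request
        "method": "createTask",   # method name as used in this article (assumption)
        "params": {"input": payload},
    }


# Illustrative card, shaped like what /.well-known/agent.json might return.
card = {
    "name": "tax-check",
    "url": "https://agents.example.com/a2a",
    "capabilities": ["streaming"],
    "auth": ["Bearer"],
}

if can_authenticate(card):
    request = build_create_task({"invoice_id": "INV-1"})
    # In a real client this body would be POSTed to card["url"].
    print(json.dumps(request, indent=2))
```

Keeping the auth check separate from request building means the client can walk away from an incompatible agent before it sends anything.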

Think of the remote agent as a specialized micro-service that just happens to speak A2A. It might be running in Cloud Run, Lambda, or on a bare VPS. Once it receives a task, it owns the heavy lifting, whether that means querying a vector store, fine-tuning a model, or exporting a PDF.

Throughout execution, it streams back TaskStatusUpdate and TaskArtifactUpdate events. Crucially, the remote can’t flip the connection: it can ask for more input (status: input-required) from the client, but it never becomes the caller.

- Only the client initiates JSON-RPC requests.

- Only the remote updates task state.

- Either side can terminate the stream if something goes wrong, but responsibility for cleanup (e.g., deleting temp files) lies with the remote.
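One way to picture the client's side of these rules is as a read-only mirror of the task that is updated only by what the remote streams. The sketch below assumes hypothetical event dictionaries with `task_id`, `type`, `status`, and `artifact` keys; the real wire format may differ.

```python
from dataclasses import dataclass, field


@dataclass
class TaskView:
    """Client-side, read-only picture of a remote task."""
    task_id: str
    status: str = "pending"
    artifacts: list = field(default_factory=list)
    needs_input: bool = False


def apply_event(view: TaskView, event: dict) -> TaskView:
    """Fold one streamed event into the client's view of the task.

    The client never chooses a status itself; it only mirrors what the
    remote reports.
    """
    if event["task_id"] != view.task_id:
        raise ValueError("event belongs to a different task")
    if event["type"] == "TaskStatusUpdate":
        view.status = event["status"]
        view.needs_input = event["status"] == "input-required"
    elif event["type"] == "TaskArtifactUpdate":
        view.artifacts.append(event["artifact"])
    else:
        raise ValueError(f"unknown event type: {event['type']}")
    return view


# Hypothetical stream: the remote starts work, then asks for more input.
events = [
    {"task_id": "t1", "type": "TaskStatusUpdate", "status": "processing"},
    {"task_id": "t1", "type": "TaskStatusUpdate", "status": "input-required"},
]
view = TaskView(task_id="t1")
for event in events:
    apply_event(view, event)
print(view.status, view.needs_input)
```

When `needs_input` is set, the client's job is to send a new request carrying the missing input; the remote still never calls the client.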

A mental model that works well is “front-of-house vs back-of-house.” The client stays in front, taking new orders and relaying clarifications; the remote is the kitchen, head-down until the dish is ready. (The analogy holds for failures, too: if the remote burns the soufflé, the client still has to smile and comp dessert.)

With those lanes marked, we can zoom in on the data structures and security rails that make the hand-off safe.

When people first see A2A they often ask, “Wait, doesn’t MCP already cover agent tooling?” Almost—but not quite.

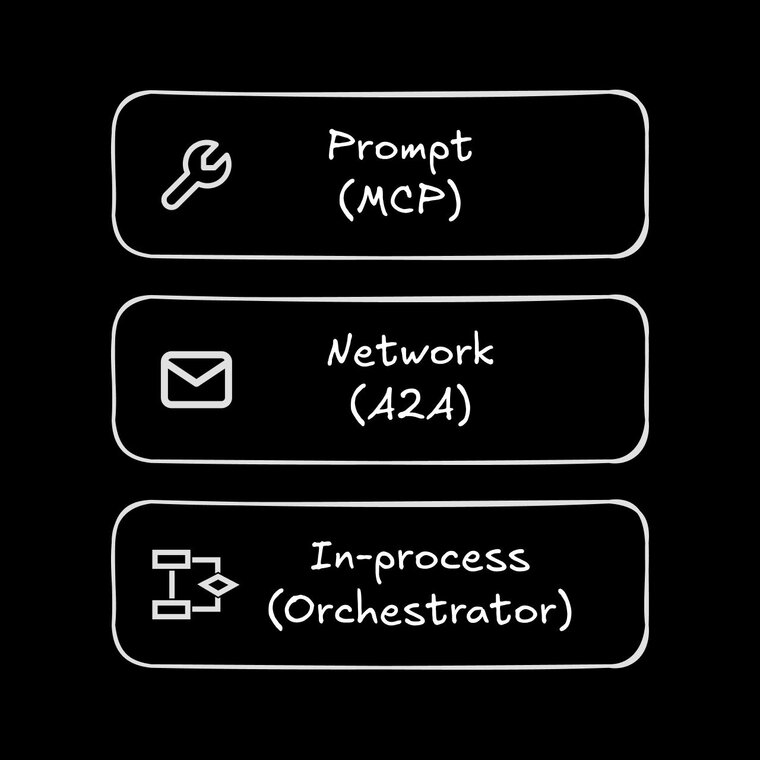

A quick map of the layers makes the distinction clear:

- Inside a single agent (prompt level): Here the agent needs a schema so its model can call a tool. That’s MCP territory: JSON schemas, function names, argument validation, prompt-injection worries.

- Between agents (network level): As soon as an agent wants to hand the whole task to a peer, MCP has nothing to say about discovery, auth, or streamed artifacts. That gap is what A2A fills with Agent Cards, Tasks, and status events.

- Inside your process (workflow level): Frameworks like LangGraph, CrewAI, and AutoGen wire steps together in memory. They’re great for small chains on one machine, but once you need to cross a network boundary—or mix languages and vendors—you step out of their sandbox and into A2A.

Think of it like this:

- MCP is the API contract inside a single micro-service.

- A2A is the HTTP layer between micro-services.

- LangGraph et al. are the workflow engine that decides when each micro-service gets called.

At scale, most real systems end up using all three. A LangGraph flow might call an internal Python agent (in-process), then hand the job to a third-party finance agent via A2A, and that finance agent might rely on MCP to trigger a spreadsheet-export tool deep inside its own prompt.

Keeping these boundaries straight prevents duplicated effort: you don’t bolt custom auth onto every MCP tool, and you don’t overload A2A with prompt schemas it was never meant to parse.

With the layers sorted, we can dig into the wire format itself—the Agent Card, the Task state machine, and how messages and artifacts move across the stream.

If you can picture buying a book on Amazon, you already understand the four data shapes A2A moves across the wire.

Take a look:

| Your Amazon flow | A2A primitive | What it contains |

|---|---|---|

| Product listing page: You browse, see what’s for sale, learn payment options | Agent Card (/.well-known/agent.json) |

Agent ID, description, capabilities list, supported auth method, optional cryptographic signature |

| Order confirmation / invoice: Click “Buy Now,” receive an order ID | Task (created via createTask) |

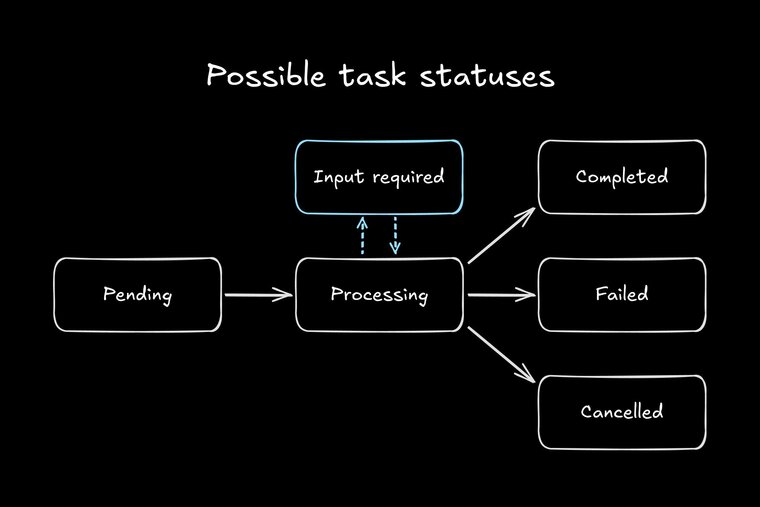

task_id, input payload, current status |

| Shipping-status pings: “Order packed,” “out for delivery,” “arriving today” | Message (TaskStatusUpdateEvent) |

Role (agent or client), text, optional small files |

| Package on your doorstep: The thing you bought | Artifact (TaskArtifactUpdateEvent) |

Typed payload: TextPart, FilePart, or DataPart |

- Browse the listing: The client fetches the Agent Card once. If the “features” (capabilities) and “checkout” (auth) look good, it proceeds.

- Place the order: The client sends a createTask JSON-RPC request (like clicking “Buy Now”). The remote agent replies with a task_id, your order number for the job.

- Watch the tracking emails: The remote streams Messages over Server-Sent Events: pending, processing, maybe input-required (a “signature needed” moment). The client can answer with addInput, just as you’d update delivery instructions.

- Receive the package: When status flips to completed, Artifact events deliver the payload: PDF report, PNG asset, JSON data, or whatever was promised.

- Close the loop: If the task fails or is canceled, the remote marks it failed or canceled and no artifacts ship (like Amazon refunding an unfulfilled order).
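The status walk in these steps can be sketched as a small state machine. The status names come from the walkthrough above; the transition table itself is an assumption made for illustration, not something copied from the spec.

```python
# Allowed status transitions (assumed for illustration).
TRANSITIONS = {
    "pending": {"processing", "failed", "canceled"},
    "processing": {"input-required", "completed", "failed", "canceled"},
    "input-required": {"processing", "failed", "canceled"},
    "completed": set(),
    "failed": set(),
    "canceled": set(),
}

# Statuses with no outgoing transitions end the task.
TERMINAL = {status for status, nxt in TRANSITIONS.items() if not nxt}


def advance(current: str, new: str) -> str:
    """Return the new status, or raise if the move is not allowed."""
    if new not in TRANSITIONS.get(current, set()):
        raise ValueError(f"illegal transition: {current} -> {new}")
    return new


def run(statuses) -> str:
    """Replay a sequence of reported statuses, starting from 'pending'."""
    state = "pending"
    for status in statuses:
        state = advance(state, status)
    return state


final = run(["processing", "input-required", "processing", "completed"])
print(final, final in TERMINAL)
```

A client that validates transitions this way can flag a misbehaving remote, for example one that reports processing after completed, instead of silently accepting the update.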

By framing the exchange this way, you can see why A2A keeps the spec minimal: it only defines what every shopper (client) and seller (remote) absolutely need—catalog, order, tracking, delivery—while leaving the “warehouse internals” (model prompts, tool schemas) to MCP or any other mechanism the seller chooses.

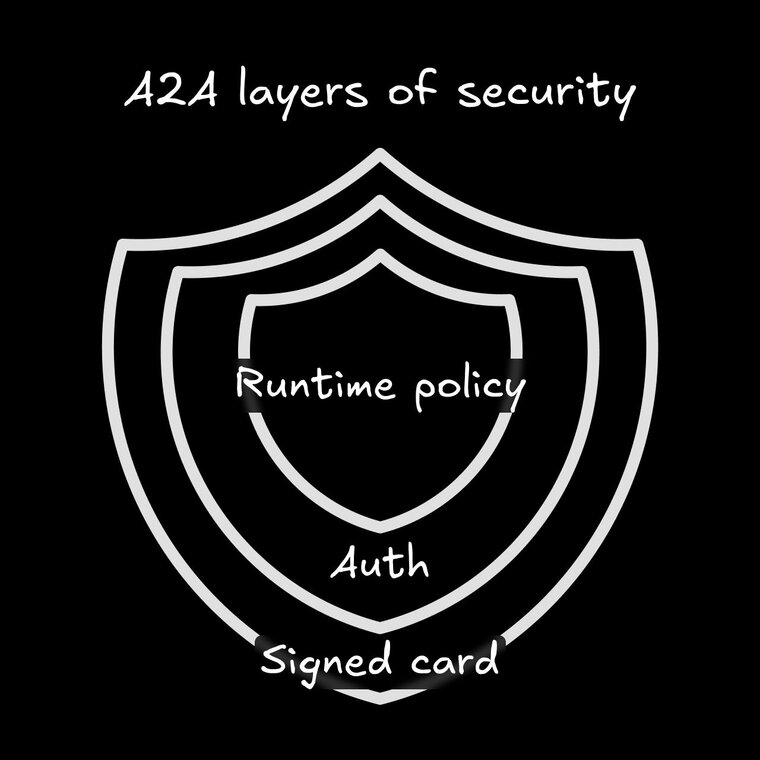

A2A keeps its on-wire spec thin, but production systems still need three layers of protection and visibility.

- Signed agent cards: Add a JSON Web Signature (JWS) to the card and publish the signer’s public key. Clients “pin” that key; if anyone swaps the card in transit, signature verification fails and the call is dropped. “Trust me, bro” isn’t a real security policy.

- Auth choices: Demos usually rely on simple Bearer tokens, but you can level up to mutual TLS (like a secret handshake without the finger guns) or plug into your company’s single sign-on flow.

- Runtime policy: A remote agent can reject oversized or risky payloads before its model ever runs. A common guard looks like: “accept only JSON or PNG files under 5 MB.” (This is a lot like Zod schema validation in MCP.)
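The runtime-policy guard quoted in the last bullet might look something like the sketch below. The function name, the MIME-type strings, and the choice to check the PNG signature bytes are illustrative assumptions; a production guard would likely validate more than this.

```python
import json

MAX_BYTES = 5 * 1024 * 1024       # "under 5 MB"
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"  # the fixed 8-byte PNG file signature


def accept_payload(mime_type: str, data: bytes) -> bool:
    """Accept only well-formed JSON or PNG payloads under the size cap."""
    if len(data) >= MAX_BYTES:
        return False
    if mime_type == "application/json":
        try:
            json.loads(data.decode("utf-8"))
        except (UnicodeDecodeError, json.JSONDecodeError):
            return False
        return True
    if mime_type == "image/png":
        # Don't trust the declared type alone; check the file signature too.
        return data.startswith(PNG_MAGIC)
    return False  # everything else is rejected by default


print(accept_payload("application/json", b'{"invoice": 42}'))
print(accept_payload("application/pdf", b"%PDF-1.7"))
```

Rejecting by default keeps the allow-list short and makes every new payload type an explicit decision.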

Each status or artifact event already carries timestamps, task_id, and an optional trace header. Wrap your A2A client in an OpenTelemetry middleware and you get end-to-end spans out of the box—no hacking JSON.

Pipe those spans into your observability stack, and you should be able to answer, “Which remote agent turned slow at 3 p.m.?” before customers notice.
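Even without a tracing backend, the timestamps on those events are enough for a rough latency check. The sketch below is a stand-in for real OpenTelemetry spans, not a replacement: it assumes hypothetical event dictionaries with `agent`, `task_id`, and an ISO 8601 `timestamp`, and reports each agent's slowest task.

```python
from collections import defaultdict
from datetime import datetime


def slowest_agents(events):
    """Return (agent, seconds) pairs for each agent's worst task, slowest first."""
    first, last = {}, {}
    for event in events:
        key = (event["agent"], event["task_id"])
        ts = datetime.fromisoformat(event["timestamp"])
        first[key] = min(first.get(key, ts), ts)
        last[key] = max(last.get(key, ts), ts)

    worst = defaultdict(float)
    for key, start in first.items():
        agent = key[0]
        duration = (last[key] - start).total_seconds()
        worst[agent] = max(worst[agent], duration)
    return sorted(worst.items(), key=lambda pair: pair[1], reverse=True)


# Hypothetical events from two remote agents.
events = [
    {"agent": "finance", "task_id": "t1", "timestamp": "2025-01-01T15:00:00+00:00"},
    {"agent": "finance", "task_id": "t1", "timestamp": "2025-01-01T15:00:42+00:00"},
    {"agent": "pdf", "task_id": "t2", "timestamp": "2025-01-01T15:00:00+00:00"},
    {"agent": "pdf", "task_id": "t2", "timestamp": "2025-01-01T15:00:03+00:00"},
]
print(slowest_agents(events))
```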

Today, discovery of A2A remotes is DIY:

- YAML files for internal teams (registry.yaml checked into repo).

- Vertex AI catalogue: Tick “Publish” and Google hosts the card in a private directory.

- Emerging public hubs: LangChain and Flowise communities are hacking on npm-style registries, but there’s no global “verified badge” yet.

Until those hubs mature, most companies will treat third-party agents like SaaS vendors: security questionnaires, software bill of materials (SBOMs), and limited network scopes.

MCP exposes every tool schema in natural-language prompts, so injection and argument-tampering are daily worries.

A2A hides all of that behind the remote’s fence; the client sees only high-level tasks and capped artifacts. You still need to trust the remote’s code, but your prompt is never on the table, which eliminates an entire class of exploits.

The takeaways from all this: Sign what you publish, pin what you trust, trace every hop, and keep payload limits sane. With those guardrails in place, A2A is no riskier than calling a well-behaved REST service, and a lot more flexible when you add new agents tomorrow.

Here’s what a mature A2A ecosystem could look like:

- Browse an “Agent Mall.” You open CoolAgentMall.dev, search “tax compliance,” and see live agents with star-ratings and signed cards. One click drops the URL into your private registry—no SDKs, no secrets.

- Drag-and-drop chains. In Flowise (or n8n) you drag a green Tax-Check (A2A) block after “Generate Invoice,” hit Run, and watch a JSON artifact stream back with the correct jurisdiction codes—zero glue code on your side.

That future isn’t here yet. Here’s where things stand today:

- ~50 vendors have announced support, but most agents still exist in the “DM me for a demo” stage.

- LangGraph, CrewAI, and AutoGen adapters are solid; Flowise and n8n remain on community betas.

- No public registry yet—teams rely on registry.yaml files or Vertex AI’s private catalogue.

- Very few agents ship signed cards, and rate-limits or billing caps are DIY middleware.

- Performance data is anecdotal; Google’s reference server adds ~30 ms per hop in local tests.

A2A is ready for prototypes and internal workflows, but consumer apps and regulated stacks will want extra guardrails until registries and security standards mature.

A2A is a good fit for:

- Cross-vendor workflows: Your product-manager agent needs a finance-forecast agent from another company. A2A gives them a shared handshake, auth, and streaming without exposing prompt guts.

- Security-sensitive black boxes: A vendor won’t share its model prompts but will expose a signed Agent Card. You still get a clean contract plus task-level audit trails.

- Hybrid stacks & mixed languages: A TypeScript front end can call a Python data-science agent—or the other way around—because only JSON-RPC crosses the wire.

- Long-running jobs that need progress updates: Build pipelines, PDF rendering, data exports: stream status and artifacts over Server-Sent Events instead of polling a custom REST endpoint.

It’s overkill for:

- Everything runs in one process: If your whole flow sits inside your orchestrator of choice, stick with the framework’s in-memory calls.

- Tiny helper scripts: A cron job that pings a lone OpenAI function doesn’t need discovery or streaming; direct API calls are lighter.

- One-off data pulls: For a weekly export where latency and chatter don’t matter, a plain REST endpoint is easier to monitor.

- Schema-heavy, prompt-light tools: When the main need is validating complex arguments inside a prompt, MCP alone is the right layer.

Reach for A2A when a task crosses a network boundary and you care about trust, live progress, or swapping in new specialist agents later. Skip it when a well-documented API already fits the bill, or your whole stack fits on a Raspberry Pi taped to your monitor.

A2A doesn’t add new magic to models. Instead, it adds a dependable handshake so your existing agents can meet, swap work, and keep a tidy audit trail.

The registry story is still DIY and many agents live behind private demos, but the plumbing is solid enough for prototypes and internal workflows today.

Less glue, more interesting work.

